机器学习算法machine_learning_algorithms_1

make sure all our blog md file under post.

文前申明:机器学习算法涉及到很多公式、数学概念。为统一表述,特申明:

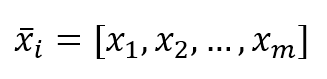

- 关于向量、矩阵:矩阵标记采用\mathbf{X} $\mathbf{X}$;向量标记采用 \vec{x} $\vec{x}$;行向量的元素是用逗号隔开$\vec{x} = [ x_1,x_2,\ldots,x_m ]$;列向量则用分号隔开$\vec{x} = [ x_1;x_2;\ldots;x_n ]$。

那么$n \times m$矩阵$\mathbf{X}$,可表示为由行向量作为元素组成的列向量,即:

$$\mathbf{X}=[\vec{x_1};\vec{x_2};\ldots;\vec{x_n}] \\ where \ \vec{x_i}=[x_{i1},x_{i2},\ldots,x_{im}] \\ where \ i \in \lbrace 1,2,\ldots,n \rbrace$$那么$n \times m$矩阵$\mathbf{X}$,可表示为由列向量作为元素组成的行向量,即:

$$\mathbf{X}=[\vec{x_1},\vec{x_2},\ldots,\vec{x_m}] \\ where \ \vec{x_j}=[x_{1j};x_{2j};\ldots;x_{nj}] \\ where \ j \in \lbrace 1,2,\ldots,m \rbrace$$区间用小括号()表示;有序的元素用中括号[],例如向量中的元素就是有顺序要求的;无序的元素用大括号{}括起来,例如集合中的元素就是不要求顺序的。

| algorithms |

|---|

监督学习、非监督学习、强化学习 分类、回归 参数学习、无参数学习

要知道这本书、这个知识的总体框架,而不一定纠结于细节。当然,若某些细节妨碍对总体框架的理解,那必须掌握他。

应该建立一个表格,把所有机器学习算法(为分清主次,先常用的)的原理、相同点、不同点、计算效率、精度、使用场合任务等等表现出来。

Machine Learning Algorithms

Giuseppe Bonaccorso

1 A Gentle Introduction to Machine Learning机器学习的简单介绍

1.1 Introduction - classic and adaptive machines介绍-经典和适应性机器

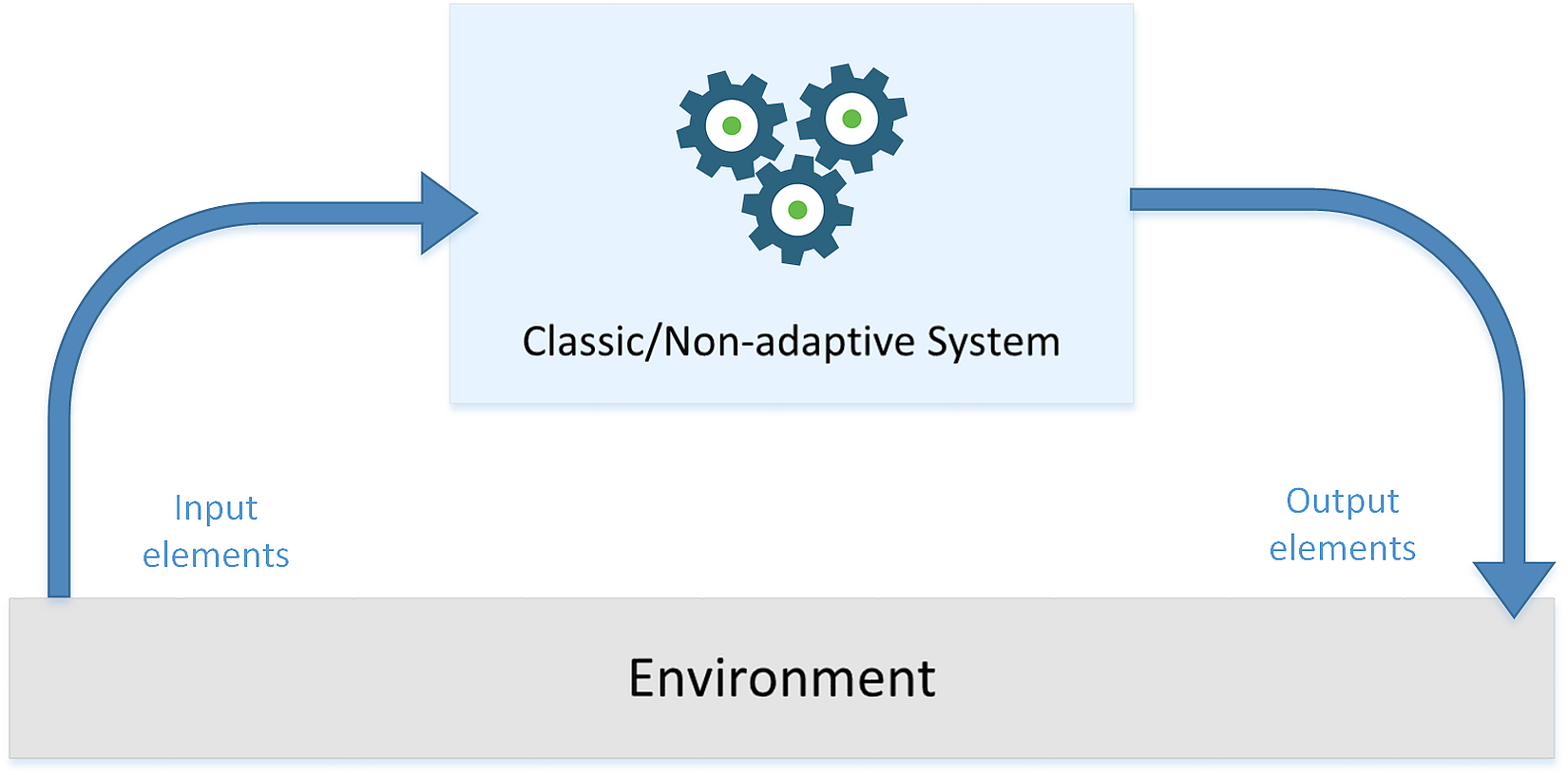

In the following figure, there's a generic representation of a classical system that receives some input values, processes them, and produces output results:在下面的图中,有一个经典系统的通用表示,它接收一些输入值,处理它们,并产生输出结果:

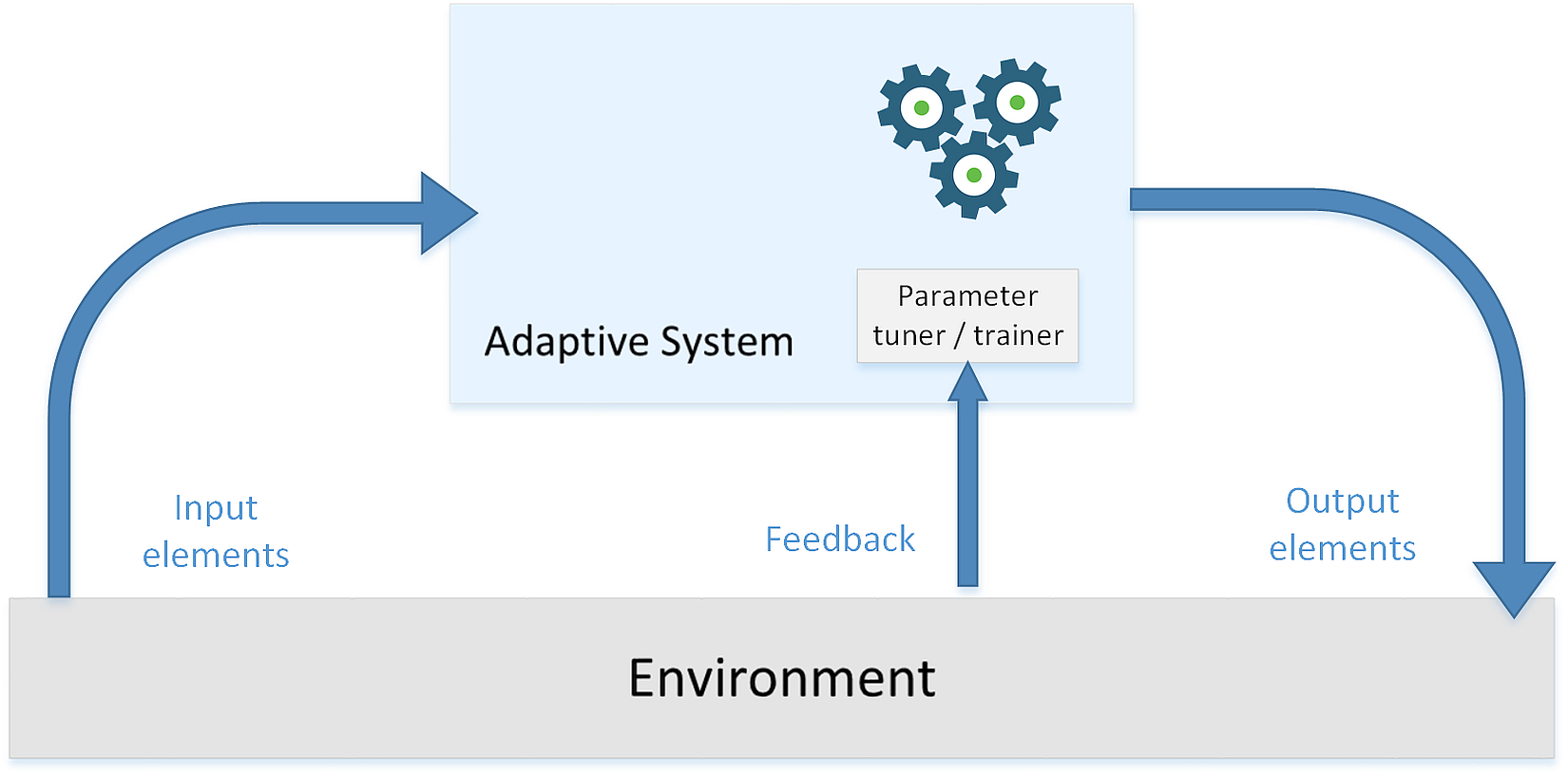

Here's a schematic representation of an adaptive system:这是一个自适应系统的示意图:

Here's a schematic representation of an adaptive system:这是一个自适应系统的示意图:

Such a system isn't based on static or permanent structures (model parameters and architectures) but rather on a continuous ability to adapt its behavior to external signals (datasets or real-time inputs) and, like a human being, to predict the future using uncertain and fragmentary pieces of information.这样的系统不是基于静态或永久性的结构(模型参数和体系结构),而是基于对外部信号(数据集或实时输入)的不断适应能力,像人类一样,利用不确定和零碎的信息预测未来。

Such a system isn't based on static or permanent structures (model parameters and architectures) but rather on a continuous ability to adapt its behavior to external signals (datasets or real-time inputs) and, like a human being, to predict the future using uncertain and fragmentary pieces of information.这样的系统不是基于静态或永久性的结构(模型参数和体系结构),而是基于对外部信号(数据集或实时输入)的不断适应能力,像人类一样,利用不确定和零碎的信息预测未来。

1.2 Only learning matters只有学习才重要

What does learning exactly mean? Simply, we can say that learning is the ability to change according to external stimuli and remembering most of all previous experiences. So machine learning is an engineering approach that gives maximum importance to every technique that increases or improves the propensity for changing adaptively.学习到底意味着什么?简单地说,我们可以说学习是一种根据外界刺激而改变的能力,它能记住所有以前的经历。因此,机器学习是一种工程方法,它最大限度地重视每一项技术,以增加或改善适应变化的倾向。

1.2.1 Supervised learning监督学习

A supervised scenario is characterized by the concept of a teacher or supervisor, whose main task is to provide the agent with a precise measure of its error (directly comparable with output values).监督场景的特点基于老师或监督者,老师或监督者的主要任务是为代理提供其错误的精确度量(直接与输出值比较)。

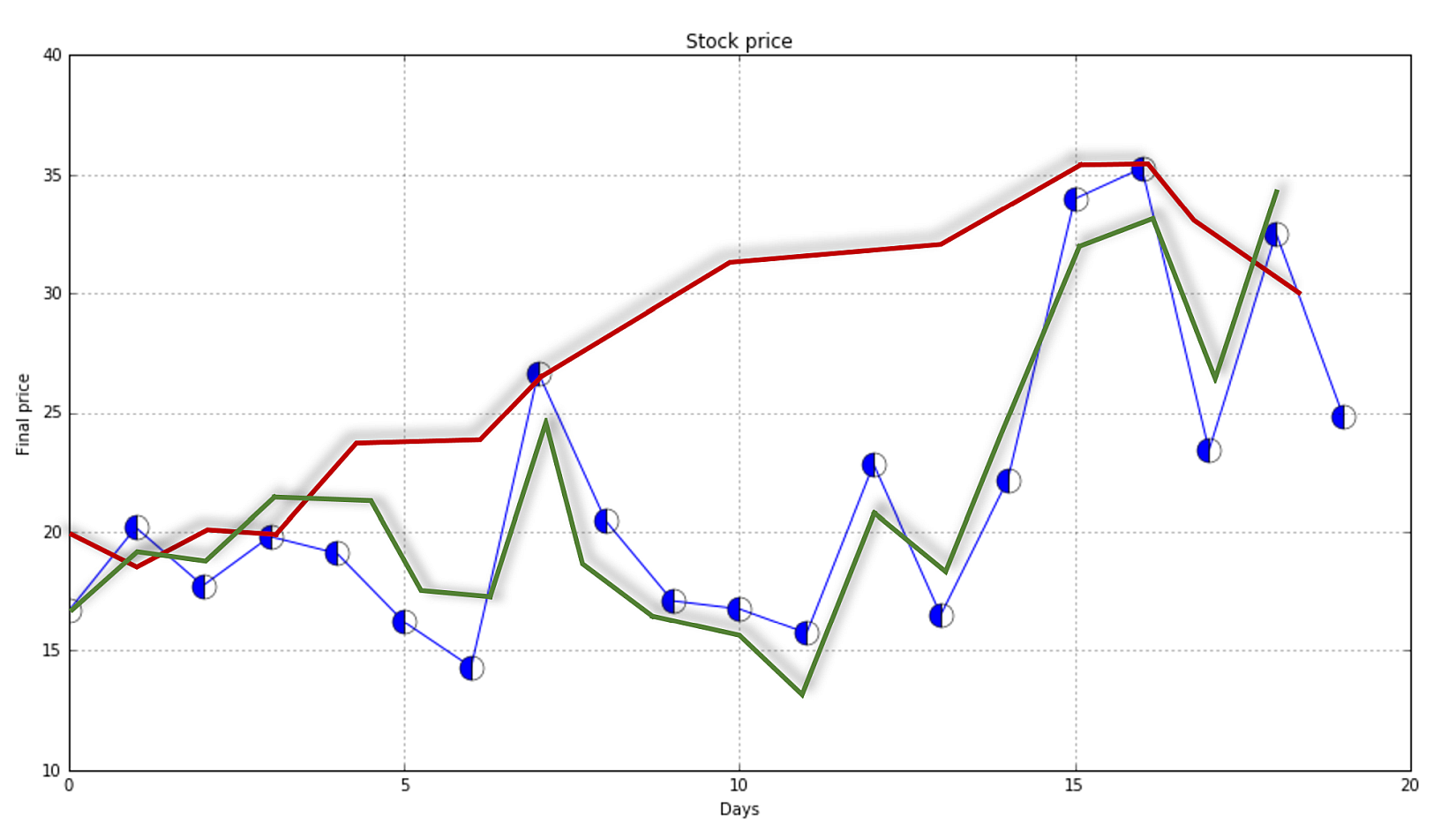

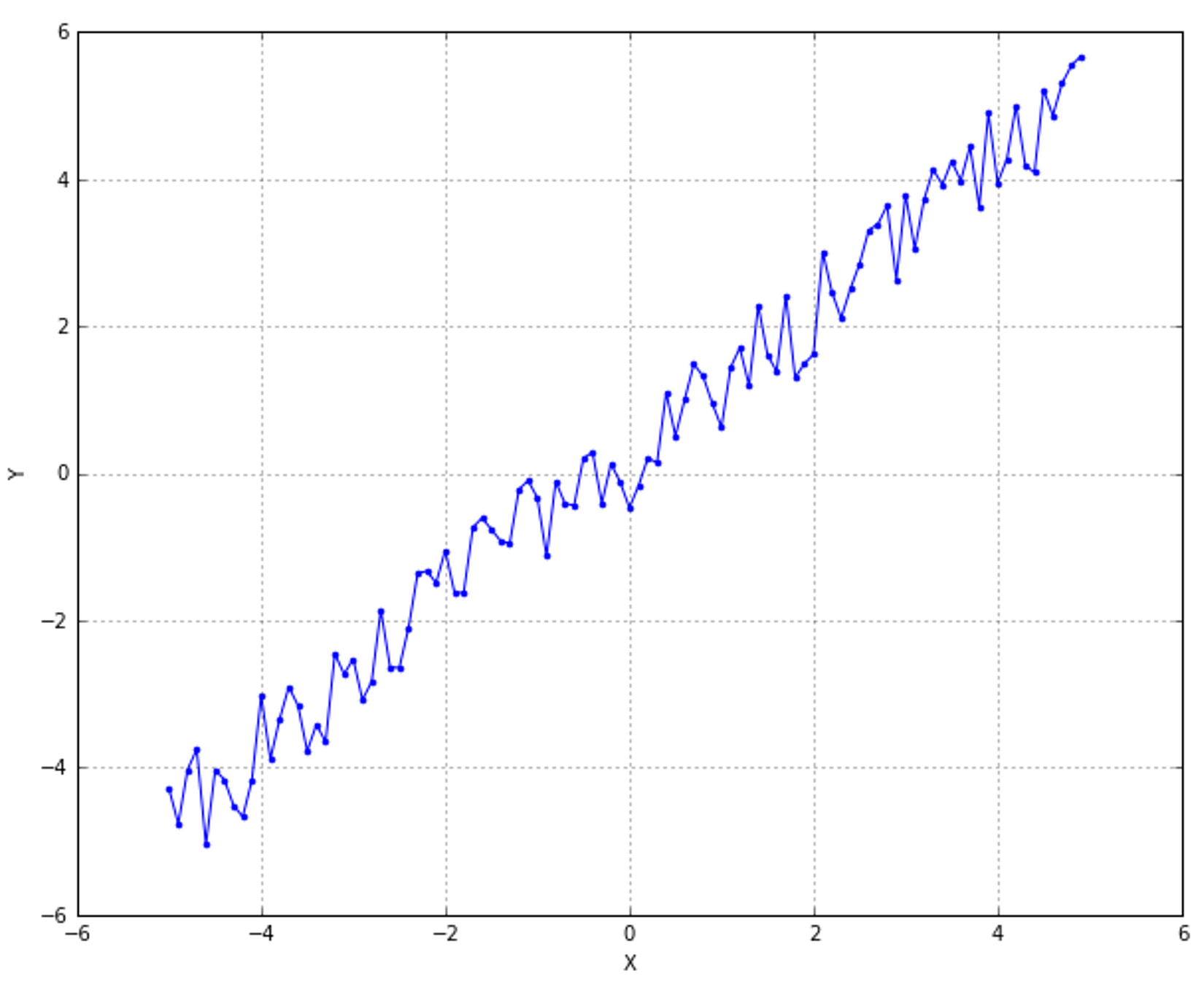

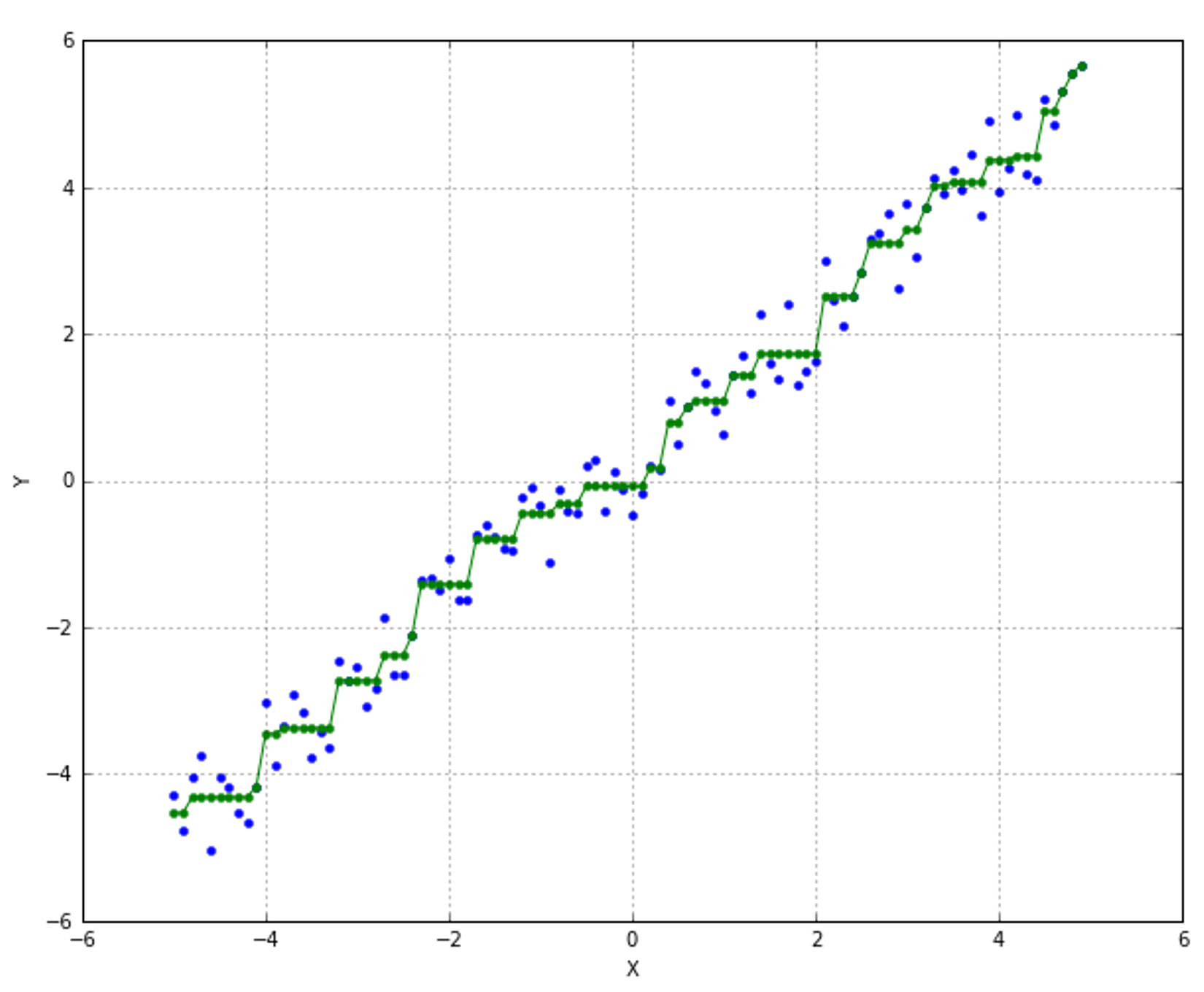

In the following figure, a few training points are marked with circles and the thin blue line represents a perfect generalization (in this case, the connection is a simple segment):在下图中,一些训练点用圆圈标记,蓝色的细线代表完美的泛化(在本例中,连接是一个简单的分段):

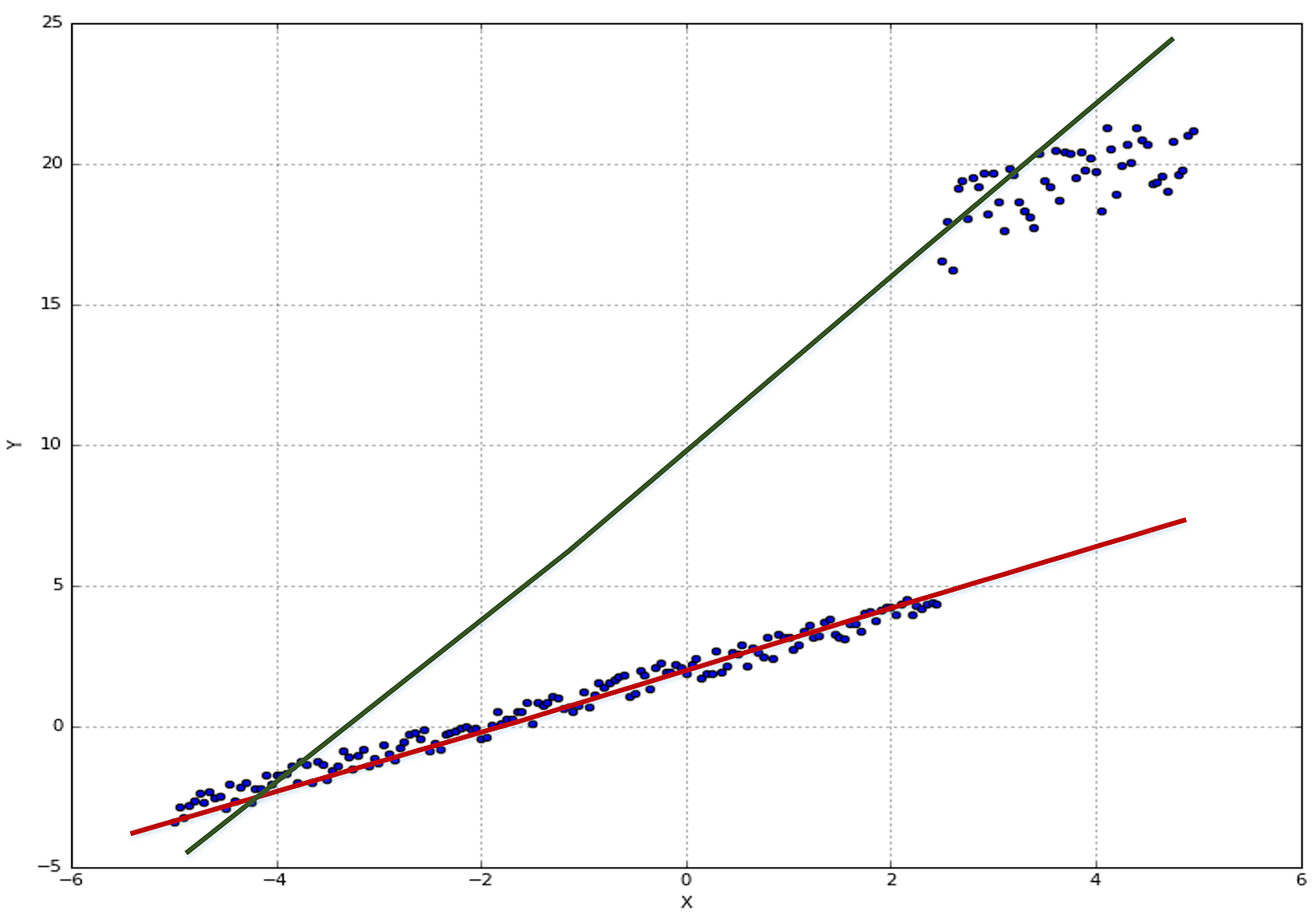

Two different models are trained with the same datasets (corresponding to the two larger lines). The former is unacceptable because it cannot generalize and capture the fastest dynamics (in terms of frequency), while the latter seems a very good compromise between the original trend and a residual ability to generalize correctly in a predictive analysis.相同数据集训练得到两个不同的模型(对应两条更粗的线条)。红线是不可接受的,因为它不能概括和捕捉最快的动态(就频率而言),而绿线似乎是原始趋势和在预测分析中正确概括的剩余能力之间的一个很好的折衷。

Two different models are trained with the same datasets (corresponding to the two larger lines). The former is unacceptable because it cannot generalize and capture the fastest dynamics (in terms of frequency), while the latter seems a very good compromise between the original trend and a residual ability to generalize correctly in a predictive analysis.相同数据集训练得到两个不同的模型(对应两条更粗的线条)。红线是不可接受的,因为它不能概括和捕捉最快的动态(就频率而言),而绿线似乎是原始趋势和在预测分析中正确概括的剩余能力之间的一个很好的折衷。

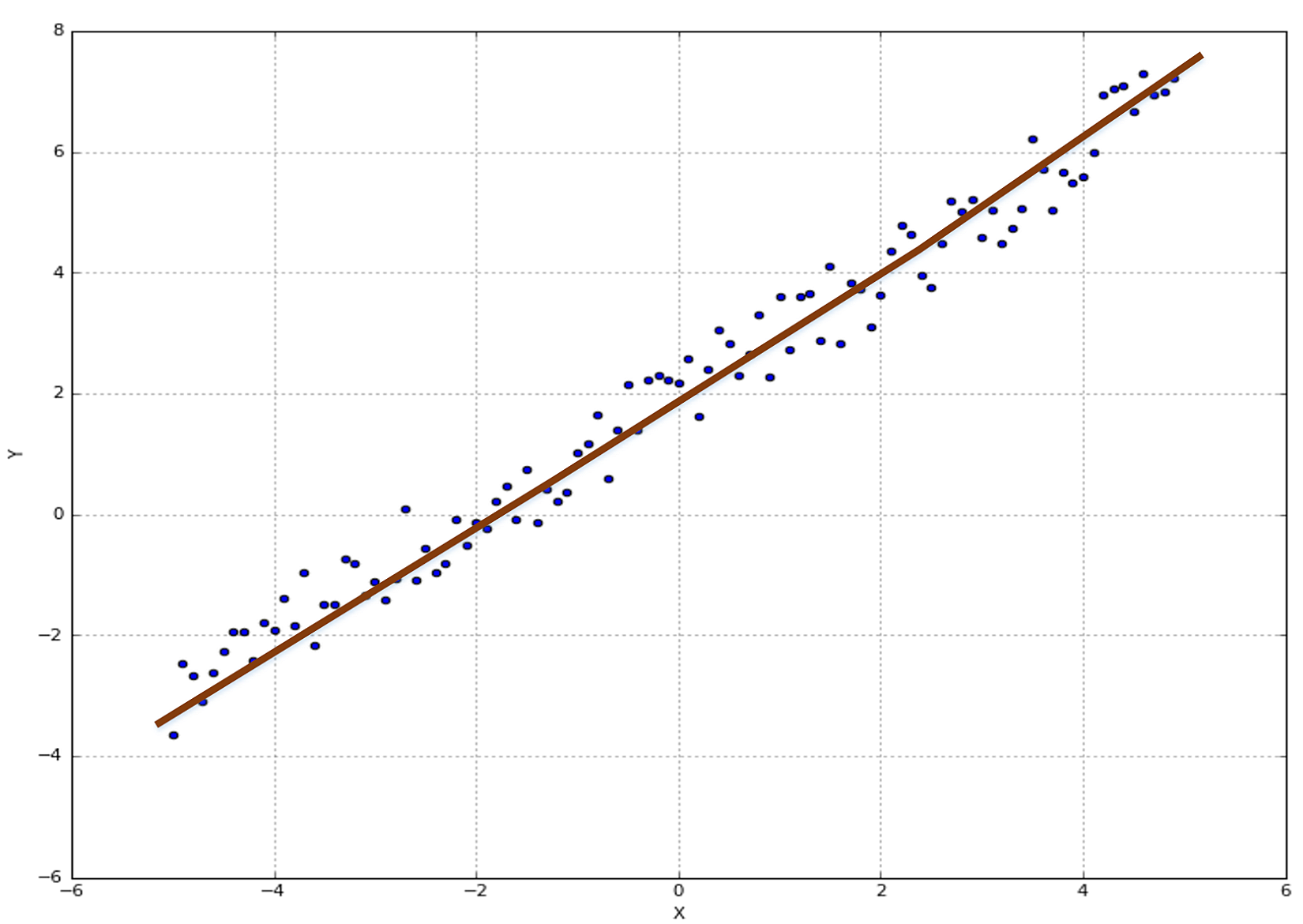

Formally, the previous example is called regression because it's based on continuous output values. Instead, if there is only a discrete number of possible outcomes (called categories), the process becomes a classification.前一个示例正式称为回归,因为它是基于连续输出值的。相反,如果输出值是离散数字(也叫分类),这个过程变成了一个分类。

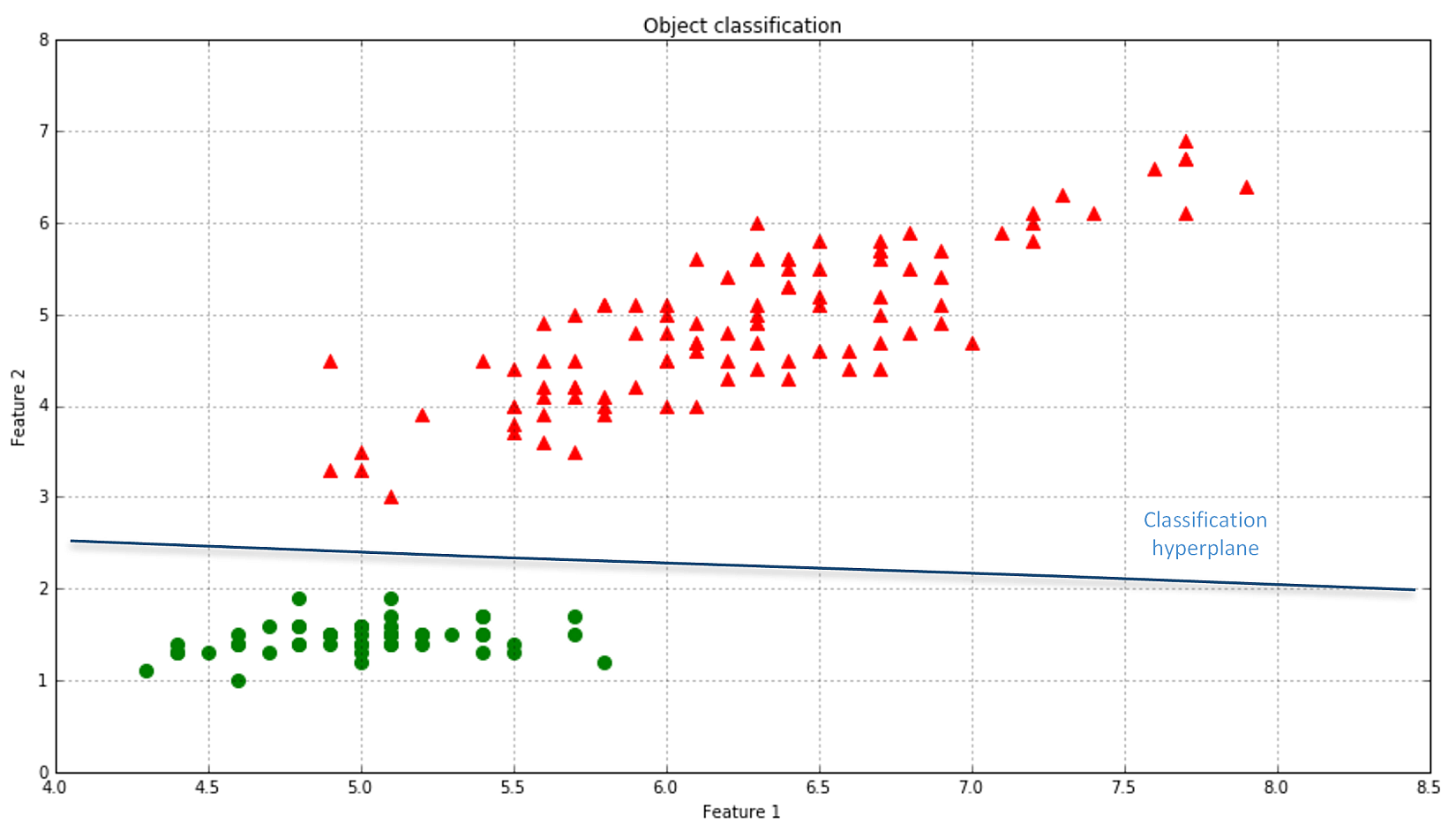

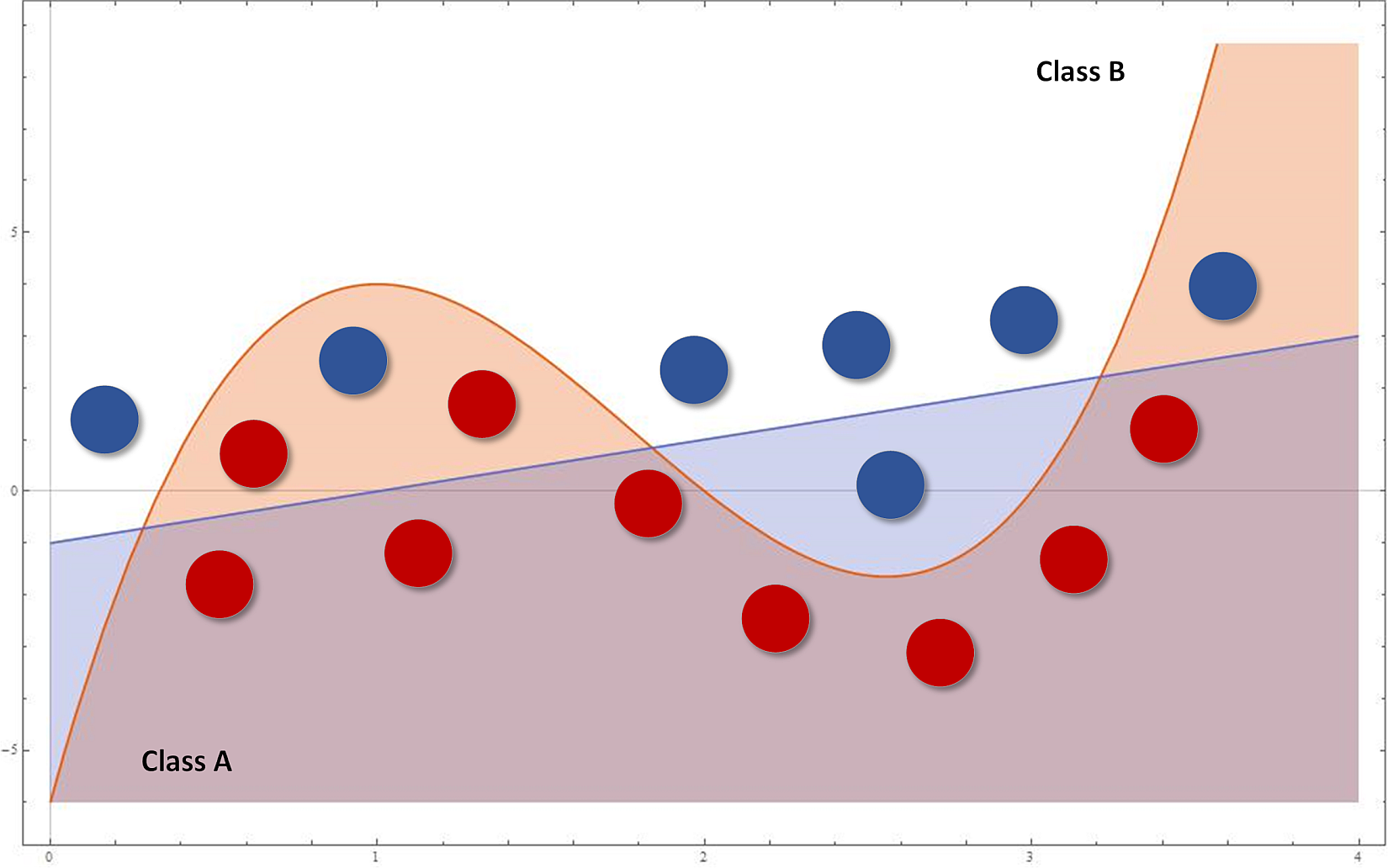

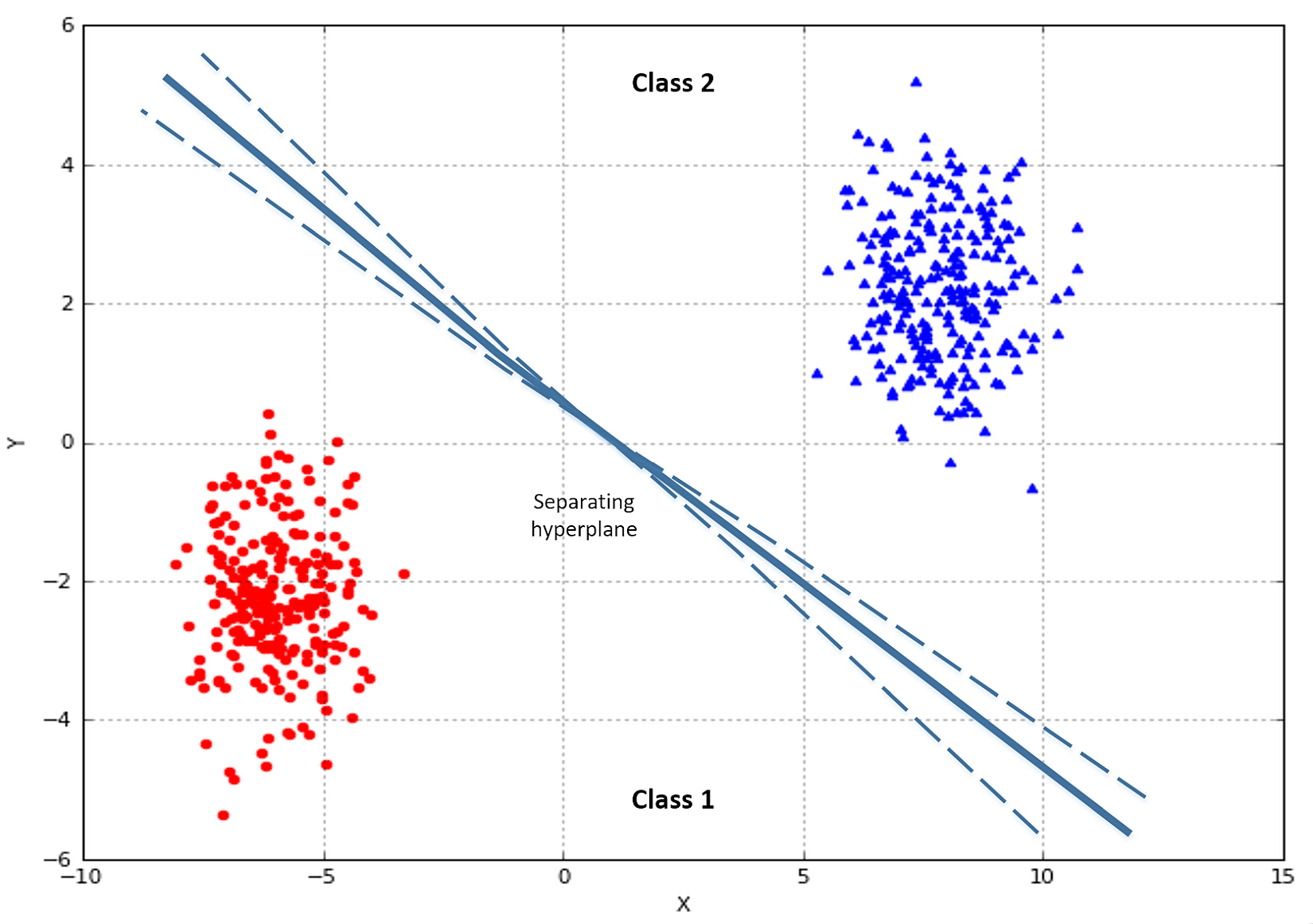

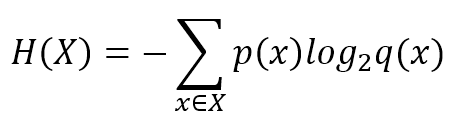

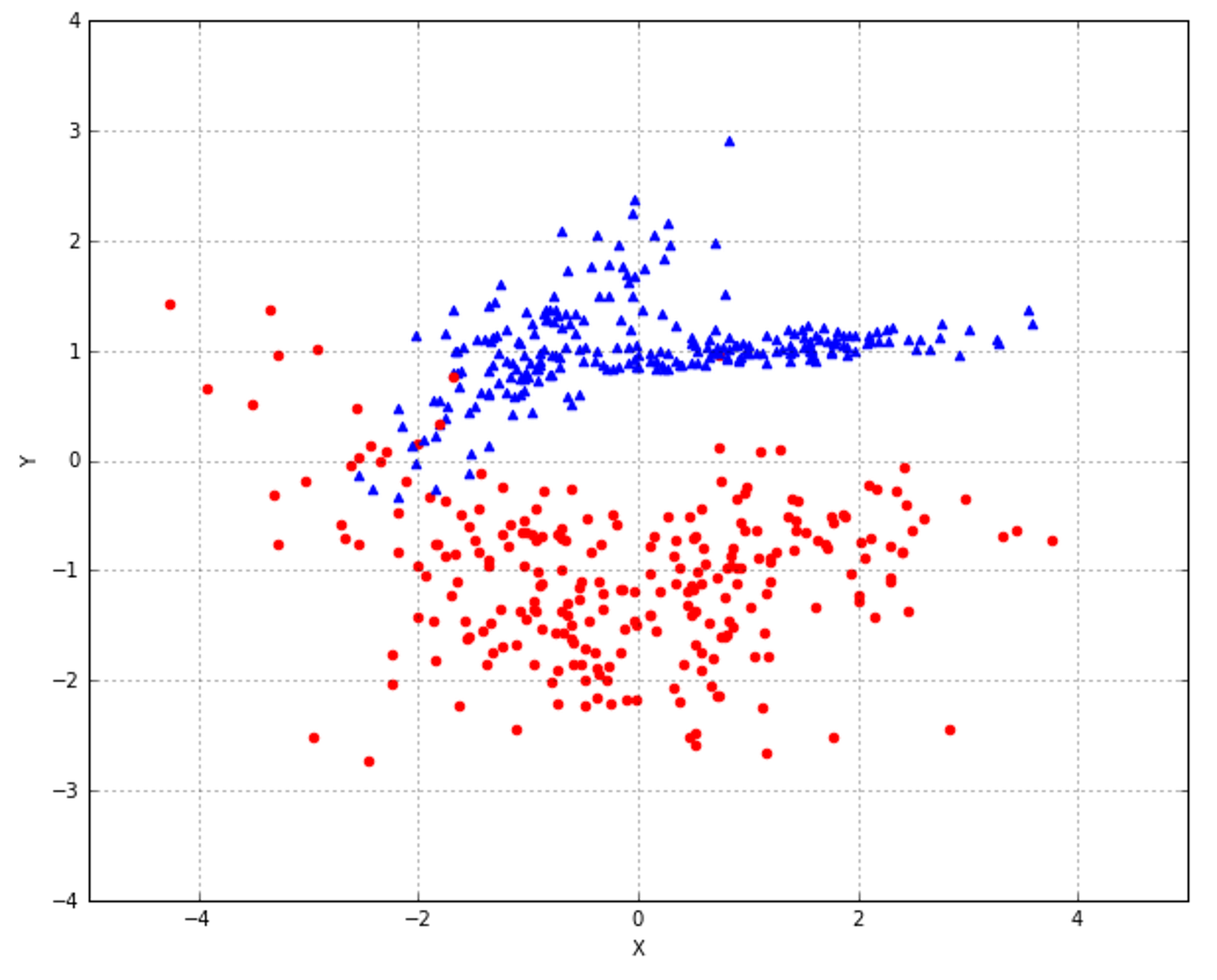

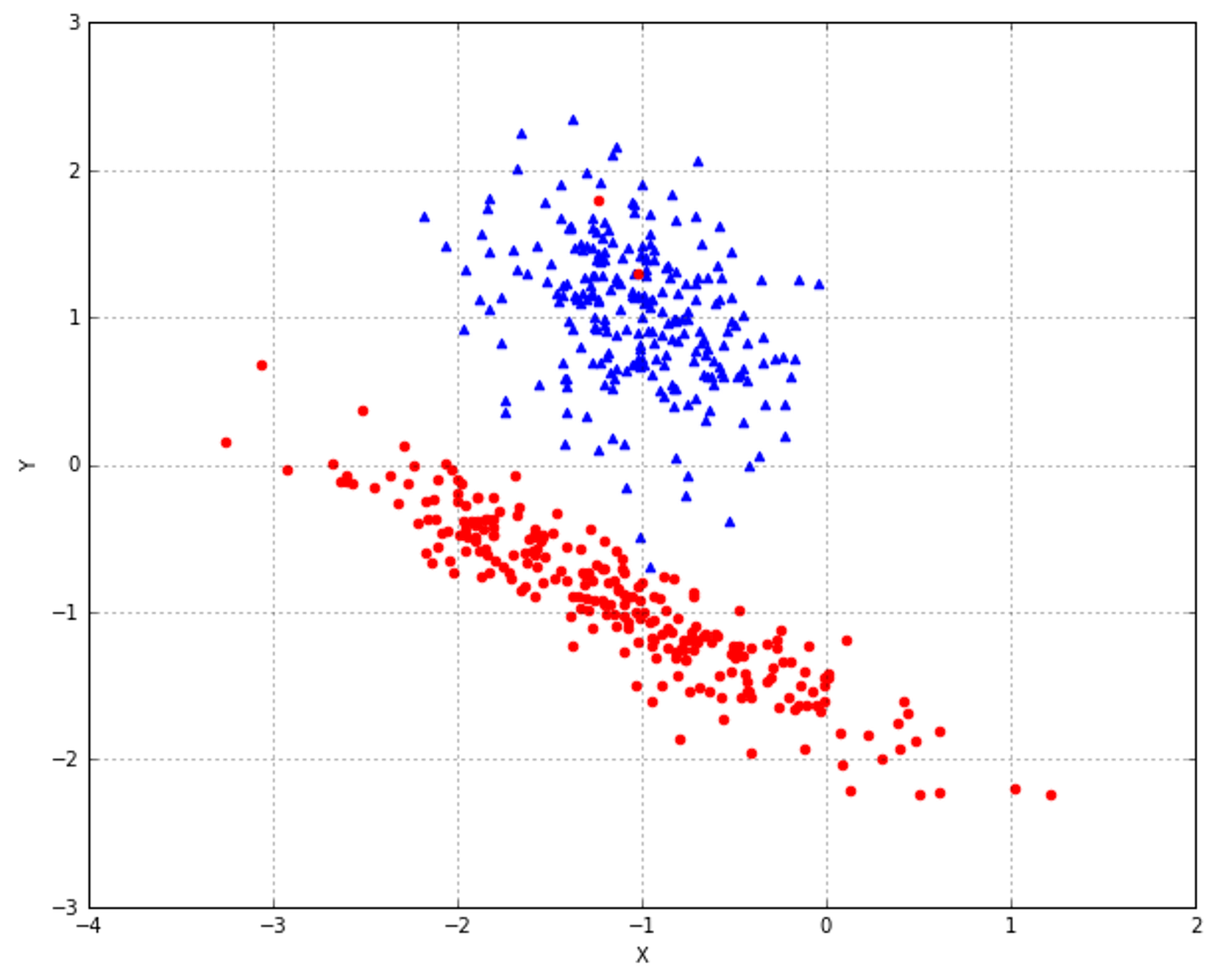

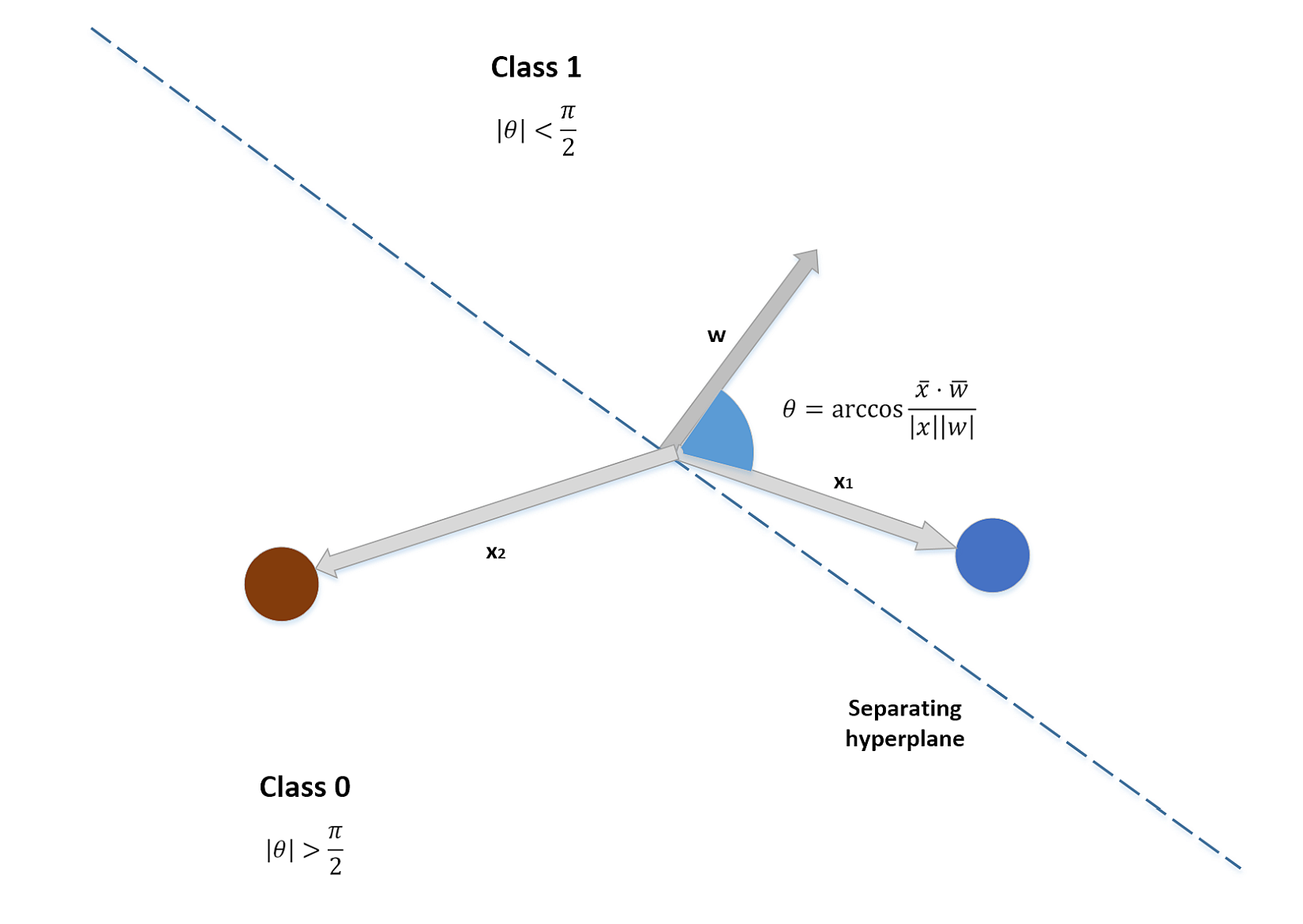

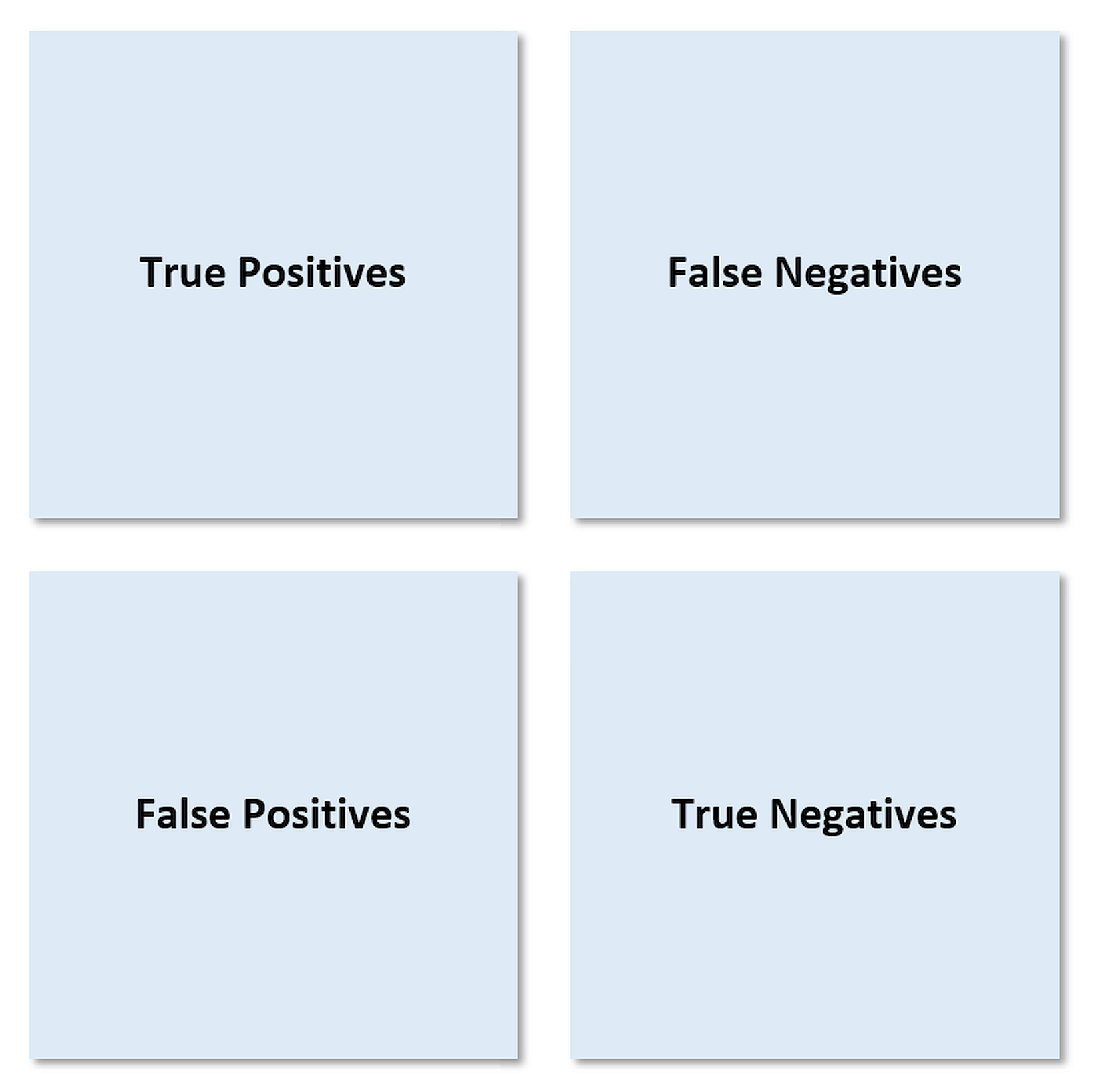

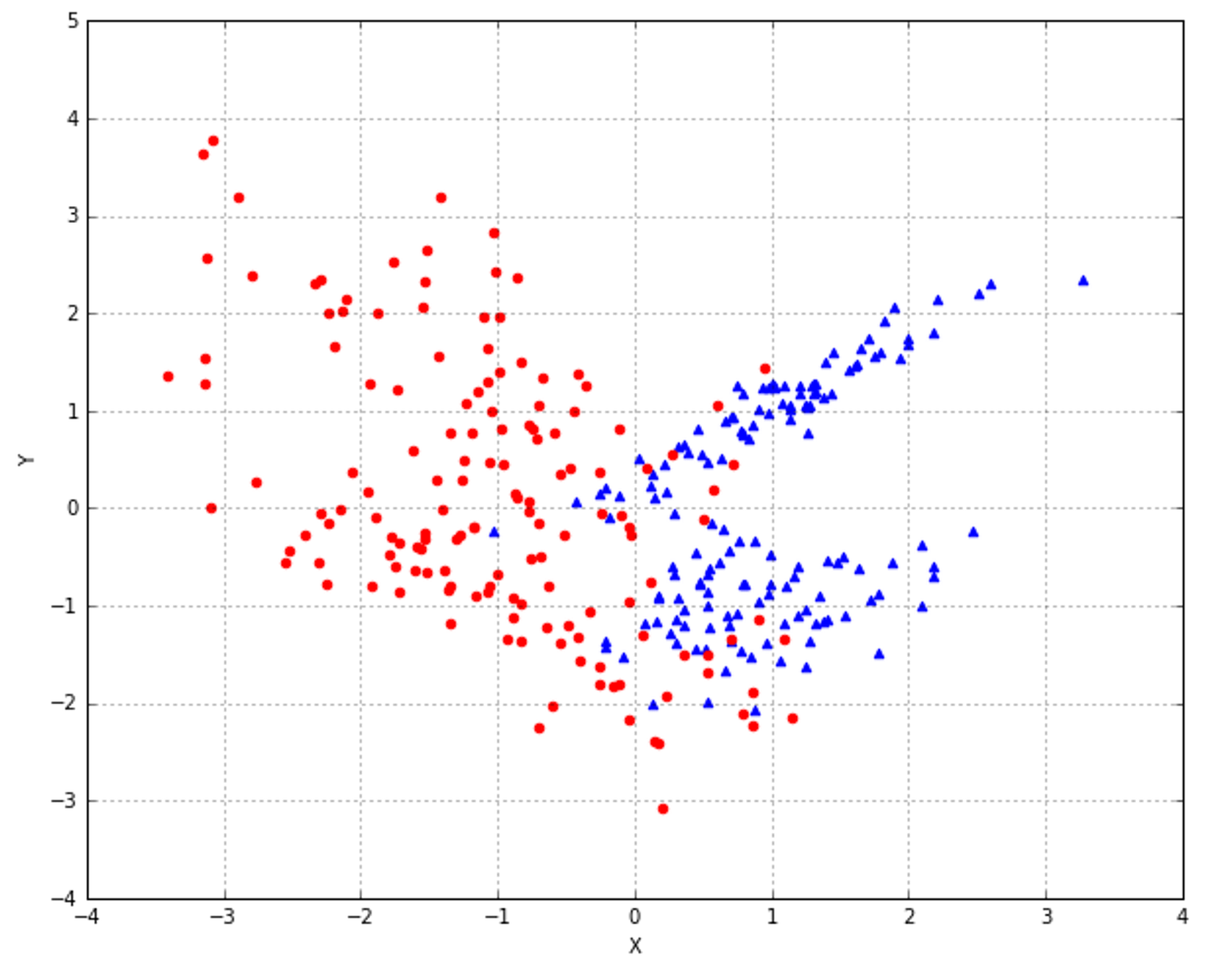

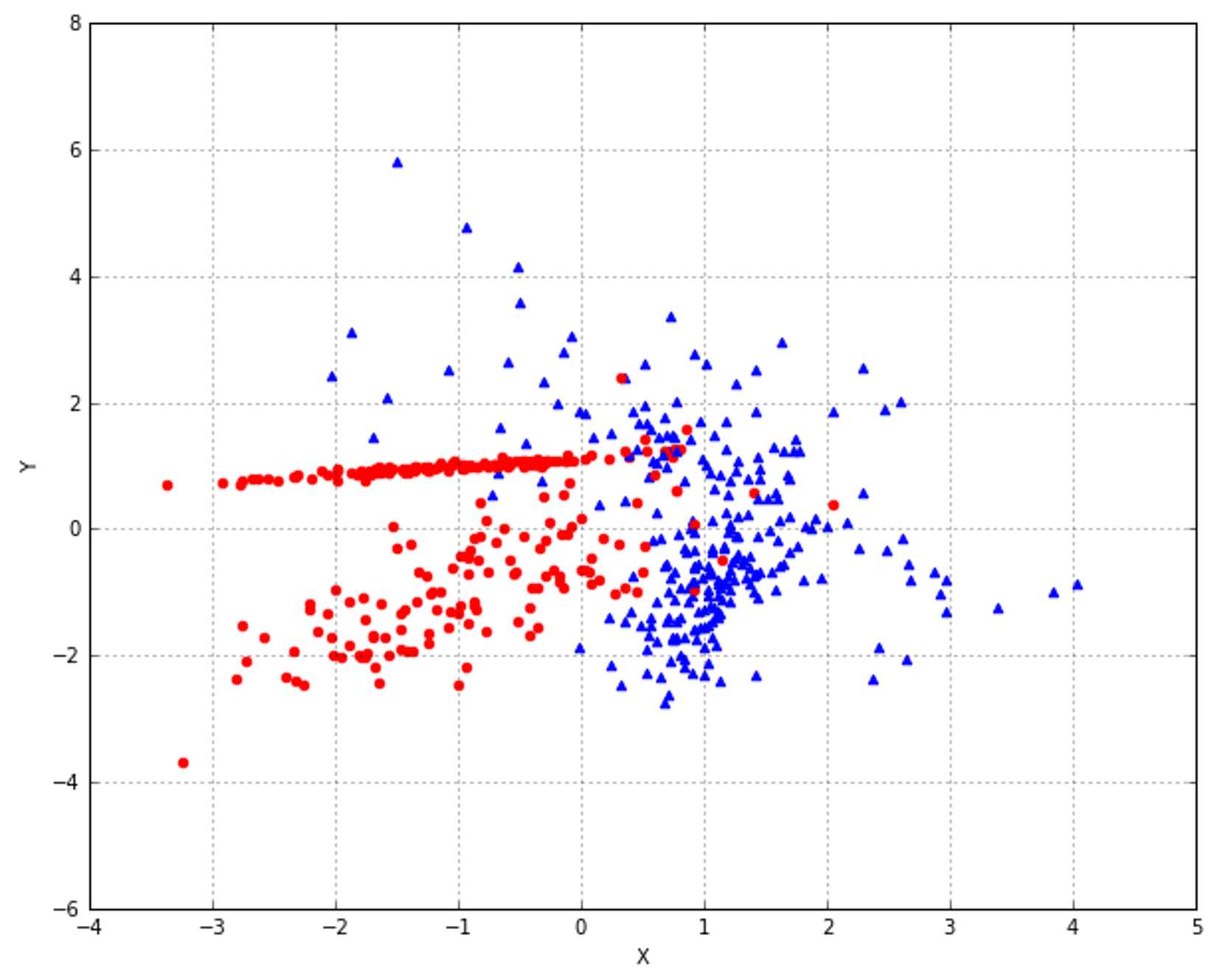

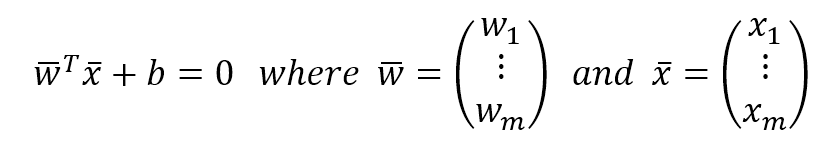

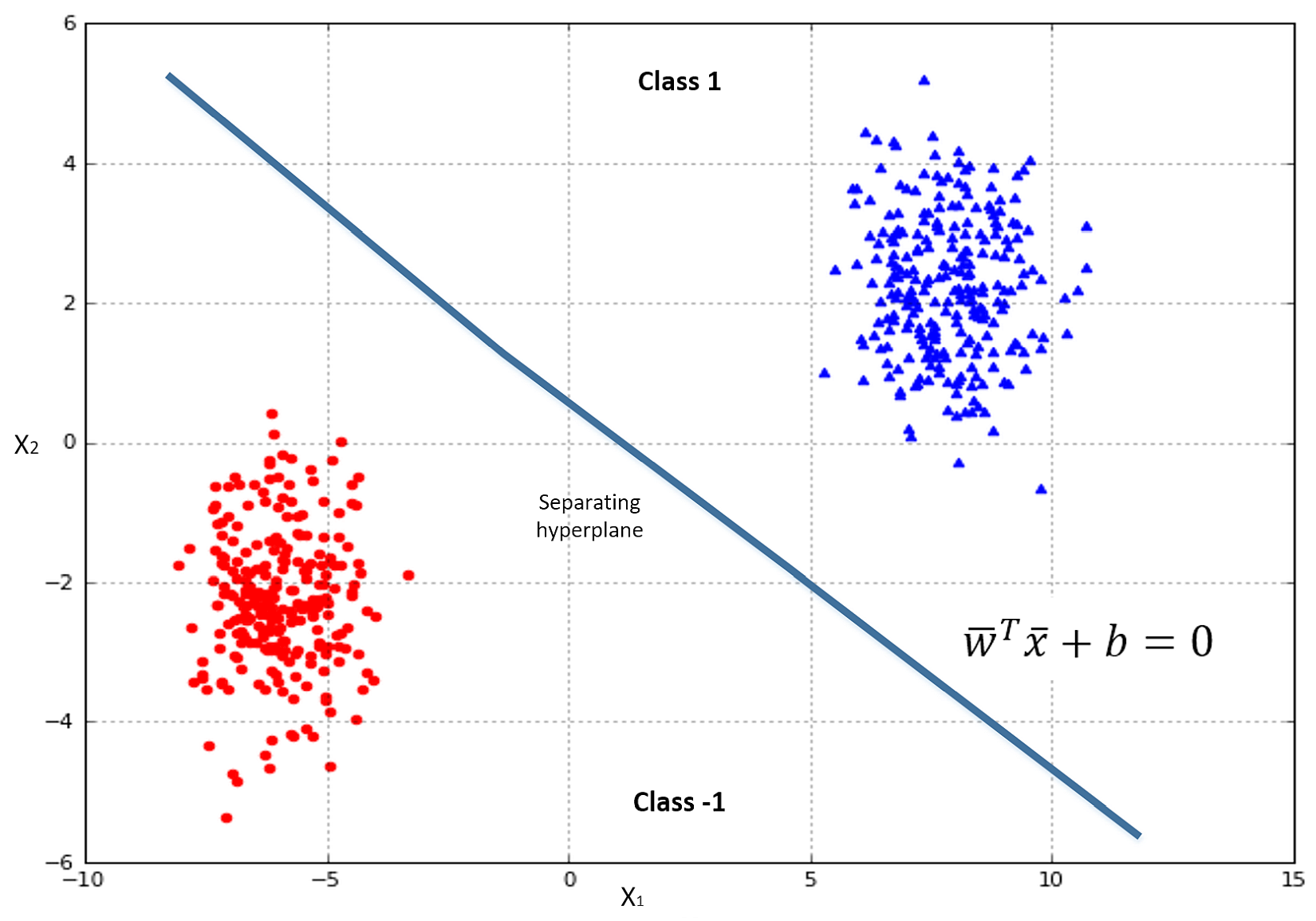

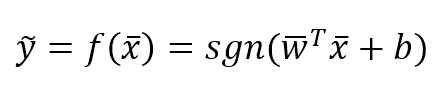

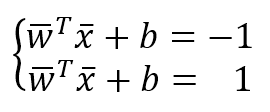

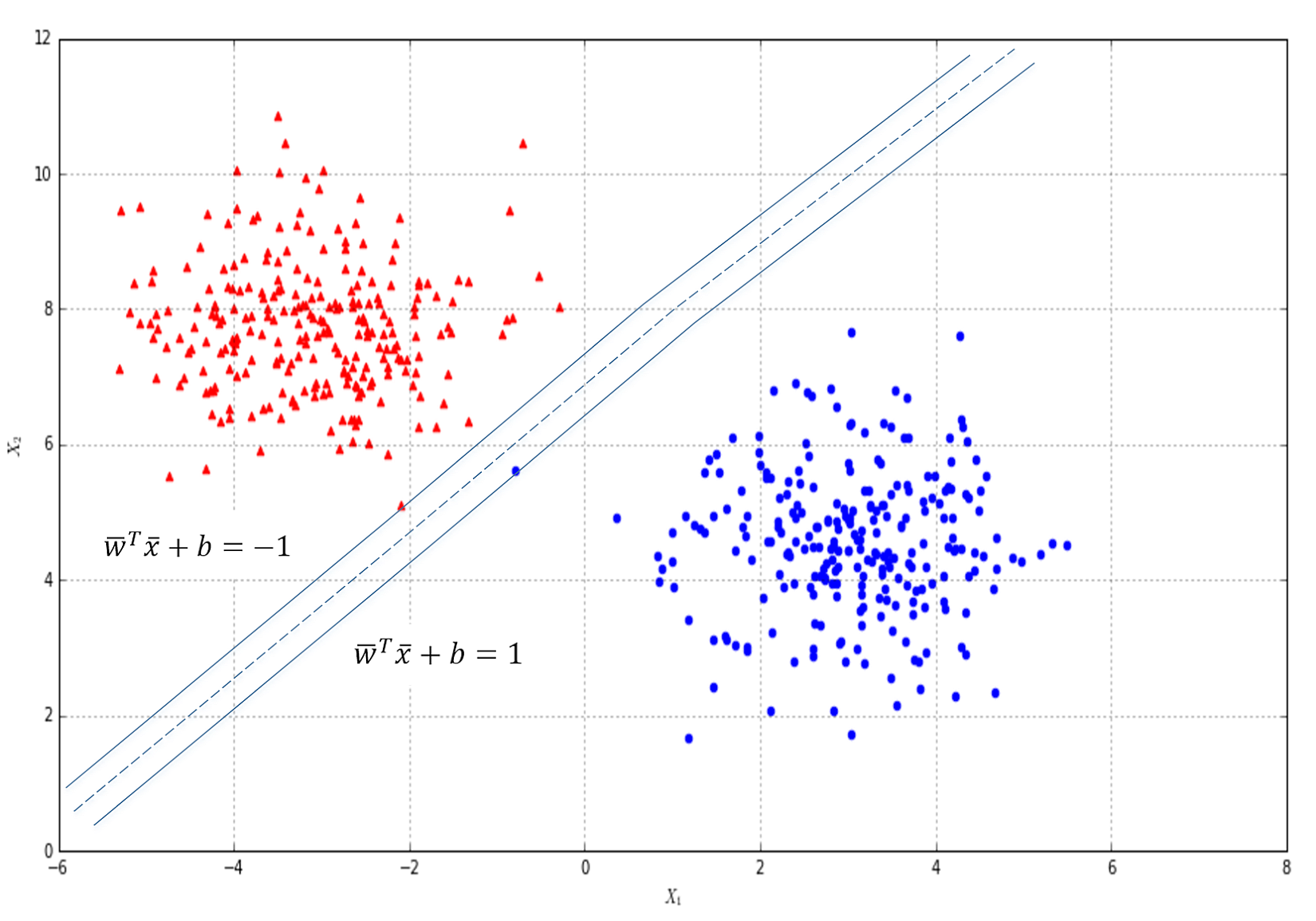

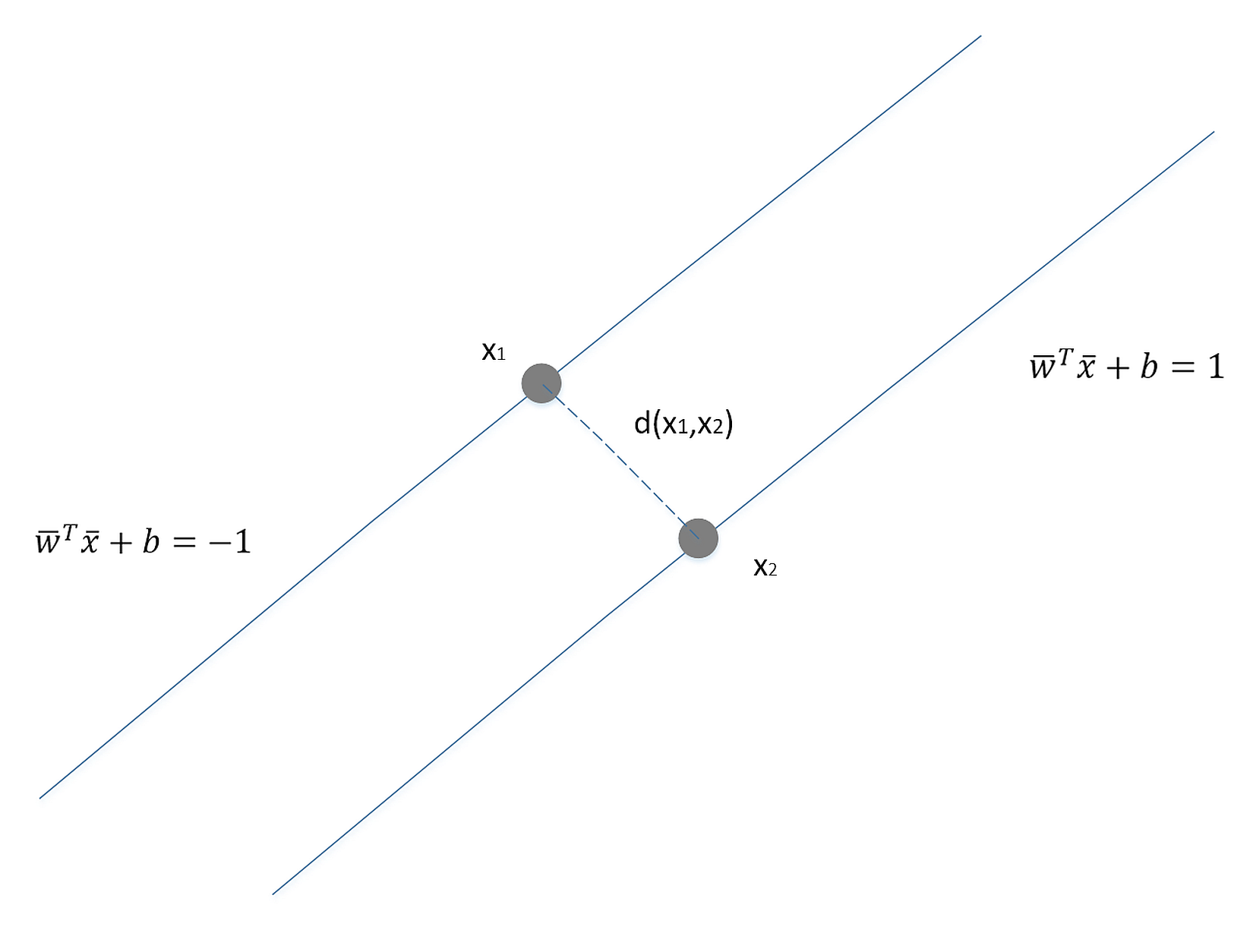

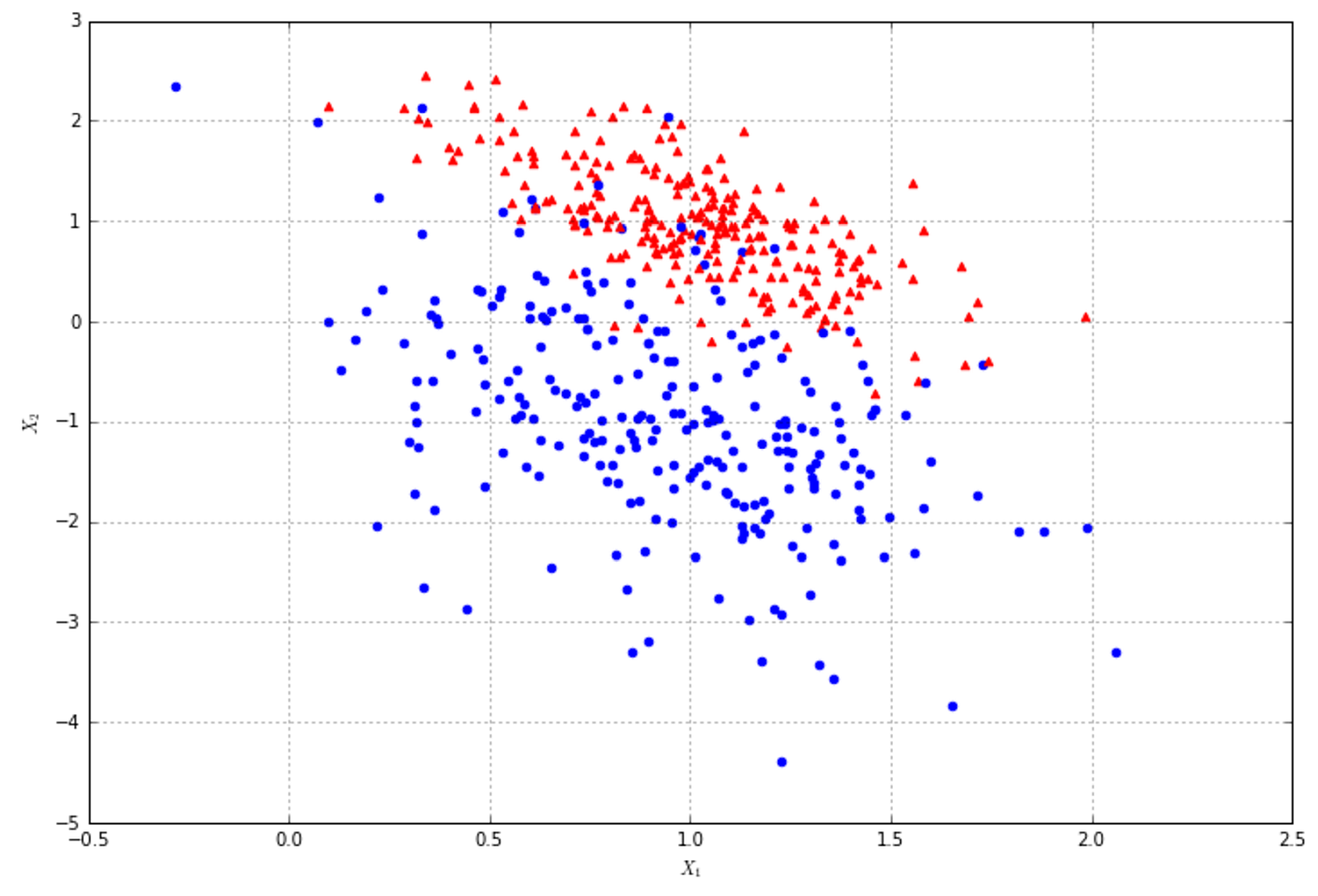

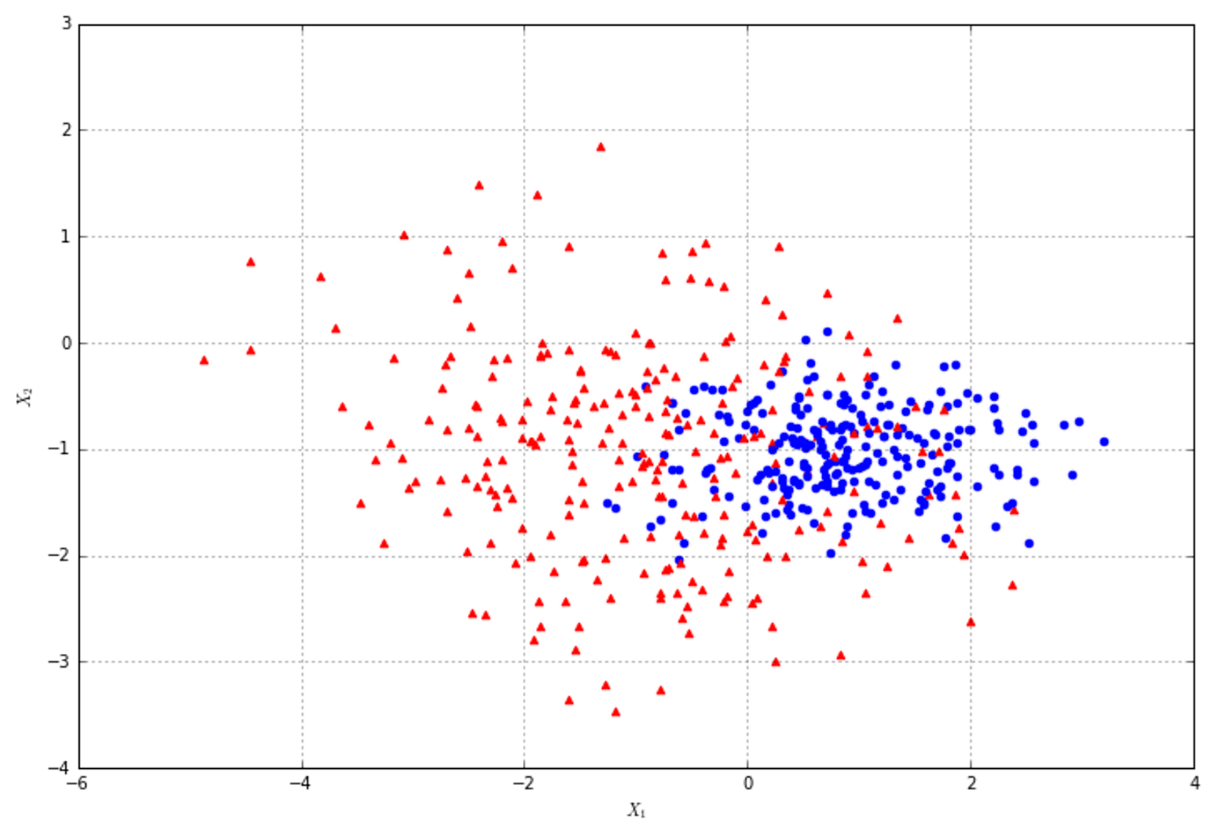

In the following figure, there's an example of classification of elements with two features. The majority of algorithms try to find the best separating hyperplane (in this case, it's a linear problem) by imposing different conditions. However, the goal is always the same: reducing the number of misclassifications and increasing the noise-robustness.在下图中,是具有两个特征的元素分类示例。大多数算法都试图通过施加不同的条件来寻找最佳的分离超平面(在这种情况下,这是一个线性问题)。但是,目标总是一样的:减少错误分类的数量,增加噪声-鲁棒性。

Common supervised learning applications include:常见的监督学习应用包括:

1. Predictive analysis based on regression or categorical classification基于回归或分类的预测分析

2. Spam detection垃圾邮件检测

3. Pattern detection模式检测

4. Natural Language Processing自然语言处理

6. Sentiment analysis情感分析

7. Automatic image classification自动图像分类

8. Automatic sequence processing (for example, music or speech)自动序列处理(例如,音乐或语音)

Common supervised learning applications include:常见的监督学习应用包括:

1. Predictive analysis based on regression or categorical classification基于回归或分类的预测分析

2. Spam detection垃圾邮件检测

3. Pattern detection模式检测

4. Natural Language Processing自然语言处理

6. Sentiment analysis情感分析

7. Automatic image classification自动图像分类

8. Automatic sequence processing (for example, music or speech)自动序列处理(例如,音乐或语音)

1.2.2 Unsupervised learning非监督学习

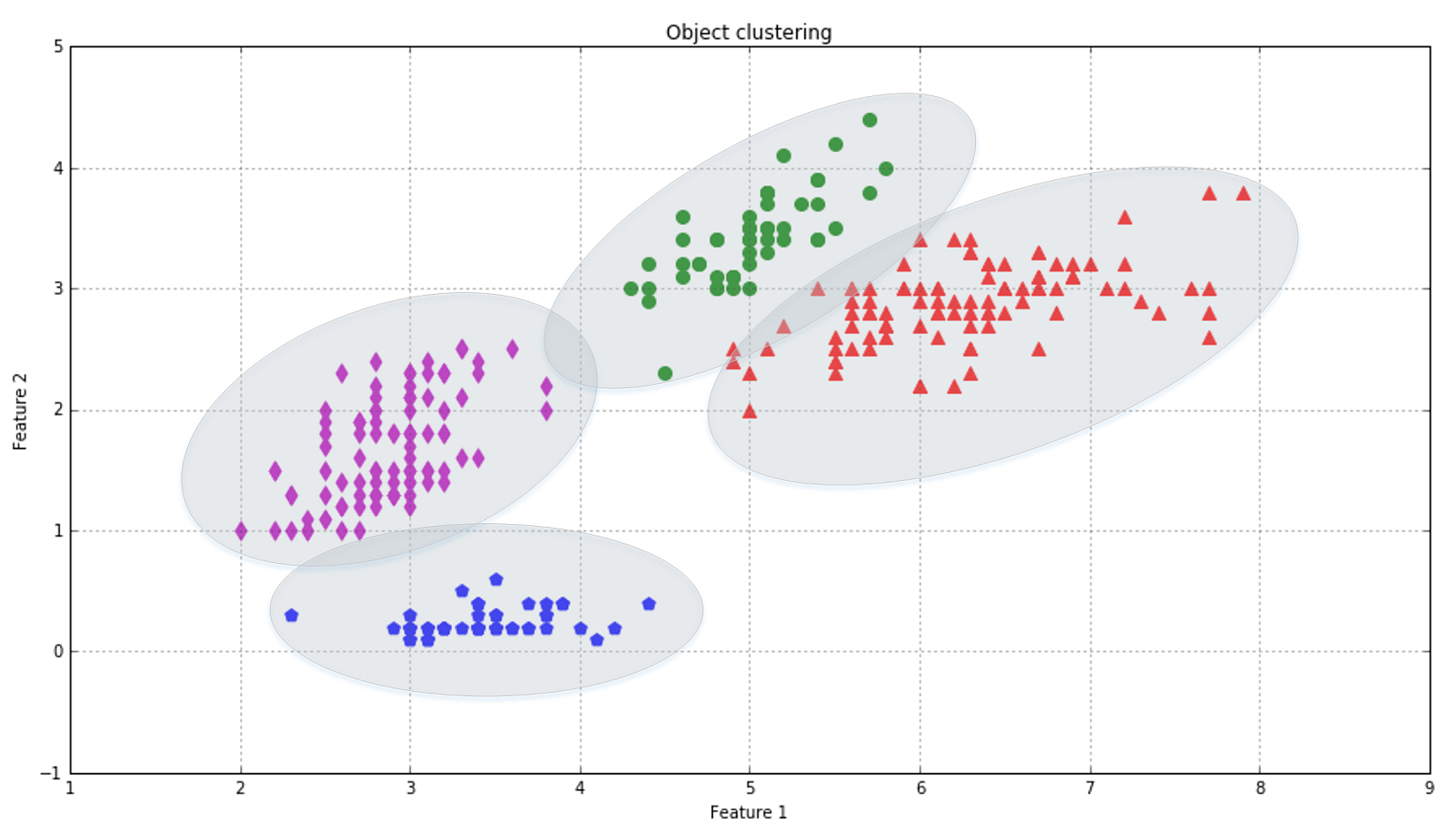

This approach is based on the absence of any supervisor and therefore of absolute error measures; it's useful when it's necessary to learn how a set of elements can be grouped (clustered) according to their similarity (or distance measure).这种方法基于没有任何监督者,因此没有绝对误差测量;当需要了解如何根据一组元素的相似性(或距离度量)对它们进行分组(集群)时,这是很有用的。

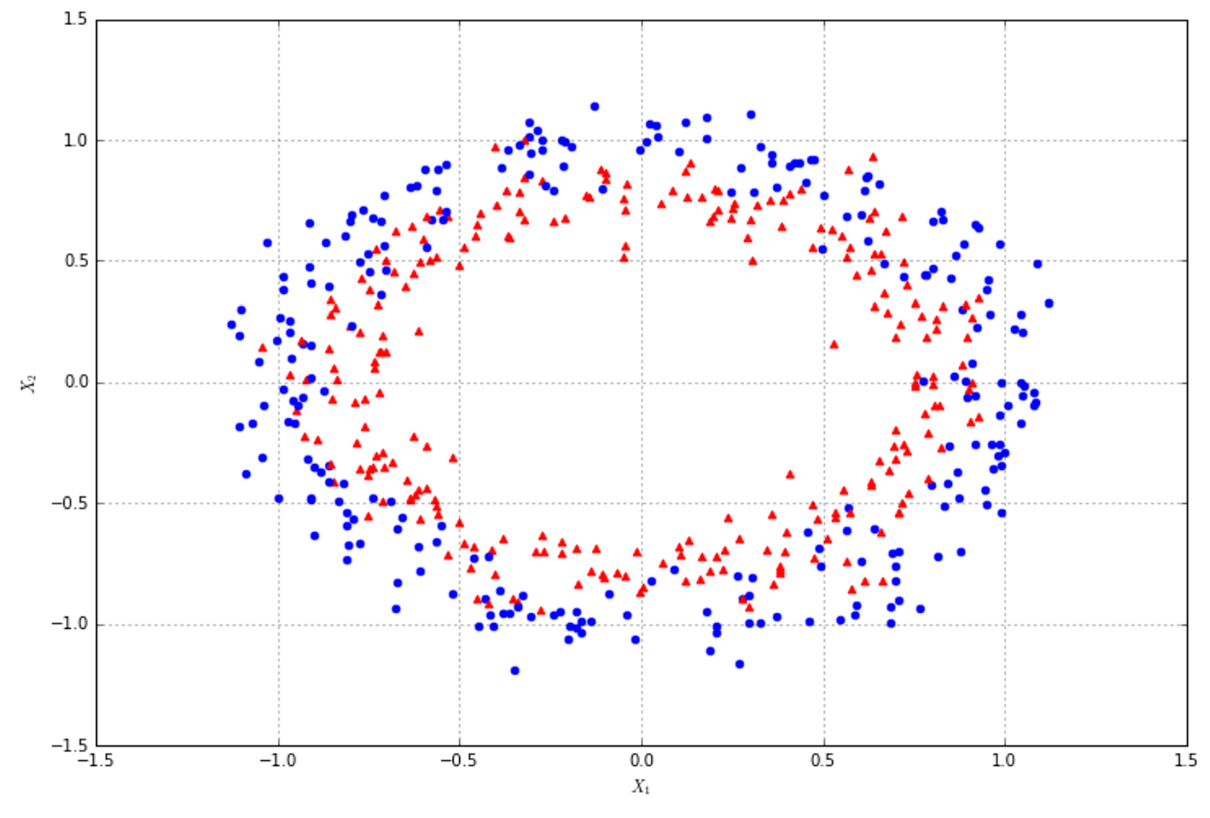

In the next figure, each ellipse represents a cluster and all the points inside its area can be labeled in the same way. There are also boundary points (such as the triangles overlapping the circle area) that need a specific criterion (normally a trade-off distance measure) to determine the corresponding cluster. Just as for classification with ambiguities (P and malformed R), a good clustering approach should consider the presence of outliers and treat them so as to increase both the internal coherence (visually, this means picking a subdivision that maximizes the local density) and the separation among clusters.在下图中,每个椭圆代表一个集群,其区域内的所有点都可以以相同的方式标记。还有一些边界点(例如重叠圆形区域的三角形)需要一个特定的标准(通常是权衡距离度量)来确定相应的集群。就像模糊分类(P和畸形的R)一样,一个好的聚类方法应该考虑离群值的存在并处理它们,以增加内部一致性(视觉上,这意味着选择一个使局部密度最大化的细分)和集群间的分离度。

Commons unsupervised applications include:常见的非监督学习包括: 1. Object segmentation (for example, users, products, movies, songs, and so on)对象分割(例如,用户、产品、电影、歌曲等) 2. Similarity detection相似度检测 3. Automatic labeling自动标记

1.2.3 Reinforcement learning强化学习

Even if there are no actual supervisors, reinforcement learning is also based on feedback provided by the environment. However, in this case, the information is more qualitative and doesn't help the agent in determining a precise measure of its error. In reinforcement learning, this feedback is usually called reward (sometimes, a negative one is defined as a penalty) and it's useful to understand whether a certain action performed in a state is positive or not.即使没有真正的监督者,强化学习也是基于环境提供的反馈。然而,在这种情况下,信息更加定性,并不能帮助代理确定其错误的精确度量。在强化学习中,这种反馈通常被称为奖励(有时,一个消极的反馈被定义为惩罚),理解在一种状态下执行的某个行为是否为积极的是有用的。

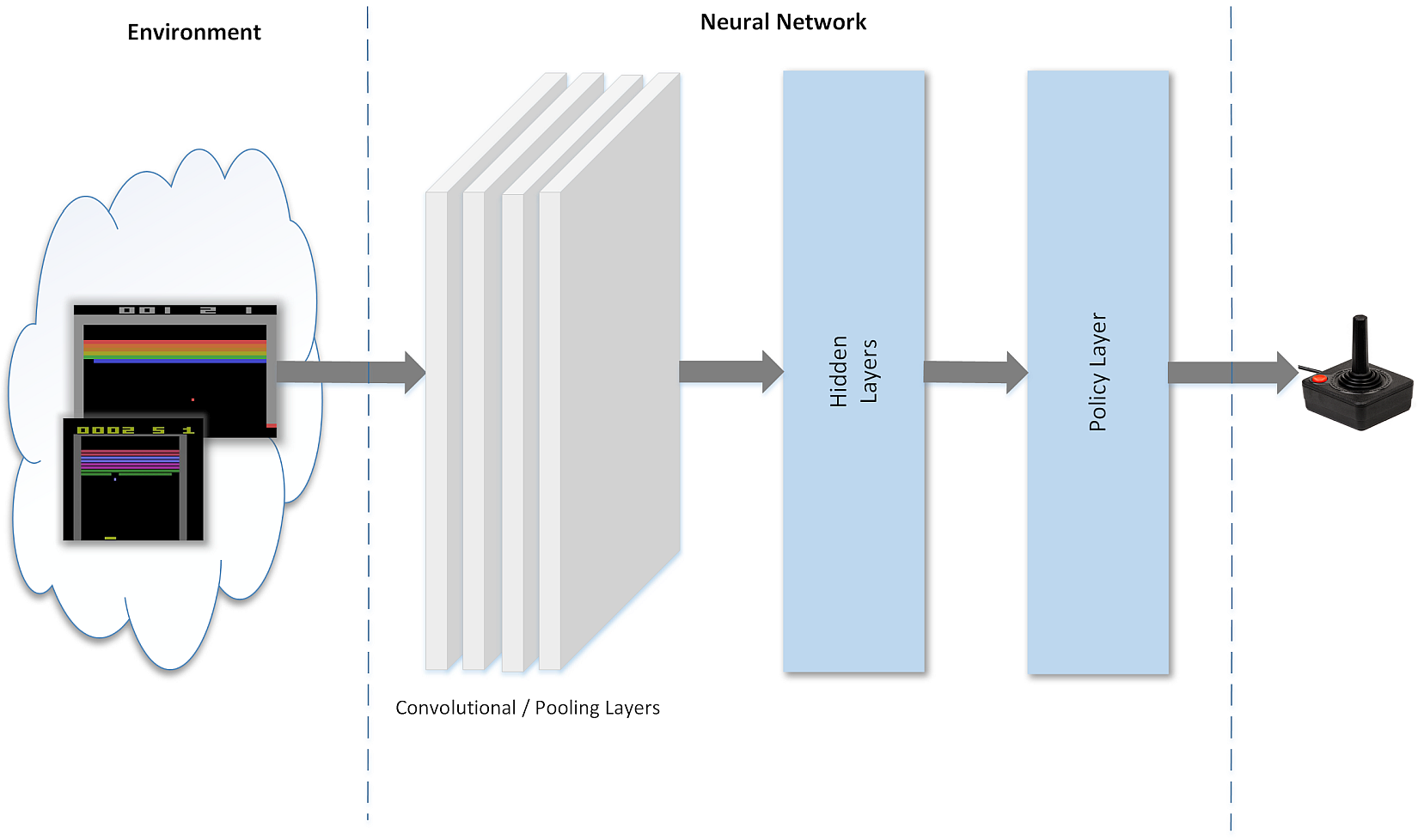

In the following figure, there's a schematic representation of a deep neural network trained to play a famous Atari game. As input, there are one or more subsequent screenshots (this can often be enough to capture the temporal dynamics as well). They are processed using different layers (discussed briefly later) to produce an output that represents the policy for a specific state transition. After applying this policy, the game produces a feedback (as a reward-penalty), and this result is used to refine the output until it becomes stable (so the states are correctly recognized and the suggested action is always the best one) and the total reward overcomes a predefined threshold.在下面的图中,有一个训练用来玩著名的雅达利游戏的深度神经网络的示意图。作为输入,有一个或多个后续屏幕截图(这通常也足以捕获当时动态)。它们使用不同的层(稍后将简要讨论)进行处理,以生成表示特定状态转换策略的输出。在应用这个策略之后,游戏会产生一个反馈(作为奖励-惩罚),这个结果被用来完善输出,直到输出变得稳定(这样状态就被正确地识别了,并且建议的动作总是最优的),并且总回报超过了预定义的阈值。

我的理解:

| 监督、非监督、强化学习 | 输入 | 原始输出 | 学习输出 | 度量标准 |

|---|---|---|---|---|

| 监督学习 | 必须有输入 | 必须有数据给出的原始输出 | 必定有预测输出 | 学习输出和原始数据输出之间的差别是客观的,但是度量标准则不是绝对的,比如线性回归可采用MSE、RMSE等各种度量。当然,应根据任务需求选择最合适的度量。 |

| 非监督学习 | 必须有输入 | 不用有数据给出的原始输出 | 必定有预测输出 | 只有学习输出而无原始数据输出,需要根据原始输入、学习输出构造度量标准;度量标准相对于监督学习更主观。 |

| 强化学习 | 必须有输入(将以图1-6代表的强化学习来举例,必须知道游戏现状。) | 不用有数据给出的原始输出(并不限定下一步动作是怎么样的) | 必定有预测输出(给出最优的建议动作) | 同样只有学习输出而无原始数据输入,同样需要根据原始输入、学习输出构造度量标准(当然,也要适应规则等),注意度量标于非监督学习仍有区别,非监督学习给出的是对总体的分值,而强化学习给出的是对总体分值的修正(奖惩)。 |

1.3 Beyond machine learning - deep learning and bio-inspired adaptive systems超越机器学习-深度学习和生物启发自适应系统

In the last decade, many researchers started training bigger and bigger models, built with several different layers (that's why this approach is called deep learning), to solve new challenging problems.在过去的十年里,许多研究者开始训练越来越大的模型,这些模型含有多个不同的层(这就是为什么这种方法被称为深度学习),以解决新的有挑战性的问题。

The idea behind these techniques is to create algorithms that work like a brain and many important advancements in this field have been achieved thanks to the contribution of neurosciences and cognitive psychology. In particular, there's a growing interest in pattern recognition and associative memories whose structure and functioning are similar to what happens in the neocortex. Such an approach also allows simpler algorithms called model-free; these aren't based on any mathematical-physical formulation of a particular problem but rather on generic learning techniques and repeating experiences.这些技术背后的理念是创造出像大脑一样工作的算法,由于神经科学和认知心理学的贡献,这一领域已经取得了许多重要的进展。特别是,人们对模式识别和联想记忆越来越感兴趣,模式识别和联想记忆的结构和功能与大脑皮层的情况类似。这种方法还允许更简单的算法,称为无模型;这些都不是基于特定问题的数学-物理公式,而是基于一般的学习技术和重复的经验。

Common deep learning applications include:常见的强化学习包括: 1. Image classification图像分类 2. Real-time visual tracking实时视觉跟踪 3. Autonomous car driving自动汽车驾驶 4. Logistic optimization物流优化 5. Bioinformatics生物信息 6. Speech recognition语音识别

1.4 Machine learning and big data机器学习与大数据

Another area that can be exploited using machine learning is big data. After the first release of Apache Hadoop, which implemented an efficient MapReduce algorithm, the amount of information managed in different business contexts grew exponentially. At the same time, the opportunity to use it for machine learning purposes arose and several applications such as mass collaborative filtering became reality.另一个可以利用机器学习的领域是大数据。在第一次发布Apache Hadoop(它实现了一种高效的MapReduce算法)之后,在不同业务上下文中管理的信息量成倍增长。与此同时,将其用于机器学习目的的机会出现了,一些应用程序如大规模协同过滤成为现实。

1.5 Further reading延伸阅读

1.6 Summary总结

2 A Important Elements in Machine Learning机器学习重要因素

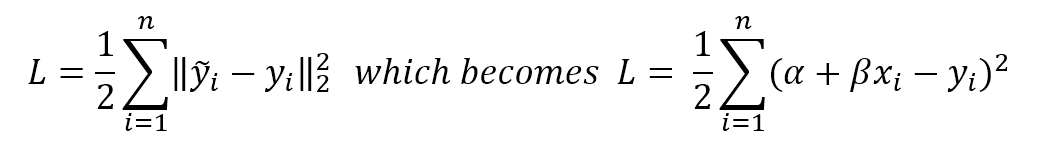

a good machine learning result is normally associated with the choice of the best loss function and the usage of the right algorithm to minimize it.好的机器学习结果通常与选择最佳损失函数并采用正确的算法最小化损失函数相关联。

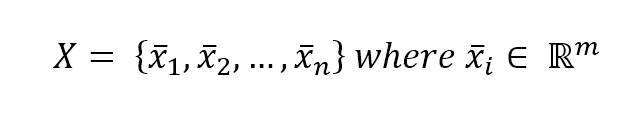

2.1 Data formats数据格式

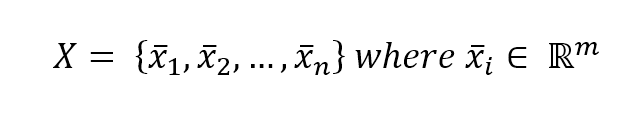

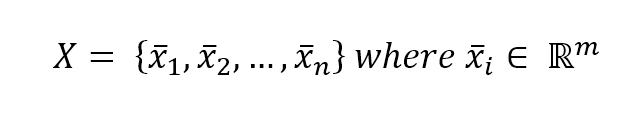

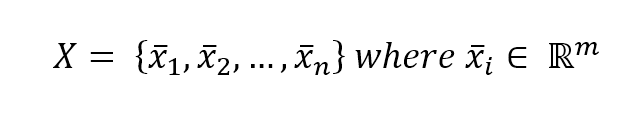

In a supervised learning problem, there will always be a dataset, defined as a finite set of real vectors with m features each:监督学习问题,总会有一个数据集,定义为实数向量(行向量)组成的有限集合,每个实数向量含m个特征:

$$\mathbf{X} = \lbrace\vec{x_1};\vec{x_2};\ldots;\vec{x_n}\rbrace \ where \ \vec{x_i} \in \mathbb{R}^m$$

也就是:

$$\mathbf{X} = \lbrace\vec{x_1};\vec{x_2};\ldots;\vec{x_n}\rbrace \\

where \ \vec{x_i} = [x_{i1},x_{i2},\ldots, x_{im}] \\

where \ x_{ij} \in \mathbb{R}$$

为什么表示成行向量的集合用{}而不是[],因为不同的行是不同的记录,不同的列代表不同的特征,我们并不关心记录出现的先后,我们关心的是特征。

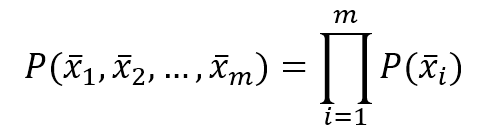

Considering that our approach is always probabilistic, we need to consider each X as drawn from a statistical multivariate distribution D. For our purposes, it's also useful to add a very important condition upon the whole dataset X: we expect all samples to be independent and identically distributed (i.i.d). This means all variables belong to the same distribution D, and considering an arbitrary subset of m values, it happens that:考虑到我们的方法总是概率,我们需要认为每个数据集X是从统计学的多变量分布D中得到的。为满足我们的目的,在整个数据集X上添加一个非常重要的条件也是很有用的;我们期待所有样本独立同分布(i.i.d)。这意味着所有变量都属于同一个分布D,考虑到m个值的任意子集,有(此时是用列向量表示):

$$P(\vec{x_1},\vec{x_2},\ldots,\vec{x_m}) = \prod_{i=1}^{m} {P(\vec{x_i})}$$

此博客环境不止怎的必须用``包裹起来方能正常显示。之后不能正确显示的话考虑加入``包裹。

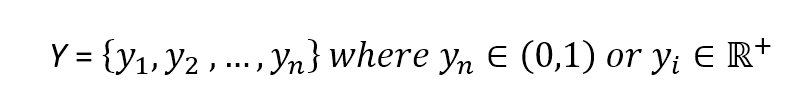

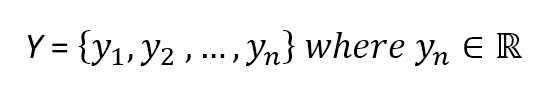

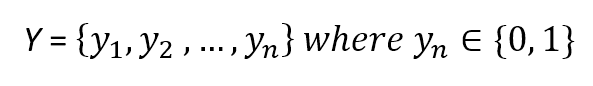

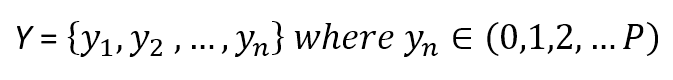

The corresponding output values can be both numerical-continuous or categorical. In the first case, the process is called regression, while in the second, it is called classification. Examples of numerical outputs are:对应输出可以是连续数值或分类。如果输出是连续数值,这个过程就是回归。而如果输出是分类属性,那就叫分类。数值输出的例子如下:

$$\mathbf{y} = \lbrace y_1 ,y_2 ,\ldots, y_n \rbrace \ where \ y_i \in (0,1) \ or \ y_i \in \mathbb{R}^+$$

这里作者是说例子,并不一定要求输出为0到1之间或正实数。

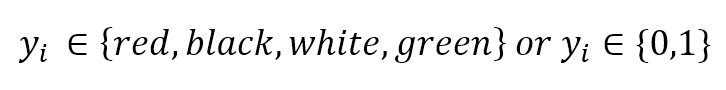

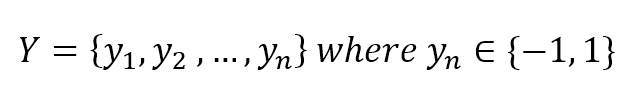

Categorical examples are:分类属性输出的例子如下:

$$\mathbf{y} = \lbrace y_1 ,y_2 ,\ldots, y_n \rbrace \ where \ y_i \in \lbrace red ,black ,white, green \rbrace \ or \ y_i \in \lbrace 0, 1 \rbrace$$

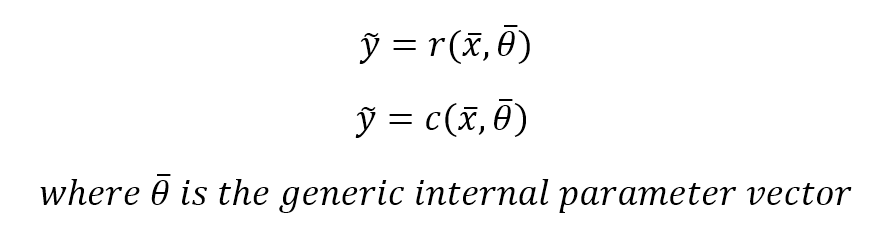

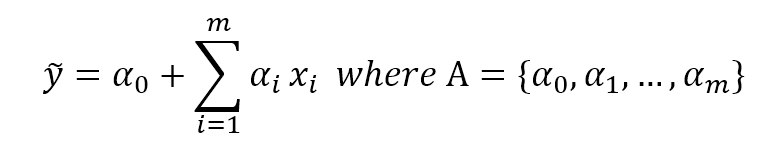

We define generic regressor, a vector-valued function which associates an input value to a continuous output and generic classifier, a vector-values function whose predicted output is categorical (discrete). If they also depend on an internal parameter vector which determines the actual instance of a generic predictor, the approach is called parametric learning:我们定义通用回归器,一个将输入值与连续输出关联的向量值函数;和一个通用分类器,一个预测输出为分类(离散的)的向量值函数。如果它们也靠内部参数向量来决定通用预测器的实际实例,这个方法称为参数学习:

$$\tilde{y} = r(\vec{x}, \vec{\theta}) \\

\tilde{y} = c(\vec{x}, \vec{\theta}) \\

where \ \vec{\theta} \ is \ the \ generic \ internel \ parameter \ vector$$

On the other hand, non-parametric learning doesn't make initial assumptions about the family of predictors (for example, defining a generic parameterized version of r(...) and c(...)). A very common non-parametric family is called instance-based learning and makes real-time predictions (without pre-computing parameter values) based on hypothesis determined only by the training samples (instance set). A simple and widespread approach adopts the concept of neighborhoods (with a fixed radius). In a classification problem, a new sample is automatically surrounded by classified training elements and the output class is determined considering the preponderant one in the neighborhood. kernel-based support vector machines are another very important algorithm family belonging to this class.相反,无参数学习并没有对预测器做初始假设(例如,例如,定义r(...)和c(...)的通用参数化版本)。一个非常常见的非参数族称为基于实例的学习,它基于仅由训练样本(实例集)确定的假设进行实时预测(不需要预计算参数值)。一种简单而广泛的方法采用了临近关系的概念(具有固定的半径)。在分类问题中,一个新的样本被已归类的训练元素自动包围,且输出分类参考占优势的临近关系来确定。基于核的支持向量机(kernel-based support vector machines)是另一种无参数学习的算法。

A generic parametric training process must find the best parameter vector which minimizes the regression error given a specific training dataset and it should also generate a predictor that can correctly generalize when unknown samples are provided.一般的参数训练过程必须找到在给定特定训练数据集的情况下使回/分类归误差最小化的最佳参数向量,并生成一个预测器,在提供未知样本时能够正确推广。显然,无参数的学习也是要优化什么?????以使误差最小化并可推广。抱歉,暂时不知道无参数的学习中对应参数学习中的参数的是什么。

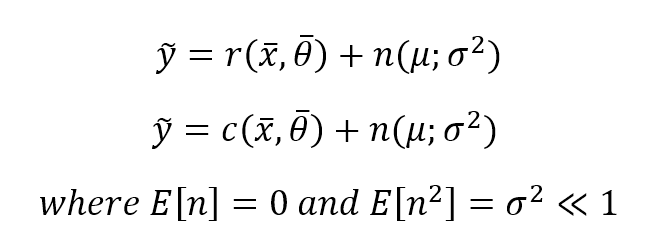

Another interpretation can be expressed in terms of additive noise:另一个解释可表达为附加的噪声:

$$\tilde{y} = r(\vec{x}, \vec{\theta}) + n(\mu;{\sigma}^2) \\

\tilde{y} = c(\vec{x}, \vec{\theta}) + n(\mu;{\sigma}^2) \\

where \ E(n)=\mu=0 \ and \ E(n^{2})={\sigma}^2 \ll 1$$

For our purposes, we can expect zero-mean and low-variance Gaussian noise added to a perfect prediction. A training task must increase the signal-noise ratio by optimizing the parameters. Of course, whenever such a term doesn't have zero mean (independently from the other X values), probably it means that there's a hidden trend that must be taken into account (maybe a feature that has been prematurely discarded). On the other hand, high noise variance means that X is dirty and its measures are not reliable.为满足我们的目的,我们期望零均值和低方差的高斯噪声来完美预测。训练任务必须通过优化参数来提高信噪比。当然,当这样一个项没有零平均值(独立于其他X值)时,可能意味着还有一个隐藏的趋势(可能是一个被过早丢弃的特性)必须考虑到。另一方面,高噪声方差意味着X是脏的,其测量不可靠。显然,无参数的学习也是要优化什么?????以使信噪比最大

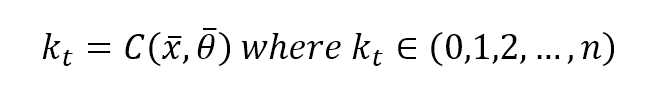

In unsupervised learning, we normally only have an input set X with m-length vectors, and we define clustering function (with n target clusters) with the following expression:在无监督学习中,我们通常只有一个长度为m的向量的输入集X,我们用下面的表达式定义聚类函数(有n个目标簇):

$$k_t = c(\vec{x}, \vec{\theta}) \ where \ k_t \in \lbrace 1,2,\ldots,n \rbrace$$

2.1.1 Multiclass strategies分类策略

When the number of output classes is greater than one, there are two main possibilities to manage a classification problem:当输出分类的数量大于1时,管理分类问题有两种主要的可能性 * One-vs-all一对多 * One-vs-one一对一

2.1.1.1 One-vs-all一对多

This is probably the most common strategy and is widely adopted by scikit-learn for most of its algorithms. If there are n output classes, n classifiers will be trained in parallel considering there is always a separation between an actual class and the remaining ones. This approach is relatively lightweight (at most, n-1 checks are needed to find the right class, so it has an O(n) complexity) and, for this reason, it's normally the default choice and there's no need for further actions.这可能是最常见的策略,并被scikit-learn的大多数算法广泛采用。如果有n个输出分类,考虑到实际分类和剩余分类之间总是存在间隔,将会并行训练n个分类器。这种方法是相对轻量级的(找到正确的分类最多需要n-1次检查,因此它具有O(n)复杂性),因此,它通常是默认选择,不需要进一步的操作。

Multiclass as One-Vs-All:

- sklearn.ensemble.GradientBoostingClassifier

- sklearn.gaussian_process.GaussianProcessClassifier (setting multi_class = “one_vs_rest”)

- sklearn.svm.LinearSVC (setting multi_class=”ovr”)

- sklearn.linear_model.LogisticRegression (setting multi_class=”ovr”)

- sklearn.linear_model.LogisticRegressionCV (setting multi_class=”ovr”)

- sklearn.linear_model.SGDClassifier

- sklearn.linear_model.Perceptron

- sklearn.linear_model.PassiveAggressiveClassifier

2.1.1.1 One-vs-one一对一

The alternative to one-vs-all is training a model for each pair of classes. The complexity is no longer linear (it's $O(n^2)$ indeed) and the right class is determined by a majority vote. In general, this choice is more expensive and should be adopted only when a full dataset comparison is not preferable.另一种方法是一对一,即每队分类训练一个模型。复杂度不再是线性的(而是$O(n^2)$),并且正确的分类取决于大多数投票结果。一般来说,这种选择比较昂贵,只有当完整的数据集比较不可取时才应该采用。

Multiclass as One-Vs-One:

- sklearn.svm.NuSVC

- sklearn.svm.SVC.

- sklearn.gaussian_process.GaussianProcessClassifier (setting multi_class = “one_vs_one”)

2.2 Learnability可学习性

A parametric model can be split into two parts: a static structure and a dynamic set of parameters. The former is determined by choice of a specific algorithm and is normally immutable (except in the cases when the model provides some re-modeling functionalities), while the latter is the objective of our optimization. Considering n unbounded parameters, they generate an n-dimensional space (imposing bounds results in a sub-space without relevant changes in our discussion) where each point, together with the immutable part of the estimator function, represents a learning hypothesis H (associated with a specific set of parameters):参数模型可分为两部分:一种静态结构和一组动态参数。前者由特定算法的选择决定,通常是不可变的(除非模型提供一些重新建模功能),而后者是我们优化的目标。考虑到n个无界参数,它们会生成一个n维空间(在我们的讨论中,在没有相关更改的情况下,将边界设置在子空间中),空间中每个点与估计函数的不可变部分一起表示一个学习假设H(与一组特定参数相关联):

$$\mathbf{H} = [{\theta}_{1},{\theta}_{2},\ldots,{\theta}_{n}]$$

各参数之间肯定是有顺序的,所以我改用[]表示。不要因此认为参数个数与数据点个数相等!

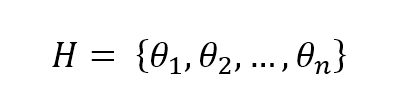

In the following figure, there's an example of a dataset whose points must be classified as red (Class A) or blue (Class B). Three hypotheses are shown: the first one (the middle line starting from left) misclassifies one sample, while the lower and upper ones misclassify 13 and 23 samples respectively:在下面的图中,有一个数据集的示例,其点必须分类为红色(类a)或蓝色(类B)。有三个假设:第一个假设(左起中间的线条)对1个样本进行了错误分类,而下面的假设和上面的假设分别对13个样本和23个样本进行了错误分类:

We say that the dataset X is linearly separable (without transformations) if there exists a hyperplane which divides the space into two subspaces containing only elements belonging to the same class.如果存在超平面,它将空间分成两个子空间,子空间只包含属于同一个类的元素,我们就说数据集X是线性可分的(没有变换)。

We say that the dataset X is linearly separable (without transformations) if there exists a hyperplane which divides the space into two subspaces containing only elements belonging to the same class.如果存在超平面,它将空间分成两个子空间,子空间只包含属于同一个类的元素,我们就说数据集X是线性可分的(没有变换)。

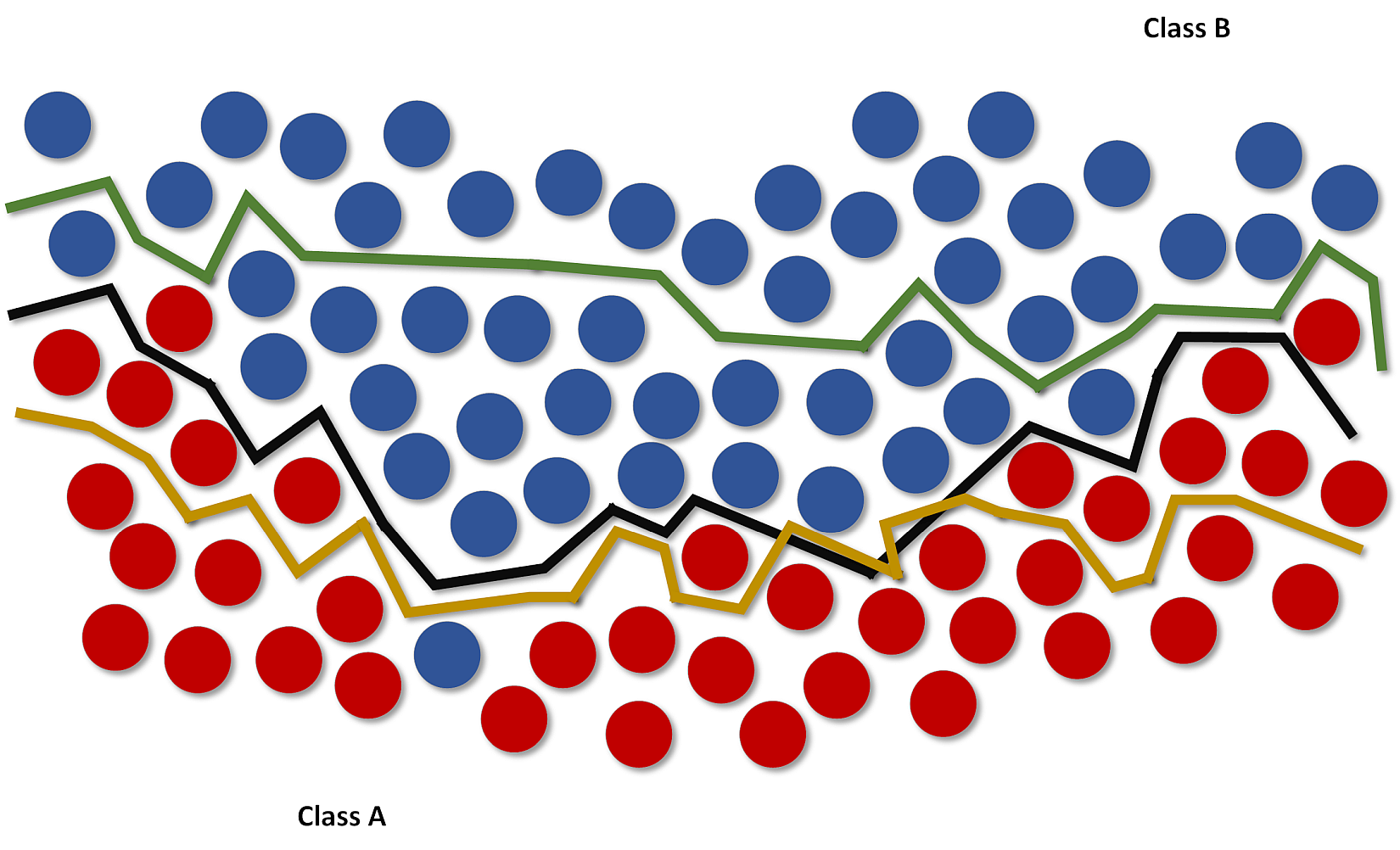

Consider the example shown in the following figure:考虑下图的例子:

The blue classifier is linear while the red one is cubic. At a glance, non-linear strategy seems to perform better, because it can capture more expressivity, thanks to its concavities. However, if new samples are added following the trend defined by the last four ones (from the right), they'll be completely misclassified. In fact, while a linear function is globally better but cannot capture the initial oscillation between 0 and 4, a cubic approach can fit this data almost perfectly but, at the same time, loses its ability to keep a global linear trend. Therefore, there are two possibilities:蓝色分类器是线性的而红色的是三次方的。乍一看,非线性策略似乎表现得更好,因为它可以捕捉到更多的表现力,这要归功于它的复杂性。但是,如果按照最后四个(从右边)定义的趋势添加新样本,它们将完全被错误分类。实际上,虽然线性函数在全局上更好,但不能捕捉到0和4之间的初始振荡,但三次方的方法可以几乎完美地拟合这些数据,但同时,失去了保持全局线性趋势的能力。有两种可能性:

The blue classifier is linear while the red one is cubic. At a glance, non-linear strategy seems to perform better, because it can capture more expressivity, thanks to its concavities. However, if new samples are added following the trend defined by the last four ones (from the right), they'll be completely misclassified. In fact, while a linear function is globally better but cannot capture the initial oscillation between 0 and 4, a cubic approach can fit this data almost perfectly but, at the same time, loses its ability to keep a global linear trend. Therefore, there are two possibilities:蓝色分类器是线性的而红色的是三次方的。乍一看,非线性策略似乎表现得更好,因为它可以捕捉到更多的表现力,这要归功于它的复杂性。但是,如果按照最后四个(从右边)定义的趋势添加新样本,它们将完全被错误分类。实际上,虽然线性函数在全局上更好,但不能捕捉到0和4之间的初始振荡,但三次方的方法可以几乎完美地拟合这些数据,但同时,失去了保持全局线性趋势的能力。有两种可能性:

- If we expect future data to be exactly distributed as training samples, a more complex model can be a good choice, to capture small variations that a lower-level one will discard. In this case, a linear (or lower-level) model will drive to underfitting, because it won't be able to capture an appropriate level of expressivity.如果我们期望未来的数据与训练数据完全一样分布,那么一个更复杂的模型可能是一个不错的选择,它可以捕获指数较低的模型将丢弃的小变动。在这种情况下,线性(或指数较低)模型会导致欠拟合,因为它无法捕捉到合适的表达水平。

- If we think that future data can be locally distributed differently but keeps a global trend, it's preferable to have a higher residual misclassification error as well as a more precise generalization ability. Using a bigger model focusing only on training data can drive to overfitting.如果我们认为未来的数据可能会局趋势相同但局部分布不同,那么最好是有更高的残差分类误差和更精确的泛化能力。使用一个只关注训练数据的更大的模型会导致过拟合。

2.2.1 Underfitting and overfitting欠拟合和过拟合

The purpose of a machine learning model is to approximate an unknown function that associates input elements to output ones (for a classifier, we call them classes). Unfortunately, this ideal condition is not always easy to find and it's important to consider two different dangers:机器学习模型的目的是近似一个未知函数,该函数将输入元素与输出元素关联起来(对于分类器,我们将其称为类)。不幸的是,这种理想状态并不总是容易找到的,考虑两种不同的危险是很重要的:

- Underfitting: It means that the model isn't able to capture the dynamics shown by the same training set (probably because its capacity is too limited).欠拟合:即模型不能捕捉训练集表现出的动态(很可能因为模型能力有限)。

- Overfitting: the model has an excessive capacity and it's not more able to generalize considering the original dynamics provided by the training set. It can associate almost perfectly all the known samples to the corresponding output values, but when an unknown input is presented, the corresponding prediction error can be very high.过拟合:即模型能力过大,因为(过多)考虑到训练集提供的原始动态,它并不能更泛化。它几乎可以将所有已知的样本与相应的输出值完美地关联起来,但当出现未知输入时,相应的预测误差可能非常高。

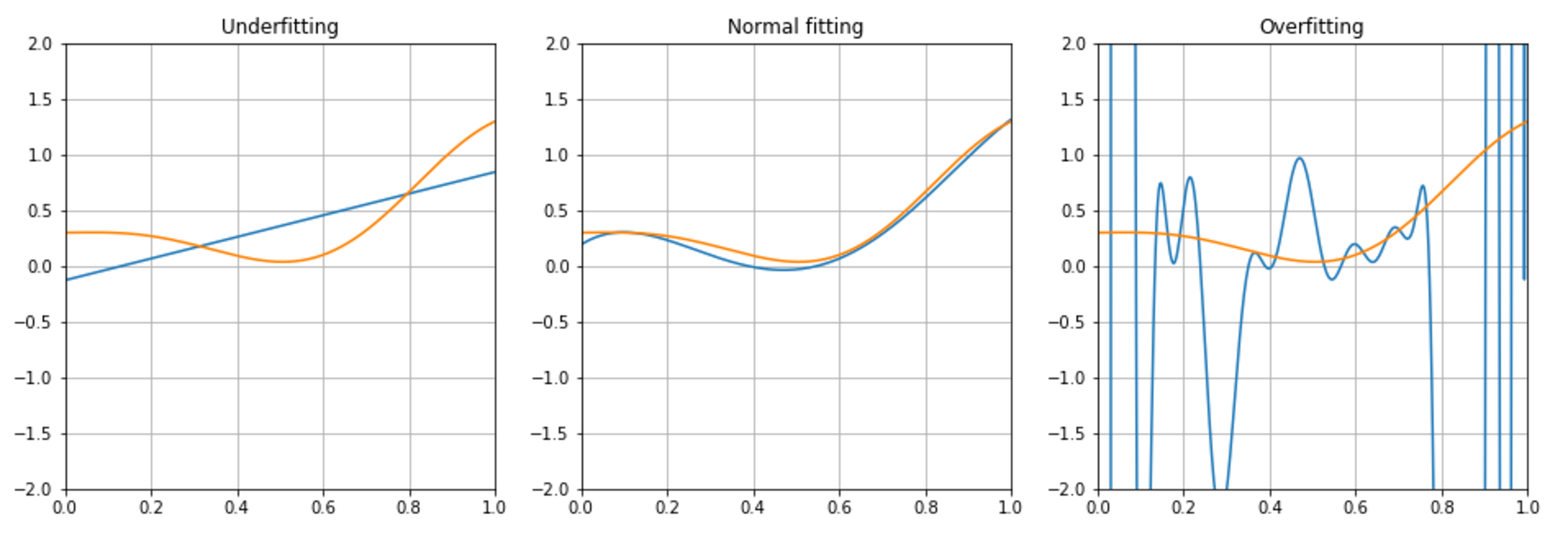

In the following picture, there are examples of interpolation with low-capacity (underfitting), normal-capacity (normal fitting), and excessive capacity (overfitting):下图中,是低能力(欠拟合)、正常能力(正常拟合)和过高能力(过拟合)插值的例子:

Underfitting is easier to detect considering the prediction error, while overfitting may prove to be more difficult to discover as it could be initially considered the result of a perfect fitting.考虑到预测误差,欠拟合更容易检测,而过度拟合可能被证明更难发现,因为它本来最初被认为是一个完美拟合的结果。

Underfitting is easier to detect considering the prediction error, while overfitting may prove to be more difficult to discover as it could be initially considered the result of a perfect fitting.考虑到预测误差,欠拟合更容易检测,而过度拟合可能被证明更难发现,因为它本来最初被认为是一个完美拟合的结果。

Cross-validation and other techniques that we're going to discuss in the next chapters can easily show how our model works with test samples never seen during the training phase. That way, it would be possible to assess the generalization ability in a broader context (remember that we're not working with all possible values, but always with a subset that should reflect the original distribution).交叉验证和我们将在下一章中讨论的其他技术可以很容易地展示我们的模型在训练阶段未见过的测试样本情况下表现如何。这样,就有可能在更广泛的背景下评估泛化能力(请记住,我们并不是使用所有可能的值,而是始终使用一个反映原始分布的子集)。

However, a generic rule of thumb says that a residual error is always necessary to guarantee a good generalization ability, while a model that shows a validation accuracy of 99.999... percent on training samples is almost surely overfitted and will likely be unable to predict correctly when never-seen input samples are provided.然而,一个通用的经验法则说,为了保证良好的泛化能力,残差总是必要的,而一个在训练样本上验证精度为99.999……百分比的模型几乎肯定是过拟合的,并且可能无法对提供的未见输入样本进行正确预测。

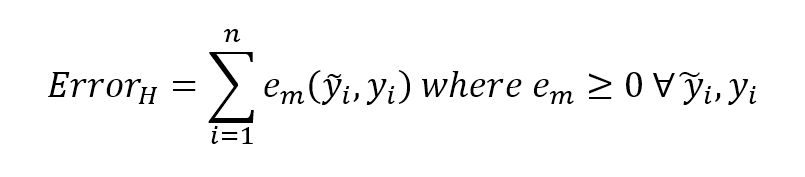

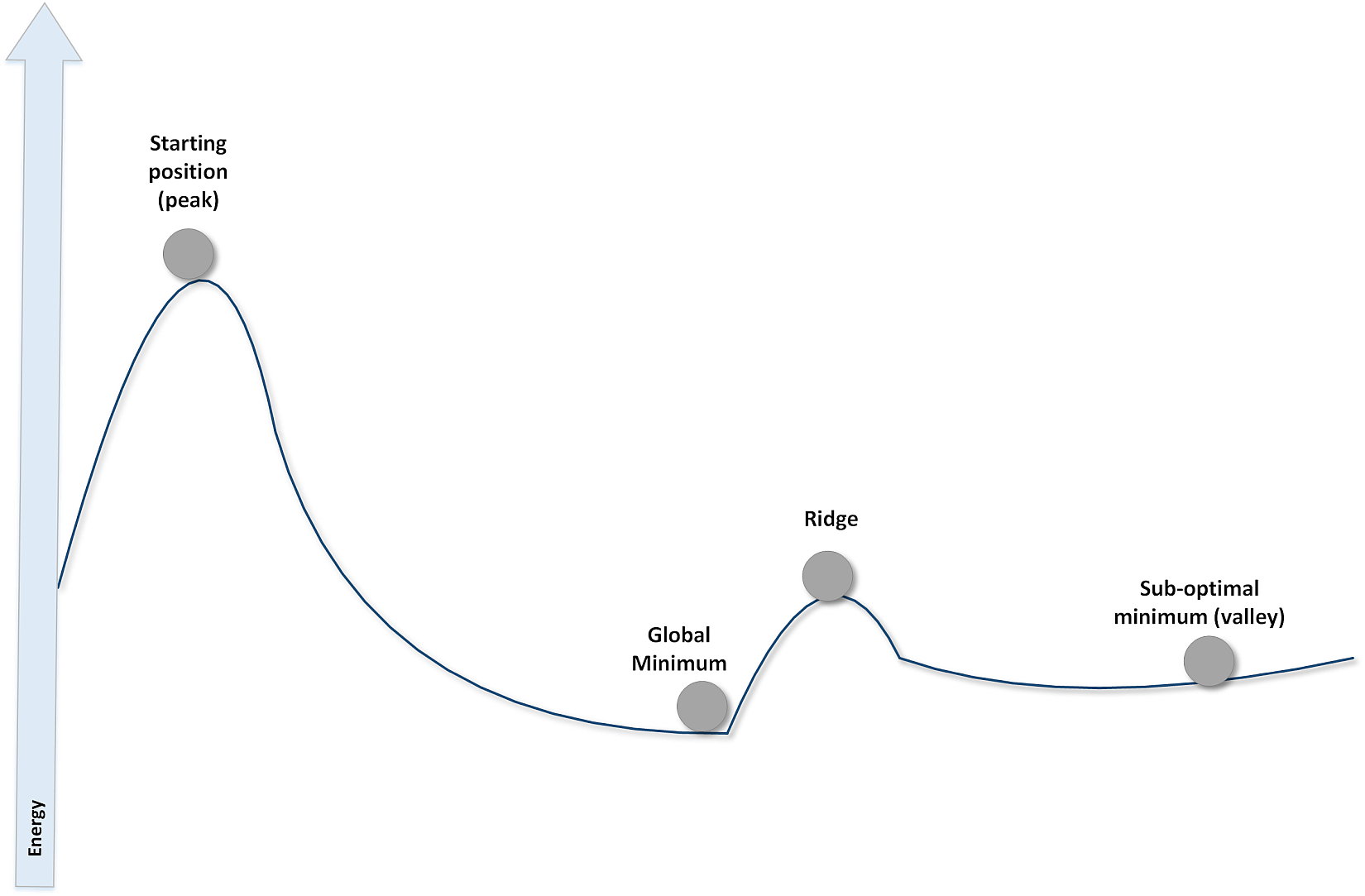

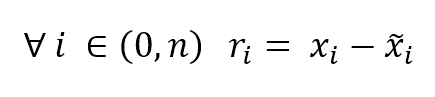

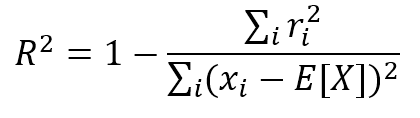

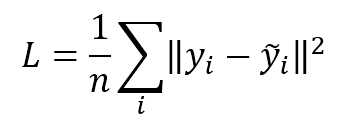

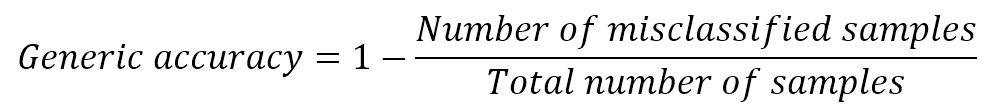

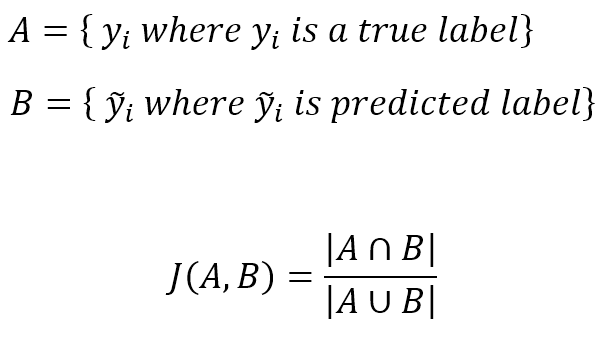

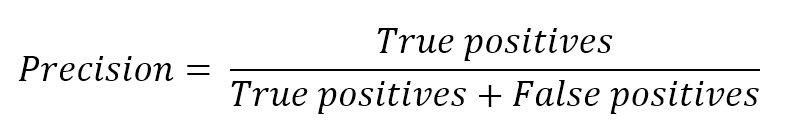

2.2.2 Error measures误差度量

In general, when working with a supervised scenario, we define a non-negative error measure $e_m$ which takes two arguments (expected and predicted output) and allows us to compute a total error value over the whole dataset (made up of n samples):通常,在使用有监督场景时,我们定义一个非负误差度量$e_m$,它接受两个参数(预期输出和预期输出),并允许我们计算整个数据集(由n个样本组成)的总误差值:

$${Error}_{H}=\sum_{i=1}^{n} {e_{m}(\tilde{y_{i}},y_{i})} \ where \ e_{m} \ge 0 \ \forall \ \tilde{y_{i}},y_{i}$$

This value is also implicitly dependent on the specific hypothesis H through the parameter set, therefore optimizing the error implies finding an optimal hypothesis (considering the hardness of many optimization problems, this is not the absolute best one, but an acceptable approximation). In many cases, it's useful to consider the mean square error (MSE):这个值也通过参数集隐含地依赖于特定的假设H,因此优化误差意味着找到一个最优假设(考虑到许多优化问题的难度,这不是绝对最好的,而是一个可接受的近似)。在许多情况下,考虑均方误差(MSE)是很有用的:

$${Error}_{H}=\frac{1} {n} \sum_{i=1}^{n} {(\tilde{y_{i}} - y_{i})^2}$$

Its initial value represents a starting point over the surface of a n-variables function. A generic training algorithm has to find the global minimum or a point quite close to it (there's always a tolerance to avoid an excessive number of iterations and a consequent risk of overfitting). This measure is also called loss function because its value must be minimized through an optimization problem. When it's easy to determine an element which must be maximized, the corresponding loss function will be its reciprocal.它的初始值表示一个n变量函数表面的起点。一个通用的训练算法必须找到全局最小值或非常接近它的点(总是要有一个容忍度来避免过多的迭代和随之而来的过度拟合风险)。这种度量也称为损失函数,因为它的值必须通过优化问题最小化。当很容易确定一个必须最大化的元素时,相应的损失函数将是它的倒数(或负值,这样损失函数仍可通过最小化来优化)。

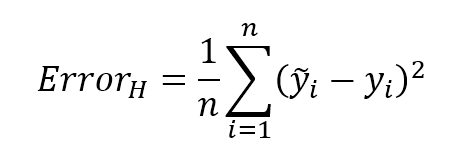

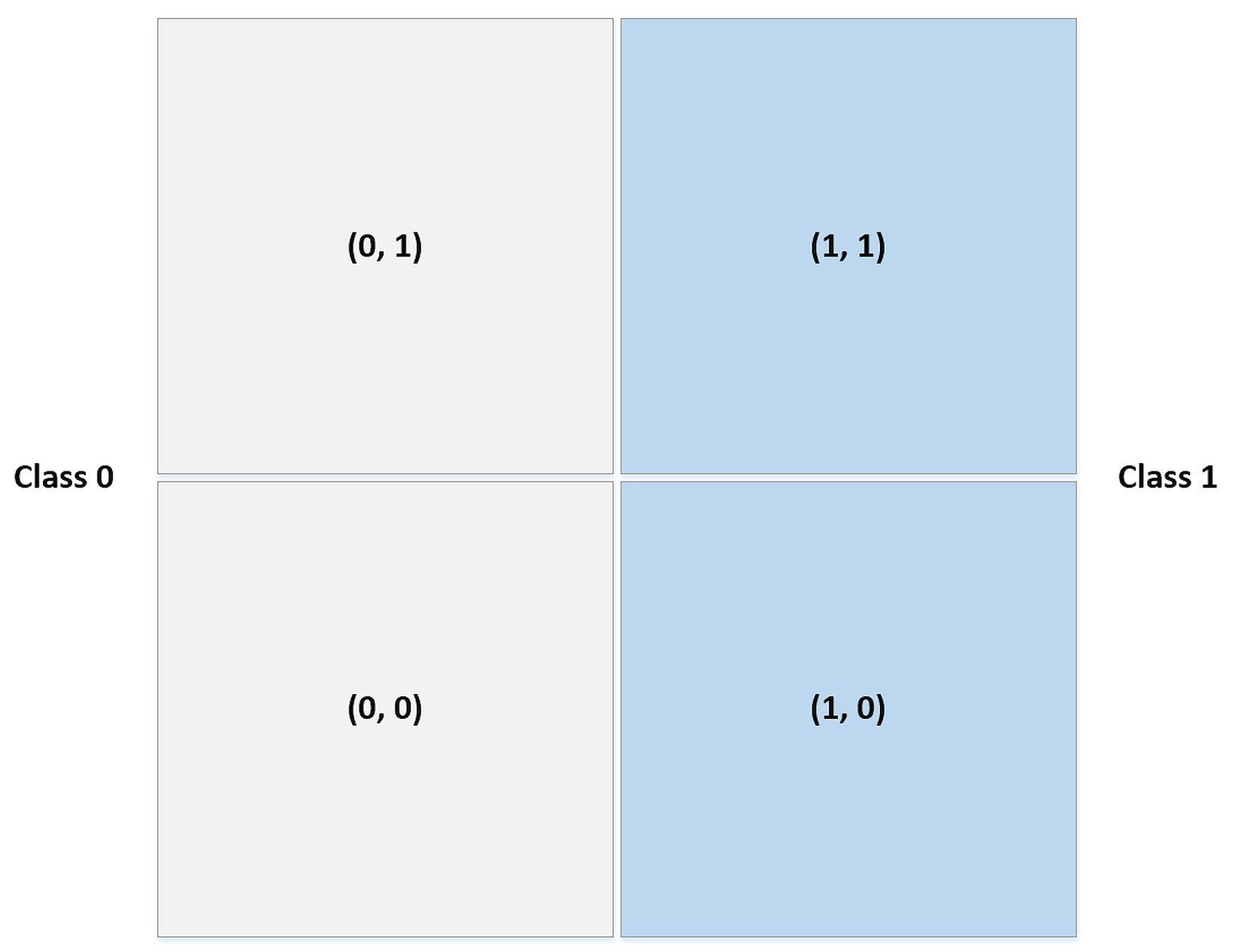

Another useful loss function is called zero-one-loss and it's particularly efficient for binary classifications (also for one-vs-rest multiclass strategy):另一有用损失函数叫零一损失,特别对二分类问题(也对一对多多分类策略)特别有效:

$${L}_{0/1H}(\tilde{y_{i}},y_{i})=\begin{cases} 0, & \text{if $\tilde{y_{i}} = y_{i}$} \\ 1, & \text{if $\tilde{y_{i}} \ne y_{i}$} \end{cases}$$

This function is implicitly an indicator and can be easily adopted in loss functions based on the probability of misclassification.该函数是一个隐式的指示器,在基于误分类概率的损失函数中很容易使用。

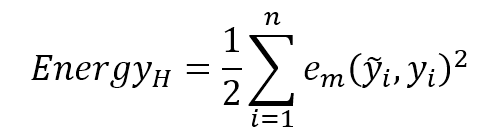

A helpful interpretation of a generic (and continuous) loss function can be expressed in terms of potential energy:一个通用的(连续的)损失函数的有用解释可以用势能项来表示:

$${Energy}_{H}=\frac{1} {2} \sum_{i=1}^{n} {{e_{m}}^2(\tilde{y_{i}} , y_{i})}$$

The predictor is like a ball upon a rough surface: starting from a random point where energy (=error) is usually rather high, it must move until it reaches a stable equilibrium point where its energy (relative to the global minimum) is null. In the following figure, there's a schematic representation of some different situations:预测器犹如粗糙表面上的球:从能量(也就是误差)通常相当高的随机点开始,移动到能量为零(相对全局最小)的稳定的平衡点方止。下图中,有一些不同情况的示意图:

Just like in the physical situation, the starting point is stable without any external perturbation, so to start the process, it's needed to provide initial kinetic energy. However, if such an energy is strong enough, then after descending over the slope the ball cannot stop in the global minimum. The residual kinetic energy can be enough to overcome the ridge and reach the right valley. If there are not other energy sources, the ball gets trapped in the plain valley and cannot move anymore. There are many techniques that have been engineered to solve this problem and avoid local minima. However, every situation must always be carefully analyzed to understand what level of residual energy (or error) is acceptable, or whether it's better to adopt a different strategy.就像在物理情况下,起始点是稳定的,没有任何外部扰动,所以要开始这个过程,需要提供初始动能。然而,如果这样的能量足够强大,那么在下降到斜坡之后,球就不能在全球最小值停止。剩余动能足以克服山脊,到达右边的山谷。如果没有其他能源,球就会被困在平缓的山谷,无法移动。有许多技术已经被设计来解决这个问题并避免局部极小值。然而,每一种情况都必须仔细分析,以了解什么程度的剩余能量(或误差)是可以接受的,或者采用不同的策略是否更好。

Just like in the physical situation, the starting point is stable without any external perturbation, so to start the process, it's needed to provide initial kinetic energy. However, if such an energy is strong enough, then after descending over the slope the ball cannot stop in the global minimum. The residual kinetic energy can be enough to overcome the ridge and reach the right valley. If there are not other energy sources, the ball gets trapped in the plain valley and cannot move anymore. There are many techniques that have been engineered to solve this problem and avoid local minima. However, every situation must always be carefully analyzed to understand what level of residual energy (or error) is acceptable, or whether it's better to adopt a different strategy.就像在物理情况下,起始点是稳定的,没有任何外部扰动,所以要开始这个过程,需要提供初始动能。然而,如果这样的能量足够强大,那么在下降到斜坡之后,球就不能在全球最小值停止。剩余动能足以克服山脊,到达右边的山谷。如果没有其他能源,球就会被困在平缓的山谷,无法移动。有许多技术已经被设计来解决这个问题并避免局部极小值。然而,每一种情况都必须仔细分析,以了解什么程度的剩余能量(或误差)是可以接受的,或者采用不同的策略是否更好。

注意到误差公式和能量公式之间的关系了吗?不考虑求和记号,其实后者求导即得前者。

2.2.3 PAC learning PAC学习理论

In many cases machine learning seems to work seamlessly, but is there any way to determine formally the learnability of a concept? In 1984, the computer scientist L. Valiant proposed a mathematical approach to determine whether a problem is learnable by a computer. The name of this technique is PAC, or probably approximately correct.在许多情况下,机器学习似乎可以无缝地工作,但有什么方法可以正式确定一个概念的可学性?1984年,计算机科学家L. Valiant提出了一种数学方法来确定问题是否可以通过计算机学习。这种技术叫可能近似正确(PAC)。

The original formulation (you can read it in Valiant L., A Theory of the Learnable, Communications of the ACM, Vol. 27, No. 11 , Nov. 1984) is based on a particular hypothesis, however, without a considerable loss of precision, we can think about a classification problem where an algorithm A has to learn a set of concepts. In particular, a concept is a subset of input patterns X which determine the same output element. Therefore, learning a concept (parametrically) means minimizing the corresponding loss function restricted to a specific class, while learning all possible concepts (belonging to the same universe), means finding the minimum of a global loss function.最初的公式(你可从Valiant L., A Theory of the Learnable, Communications of the ACM, Vol. 27, No. 11 , Nov. 1984阅读)是基于一个特定的假设,然而,在没有很大的精度损失的情况下,我们可以考虑一个分类问题,其中算法A必须学习一组概念。特别是,概念是输入模式X的子集,X决定相同输出元素。因此,学习一个概念(参数化)意味着将相应的损失函数限制在一个特定的分类中,而学习所有可能的概念(属于同一个全局)意味着找到全局损失函数的最小值。

However, given a problem, we have many possible (sometimes, theoretically infinite) hypotheses and a probabilistic trade-off is often necessary. For this reason, we accept good approximations with high probability based on a limited number of input elements and produced in polynomial time.然而,给定一个问题,我们有许多可能的(有时,理论上是无限的)假设,一个概率权衡通常是必要的。因此,我们接受基于有限数量输入元素并在多项式时间内产生的高概率近似。

Therefore, an algorithm A can learn the class C of all concepts (making them PAC learnable) if it's able to find a hypothesis H with a procedure $O(n^k)$ so that A, with a probability p, can classify all patterns correctly with a maximum allowed error $m_e$. This must be valid for all statistical distributions on X and for a number of training samples which must be greater than or equal to a minimum value depending only on p and me.因此,如果算法能够通过过程$O(n^k)$找到假设H,那么A,在概率p的情况下,可以用最大允许误差$m_e$正确地分类所有模式,那么一个算法A可以学习所有概念构成的分类C(使它们成为PAC可学习的)。

The constraint to computation complexity is not a secondary matter, in fact, we expect our algorithms to learn efficiently in a reasonable time also when the problem is quite complex. An exponential time could lead to computational explosions when the datasets are too large or the optimization starting point is very far from an acceptable minimum. Moreover, it's important to remember the so-called curse of dimensionality, which is an effect that often happens in some models where training or prediction time is proportional (not always linearly) to the dimensions, so when the number of features increases, the performance of the models (that can be reasonable when the input dimensionality is small) gets dramatically reduced. Moreover, in many cases, in order to capture the full expressivity, it's necessary to have a very large dataset and without enough training data, the approximation can become problematic (this is called Hughes phenomenon). For these reasons, looking for polynomial-time algorithms is more than a simple effort, because it can determine the success or the failure of a machine learning problem. For these reasons, in the next chapters, we're going to introduce some techniques that can be used to efficiently reduce the dimensionality of a dataset without a problematic loss of information.计算复杂度的限制并不是次要问题,事实上,当问题相当复杂的时候,我们希望我们的算法在合理的时间内高效地学习。当数据集太大或者优化起点离可接受的最小值太远时,指数级时间可能导致计算爆炸。此外,重要的是要记住所谓的维度诅咒,训练和预测时间与维度成正比(不总是线性)的模型经常有这类问题,所以当特征数量的增加,模型的性能(输入维度小时模型的性能才合理)被大大降低了。此外,在许多情况下,为了获得完整的表达能力,需要有一个非常大的数据集,而没有足够的训练数据,近似就会成为问题(这被称为休斯现象)。由于这些原因,寻找多项式时间算法不仅仅是一项简单的工作,因为它可以决定机器学习问题的成败。为防止维度诅咒和休斯现象,需要在不损失信息的前提下有效降低维度。

我的总结:进行T次运算,以p的概率实现$m_e$的误差实现算法A,如果T不大于$O(n^k)$且p和$m_e$可框定范围,那么算法A就是分类C(分类C包含算法A的全部可能的概念或者说可能的参数)的PAC可学算法。

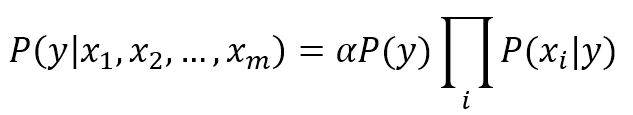

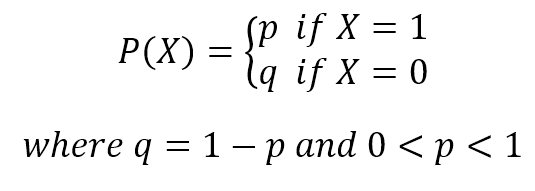

2.3 Statistical learning approaches统计学习法

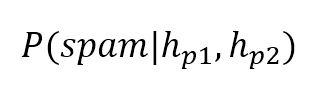

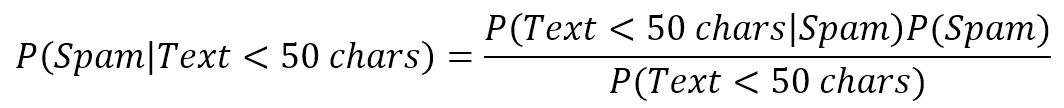

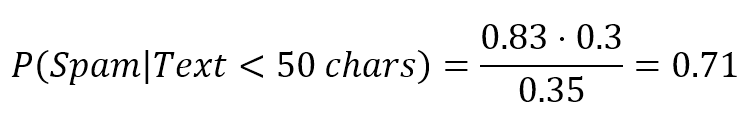

Imagine that you need to design a spam-filtering algorithm starting from this initial (over-simplistic) classification based on two parameters:假设您需要设计一个基于两个参数的初始(过于简单)分类的垃圾邮件过滤算法:

| Parameter | Spam emails (X1) | Regular emails (X2) |

|---|---|---|

| p1 - Contains > 5 blacklisted words | 80 | 20 |

| p2 - Message length < 20 characters | 75 | 25 |

We have collected 200 email messages (X) (for simplicity, we consider p1 and p2 mutually exclusive) and we need to find a couple of probabilistic hypotheses (expressed in terms of p1 and p2), to determine:我们收集了200封电子邮件(X)(为了简单起见,我们认为p1和p2互斥,只为了保证上表和刚好为200,无其他意义。),我们需要找到一对概率假设(用p1和p2表示)来确定:

$$P(spam \mid h_{p1}, h_{p2})$$

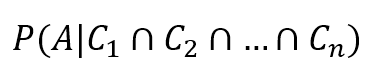

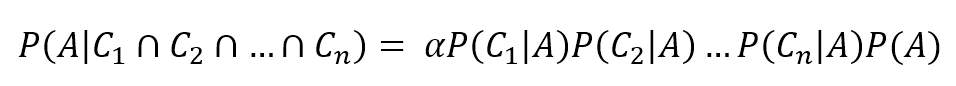

We also assume the conditional independence of both terms (it means that $h_{p1}$ and $h_{p2}$ contribute conjunctly to spam in the same way as they were alone).我们还假设这两项都是条件独立的(这意味着$h_{p1}$和$h_{p2}$以单独的方式共同导致垃圾邮件)。

For example, we could think about rules (hypotheses) like: "If there are more than five blacklisted words" or "If the message is less than 20 characters in length" then "the probability of spam is high" (for example, greater than 50 percent). However, without assigning probabilities, it's difficult to generalize when the dataset changes (like in a real world antispam filter). We also want to determine a partitioning threshold (such as green, yellow, and red signals) to help the user in deciding what to keep and what to trash.例如,我们可想象规则(假设)如:“若有超过超过5个黑名单单词”或“若信息长度少于20字符”那么“是垃圾邮件的可能性高”(例如,超过50%)。但是,如果不分配概率,就很难在数据集发生变化时进行概括(就像在实际的反垃圾邮件过滤器中那样)。我们还希望确定分区阈值(例如绿色、黄色和红色信号),以帮助用户决定保留哪些内容和销毁哪些内容。

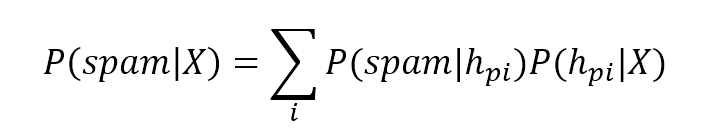

As the hypotheses are determined through the dataset $\mathbf{X}$, we can also write (in a discrete form):由于假设是通过数据集$\mathbf{X}$确定的,我们还可以(以离散形式)写成:

$$P(spam \mid \mathbf{X})=\sum_{i} {P(spam \mid h_{pi})P(h_{pi} \mid \mathbf{X})}$$

【

记$A=spam \mid \mathbf{X}$并$B_{i}=h_{pi} \mid \mathbf{X}$,若$B_{i}$的全体组成完备事件组,即它们两两互不相容,其和为全集,那么由全概率公式:

$$P(A)=\sum_{i} {P(A \mid B_{i})P(B_{i})}$$

即:

$$P(spam \mid \mathbf{X})=\sum_{i} {P(spam \mid h_{pi})P(h_{pi} \mid \mathbf{X})}$$

注意:条件p1与p2互斥则其两者不可能独立,作者原文是p1的条件概率与p2的条件概率互斥。 】

【 公式右边,第一项很好求,就如上表。公式第二项可以采用以下3种方法:

贝叶斯方法。假设多时很耗时,因为要穷尽计算每一项然后求和。

MAP learning。根据后验概率选择最可能的假设,其他假设忽略。缺点是依赖于先验概率。

Maximum-likelihood learning。即用极大似然代替。

此备注暂留,但由于摘抄时未详细信息,已不知道所指是何公式了。 】

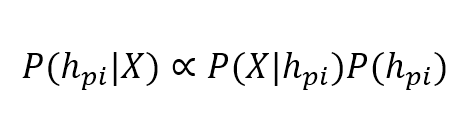

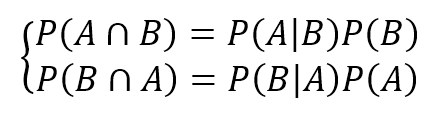

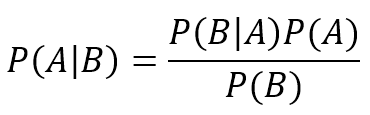

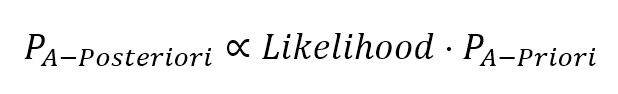

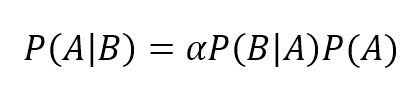

In this example, it's quite easy to determine the value of each term. However, in general, it's necessary to introduce the Bayes formula (which will be discussed in Chapter 6, Naive Bayes):这个例子中,决定每项的值很容易。然而,引入贝叶斯公式是必要的(将在第六章讨论)。

$$P(h_{pi} \mid \mathbf{X}) \propto P(\mathbf{X} \mid h_{pi})P(h_{pi})$$

The proportionality is necessary to avoid the introduction of the marginal probability P(X), which acts only as a normalization factor (remember that in a discrete random variable, the sum of all possible probability outcomes must be equal to 1).为了避免引入边际概率P(X),比例是必要的。边际概率P(X)仅作为一个标准化因子(记住,在离散随机变量中,所有可能的概率结果之和必须等于1)。

In the previous equation, the first term is called a posteriori (which comes after) probability, because it's determined by a marginal Apriori (which comes first) probability multiplied by a factor which is called likelihood. To understand the philosophy of such an approach, it's useful to take a simple example: tossing a fair coin. Everybody knows that the marginal probability of each face is equal to 0.5, but who decided that? It's a theoretical consequence of logic and probability axioms (a good physicist would say that it's never 0.5 because of several factors that we simply discard). After tossing the coin 100 times, we observe the outcomes and, surprisingly, we discover that the ratio between heads and tails is slightly different (for example, 0.46). How can we correct our estimation? The term called likelihood measures how much our actual experiments confirm the Apriori hypothesis and determines another probability (a posteriori) which reflects the actual situation. The likelihood, therefore, helps us in correcting our estimation dynamically, overcoming the problem of a fixed probability.在前面的方程中,第一项被称为后验概率(因为已见数据),因为它是由边际先验概率(因为没见数据)乘以一个被称为似然的因子决定的。要理解这种方法的哲学,我们可以举一个简单的例子:抛一枚均匀的硬币。大家都知道每个面的边际概率是0.5,但谁决定的?这是逻辑和概率公理的一个理论推论(一个好的物理学家会说,它永远不会是0.5,因为有几个因素我们只是简单地忽略了)。抛100次硬币之后,我们观察了结果,令人惊讶的是,我们发现正面和反面的比例略有不同(例如,0.46)。我们怎样才能修正我们的估计?所谓的似然测量我们的实际实验在多大程度上证实了先验假设,并决定了反映实际情况的另一种可能性(后验)。因此,这种可能性有助于我们动态地修正估计,克服固定概率的问题。

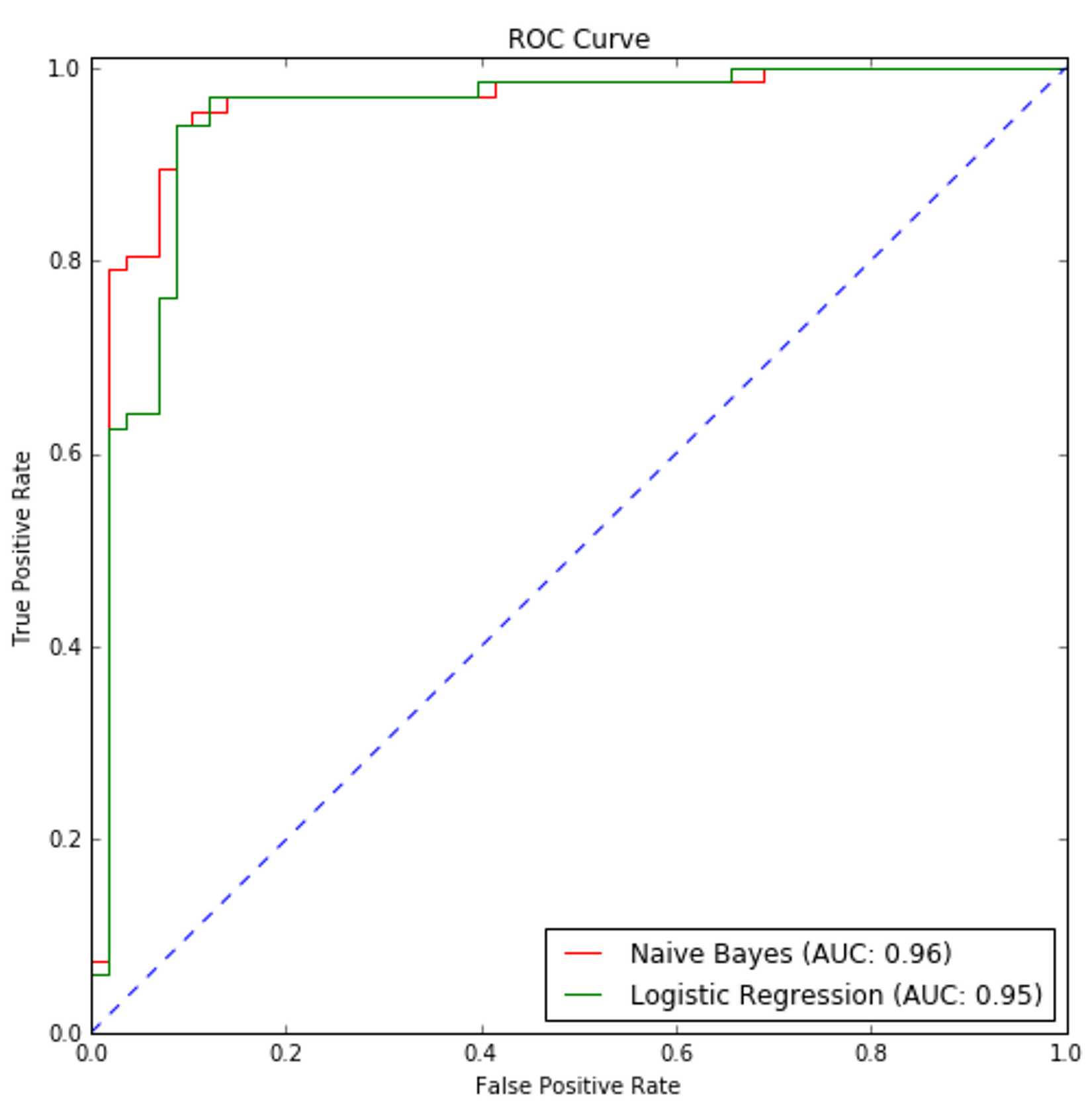

In Chapter 6, Naive Bayes, dedicated to naive Bayes algorithms, we're going to discuss these topics deeply and implement a few examples with scikit-learn, however, it's useful to introduce here two statistical learning approaches which are very diffused.第六章朴素贝叶斯,对于朴素贝叶斯算法,我们将深入讨论这些话题,并使用scikit-learn实现一些示例,然而,在这里介绍两种非常分散的统计学习方法是很有用的。

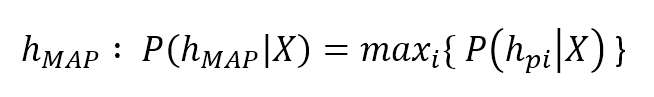

2.3.1 MAP learning 最大后验(MAP)学习

When selecting the right hypothesis, a Bayesian approach is normally one of the best choices, because it takes into account all the factors and, as we'll see, even if it's based on conditional independence, such an approach works perfectly when some factors are partially dependent. However, its complexity (in terms of probabilities) can easily grow because all terms must always be taken into account. For example, a real coin is a very short cylinder, so, in tossing a coin, we should also consider the probability of even. Let's say, it's 0.001. It means that we have three possible outcomes: P(head) = P(tail) = (1.0 - 0.001) / 2.0 and P(even) = 0.001. The latter event is obviously unlikely, but in Bayesian learning it must be considered (even if it'll be squeezed by the strength of the other terms).选择正确假设时,贝叶斯方法通常是最好方法之一,因为其计入所有因子并,我们将发现,即使这种方法是基于条件独立假设,当某些因子部分依赖时,这种方法也能很好地工作。然而,它的复杂度(就概率而言)很容易增加,因为所有的项都必须考虑在内。例如,一枚真正的硬币是一个非常短的圆柱体,所以,在抛硬币时,我们也应该考虑竖起来(这里even可翻译成相等,也就是硬币竖起来)的概率。比如是0.001。那么会有三种结果:P(head)=P(tail)=(1.0-0.001)/2.0、P(even)=0.001。后者明显不怎么可能,但在贝叶斯学习中,它必须被考虑(即使它会被其他术语的强度挤压)。

An alternative is picking the most probable hypothesis in terms of a posteriori probability:另一种选择是根据后验概率选择最可能的假设:

$$h_{MAP}:P(h_{MAP} \mid \mathbf{X})=max_{i}\{P(h_{pi} \mid \mathbf{X})\}$$

This approach is called MAP (maximum a posteriori) and it can really simplify the scenario when some hypotheses are quite unlikely (for example, in tossing a coin, a MAP hypothesis will discard P(even)). However, it still does have an important drawback: it depends on Apriori probabilities (remember that maximizing the a posteriori implies considering also the Apriori). As Russel and Norvig (Russel S., Norvig P., Artificial Intelligence: A Modern Approach, Pearson) pointed out, this is often a delicate part of an inferential process, because there's always a theoretical background which can drive to a particular choice and exclude others. In order to rely only on data, it's necessary to have a different approach.这种方法被称为MAP(后验极大值),它可以简化某些假设不太可能发生的情况(例如,在抛硬币时,MAP假设会丢弃P(even))。然而,它仍然有一个重要的缺点:它依赖于先验概率(记住,最大化后验意味着同时考虑先验)。Russel和Norvig指出,这通常是推理过程中一个微妙的部分,因为总有一个理论背景可以驱使人们做出特定的选择而排除其他选择。为了只依赖于数据,有必要使用不同的方法。

作者在这里并没有说到如何做到只依赖于数据,或者其他章节会讲到。或者我以前学的Bayesian Analysis with Python会讲到。我连这个都不是很确定,说明这本书我还是没学懂大概!

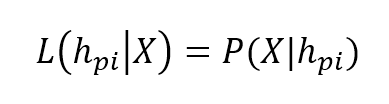

2.3.2 Maximum-likelihood learning最大似然学习

We have defined likelihood as a filtering term in the Bayes formula. In general, it has the form of:我们在贝叶斯公式中将似然定义为过滤器项。一般来说,它的形式是:

$$L(h_{pi} \mid \mathbf{X})=P(\mathbf{X} \mid h_{pi})$$

第i个假设的似然的表达式

Here the first term expresses the actual likelihood of a hypothesis, given a dataset X. As you can imagine, in this formula there are no more Apriori probabilities, so, maximizing it doesn't imply accepting a theoretical preferential hypothesis, nor considering unlikely ones. A very common approach, known as expectation-maximization and used in many algorithms (we're going to see an example in logistic regression), is split into two main parts:在这里,对于给定数据集X,第一项表示假设的似然。你会发现公式中不再有先验概率,因此,最大化它并不用接受一个理论上的优先假设,也不用考虑不可能的假设。一种非常常见的方法被称为期望最大化,并在许多算法中使用(我们将在逻辑回归中看到一个例子),它被分为两个主要部分:

- Determining a log-likelihood expression based on model parameters (they will be optimized accordingly)根据模型参数确定对数似然表达式(将相应地进行优化)

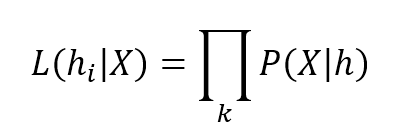

- Maximizing it until residual error is small enough最大化它,直到剩余误差足够小

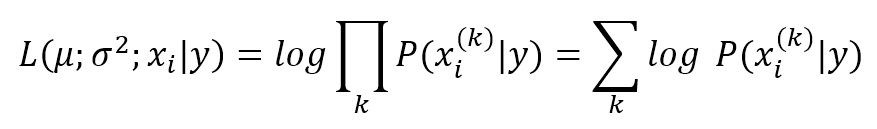

A log-likelihood (normally called L) is a useful trick that can simplify gradient calculations. A generic likelihood expression is:对数似然(logL)是简化梯度计算的有用技巧。通用似然表达式(注意L是未取对数时候)如下:

$$L(h_{i} \mid \mathbf{X}) = \prod_{k} {P(\mathbf{X}_k \mid h_{i})}$$

注意:L是未取对数前的表达式,取对数是因为概率计算很多时候假设条件独立,那么概率计算将是乘法,取对数可将乘法转为加法更方便。上面表达式中$\mathbf{X}_k$指$\mathbf{X}$取第k个值。当然,假设特征之间相互独立,也可以认为是第k个特征。

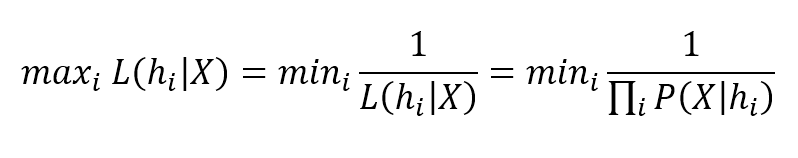

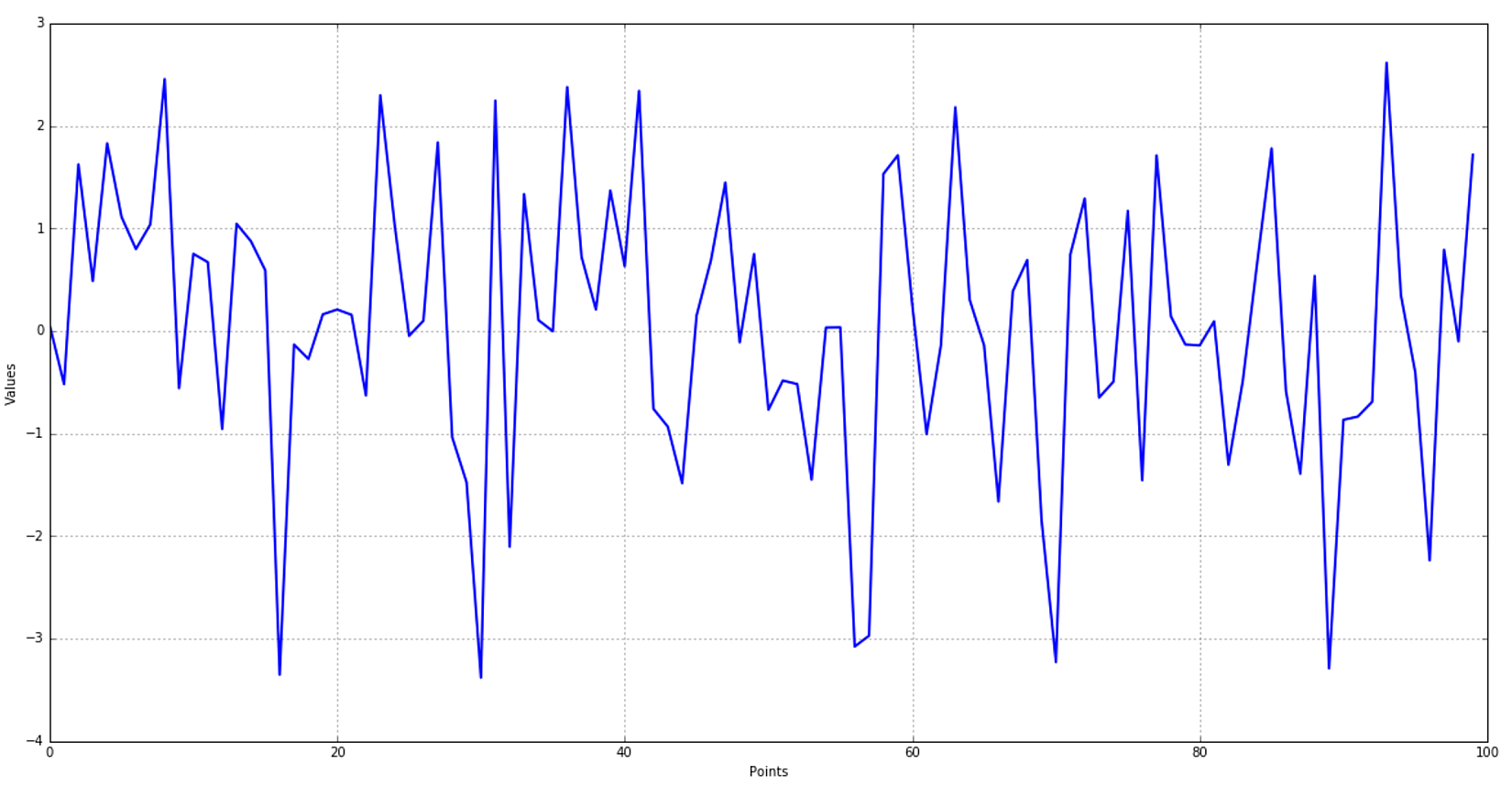

As all parameters are inside $h_i$, the gradient is a complex expression which isn't very manageable. However our goal is maximizing the likelihood, but it's easier minimizing its reciprocal:由于所有的参数都在$h_i$内,梯度是一个复杂的表达式,不是很容易处理。但是我们的目标是最大化可能性,但是最小化它的倒数会更容易:

$${max}_{i}L(h_{i} \mid \mathbf{X}) = {min}_{i} \frac {1} {L(h_{i} \mid \mathbf{X})} = {min}_{i} \frac {1} {\prod_{k} {P(\mathbf{X}_k \mid h_{i})}}$$

This can be turned into a very simple expression by applying natural logarithm (which is monotonic):取对数(对数是单调的)将得到很简单的表达式:

$${max}_{i}logL(h_{i} \mid \mathbf{X}) = {min}_{i} -logL(h_{i} \mid \mathbf{X}) = {min}_{i} \sum_{k} {-logP(\mathbf{X}_k \mid h_{i})}$$

The last term is a summation which can be easily derived and used in most of the optimization algorithms. At the end of this process, we can find a set of parameters which provides the maximum likelihood without any strong statement about prior distributions. This approach can seem very technical, but its logic is really simple and intuitive. To understand how it works, I propose a simple exercise, which is part of Gaussian mixture technique discussed also in Russel S., Norvig P., Artificial Intelligence: A Modern Approach, Pearson.最后一项是一个求和,可以很容易地使用在大多数优化算法(优化算法是找到最优参数的算法,关于优化算法请参阅wiki-Optimization algorithms)。在这个过程的最后,我们可以找到一组参数,它们提供了最大的可能性,而不需要任何关于先验分布的强声明。这种方法看起来专业,但它的逻辑非常简单和直观。为了理解它是如何工作的,我提出了一个简单的练习,这个练习是高斯混合技术的一部分。

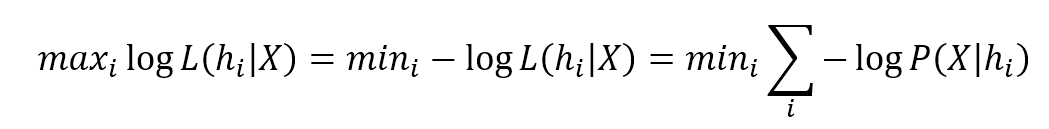

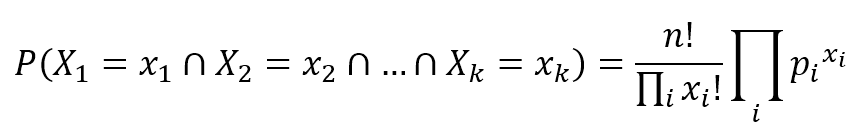

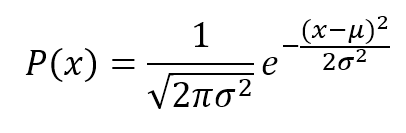

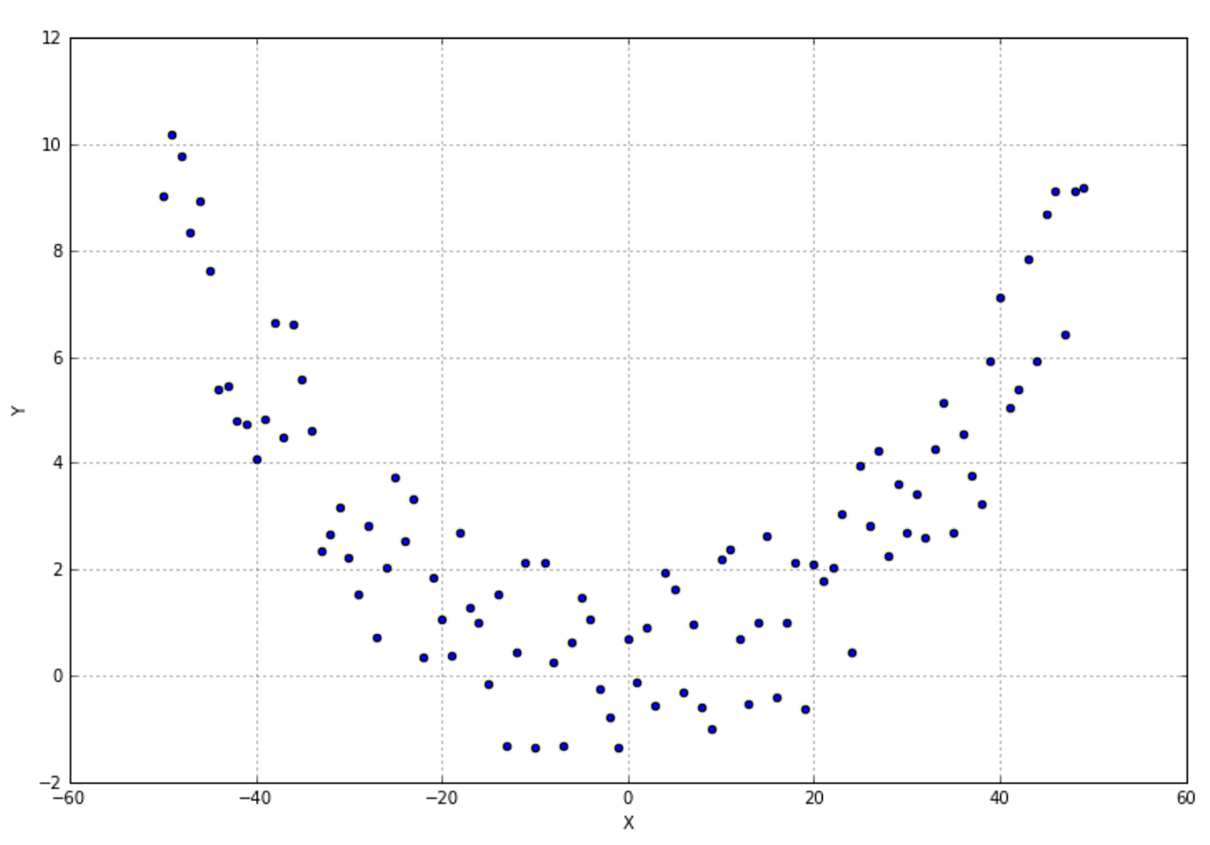

Let's consider 100 points drawn from a Gaussian distribution with zero mean and a standard deviation equal to 2.0 (quasi-white noise made of independent samples). The plot is shown next:让我们考虑从一个均值为零、标准差为2.0的高斯分布中抽取的100个点(由独立样本构成的准白噪声)。作图如下:

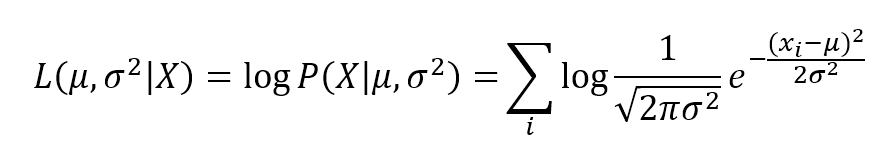

In this case, there's no need for a deep exploration (we know how they are generated), however, after restricting the hypothesis space to the Gaussian family (the most suitable considering only the graph), we'd like to find the best value for mean and variance. First of all, we need to compute the log-likelihood (which is rather simple thanks to the exponential function):在这种情况下,不需要进行深入的探索(我们知道数据是如何产生的),但是,在将假设空间限制在高斯族(考虑作图最容易)之后,我们希望找到均值和方差的最佳值(注意下式最左边其实应该是logL)。首先,我们需要计算对数似然(由于指数方程计算过程很简单):

In this case, there's no need for a deep exploration (we know how they are generated), however, after restricting the hypothesis space to the Gaussian family (the most suitable considering only the graph), we'd like to find the best value for mean and variance. First of all, we need to compute the log-likelihood (which is rather simple thanks to the exponential function):在这种情况下,不需要进行深入的探索(我们知道数据是如何产生的),但是,在将假设空间限制在高斯族(考虑作图最容易)之后,我们希望找到均值和方差的最佳值(注意下式最左边其实应该是logL)。首先,我们需要计算对数似然(由于指数方程计算过程很简单):

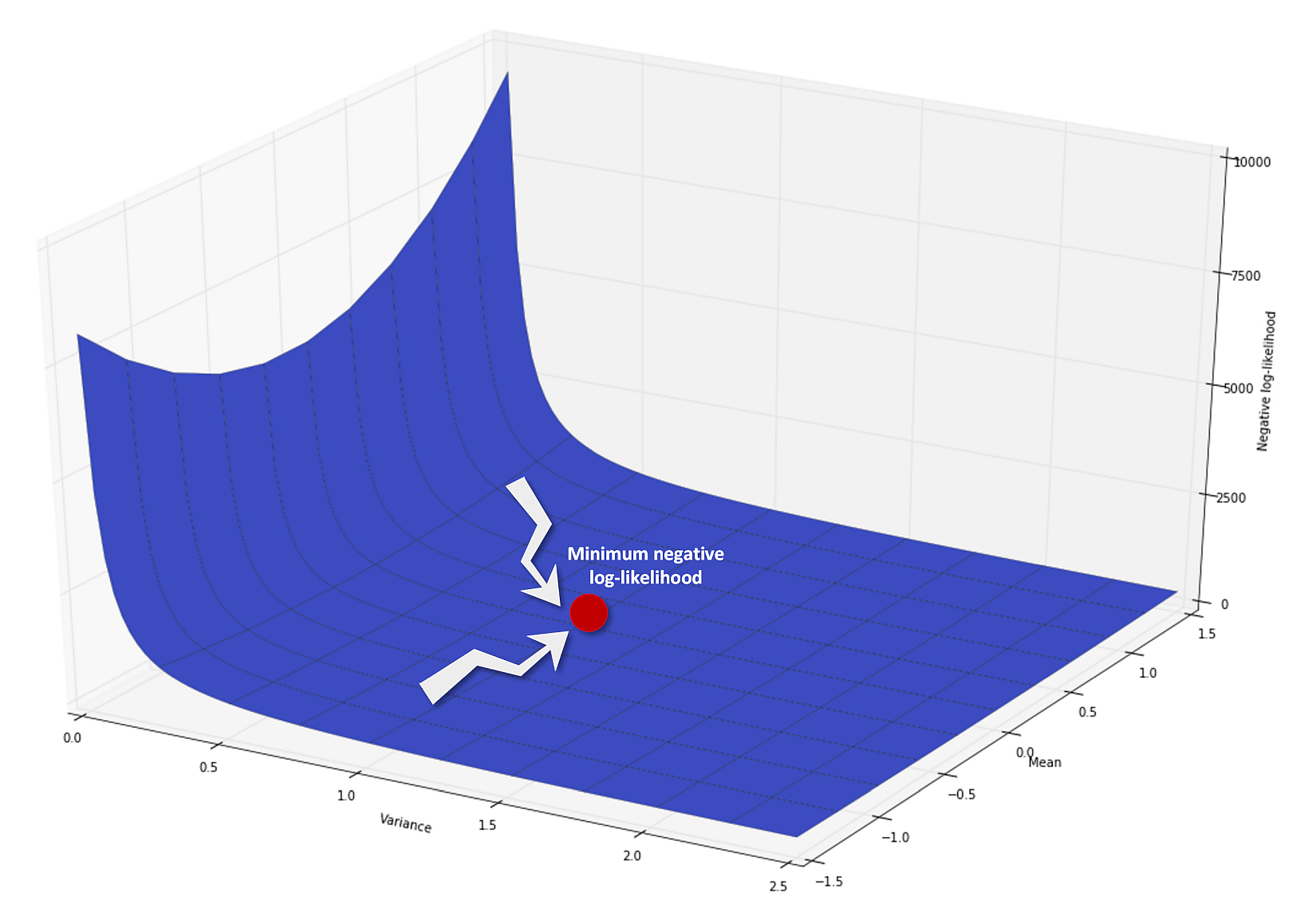

$$logL(\mu,{\sigma}^2 \mid \mathbf{X}) = logP(\mathbf{X} \mid \mu,{\sigma}^2) = \sum_{i}{log {\frac {1} {\sqrt{2\pi{\sigma}^2}} e^{- \frac {(x_i - \mu)^2} {2{\sigma}^2 } } }}$$

A graph of the negative log-likelihood function is plotted next. The global minimum of this function corresponds to an optimal likelihood given a certain distribution. It doesn't mean that the problem has been completely solved, because the first step of this algorithm is determining an expectation, which must be always realistic. The likelihood function, however, is quite sensitive to wrong distributions because it can easily get close to zero when the probabilities are low. For this reason, maximum-likelihood (ML) learning is often preferable to MAP learning, which needs Apriori distributions and can fail when they are not selected in the most appropriate way:接下来绘制负对数似然函数的图形。该函数的全局极小值对应于特定分布的最优似然。这并不意味着问题已经完全解决了,因为这个算法的第一步是确定一个期望,这个期望必须始终是现实的。然而,似然函数对错误的分布非常敏感,因为当概率很低时,它很容易接近于零。因此,最大似然(ML)学习通常比MAP学习更可取,MAP学习需要先验分布,如果没有以最适当的方式进行选择,则可能失败:

This approach has been applied to a specific distribution family (which is indeed very easy to manage), but it also works perfectly when the model is more complex. Of course, it's always necessary to have an initial awareness about how the likelihood should be determined because more than one feasible family can generate the same dataset. In all these cases, Occam's razor is the best way to proceed: the simplest hypothesis should be considered first. If it doesn't fit, an extra level of complexity can be added to our model. As we'll see, in many situations, the easiest solution is the winning one, and increasing the number of parameters or using a more detailed model can only add noise and a higher possibility of overfitting.这种方法已经应用于特定的分布族(特定分布族下确实很容易处理),但是当模型更复杂时,它也能很好地工作。当然,总是有必要对如何确定似然有一个初步的认识,因为多个可行的族可以生成相同的数据集。在所有这些情况下,奥卡姆剃刀是最好的方法:即首先应该考虑最简单的假设。如果它不适合,我们的模型可以增加额外的复杂性。正如我们将看到的,在许多情况下,最简单的解决方案是获胜的方案,增加参数的数量或使用更详细的模型只会增加噪音和过度拟合的可能性。

This approach has been applied to a specific distribution family (which is indeed very easy to manage), but it also works perfectly when the model is more complex. Of course, it's always necessary to have an initial awareness about how the likelihood should be determined because more than one feasible family can generate the same dataset. In all these cases, Occam's razor is the best way to proceed: the simplest hypothesis should be considered first. If it doesn't fit, an extra level of complexity can be added to our model. As we'll see, in many situations, the easiest solution is the winning one, and increasing the number of parameters or using a more detailed model can only add noise and a higher possibility of overfitting.这种方法已经应用于特定的分布族(特定分布族下确实很容易处理),但是当模型更复杂时,它也能很好地工作。当然,总是有必要对如何确定似然有一个初步的认识,因为多个可行的族可以生成相同的数据集。在所有这些情况下,奥卡姆剃刀是最好的方法:即首先应该考虑最简单的假设。如果它不适合,我们的模型可以增加额外的复杂性。正如我们将看到的,在许多情况下,最简单的解决方案是获胜的方案,增加参数的数量或使用更详细的模型只会增加噪音和过度拟合的可能性。

2.4 Elements of information theory信息理论因素

A machine learning problem can also be analyzed in terms of information transfer or exchange. Our dataset is composed of n features, which are considered independent (for simplicity, even if it's often a realistic assumption) drawn from n different statistical distributions. Therefore, there are n probability density functions $p_i (x)$ which must be approximated through other n $q_i (x)$ functions. In any machine learning task, it's very important to understand how two corresponding distributions diverge and what is the amount of information we lose when approximating the original dataset.机器学习问题也可以从信息传递或交换的角度进行分析。我们的数据集是由n个特征组成的,它们被认为是独立的(为了简单起见,即使这通常是一个现实的假设),这些特征来自于n个不同的统计分布。因此,有n个概率密度函数$p_i (x)$必须通过其他n个$q_i (x)$函数逼近。在任何机器学习任务中,理解两个对应的分布是如何偏离的以及在接近原始数据集时我们丢失了多少信息量是非常重要的。

【 这里补充信息量的概念。 首先是信息量。假设我们听到了两件事,分别如下:

- 事件A:巴西队进入了2018世界杯决赛圈。

- 事件B:中国队进入了2018世界杯决赛圈。

仅凭直觉来说,显而易见事件B的信息量比事件A的信息量要大。究其原因,是因为事件A发生的概率很大,事件B发生的概率很小。所以当越不可能的事件发生了,我们获取到的信息量就越大。越可能发生的事件发生了,我们获取到的信息量就越小。那么信息量应该和事件发生的概率有关。

假设X是一个离散型随机变量,其取值集合为$\chi$,概率分布函数$p(x)=Pr(X=x),x∈\chi$,则定义事件$X=x_0$的信息量为:

$$I(x_0)=-log(p(x_0))$$

概率为1的事件发生毫无信息量,概率为0时间发生无穷信息量,概率大的事件发生的信息量小于概率小的事件发生的信息量。所以信息量定义的函数需要单调递减且f(0)=∞、f(1)=0。

熵其实是信息量的期望。 】

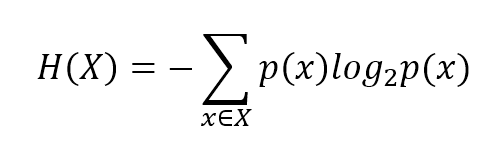

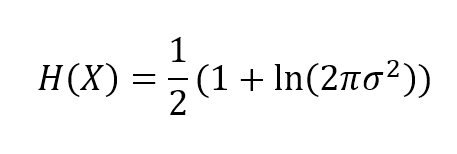

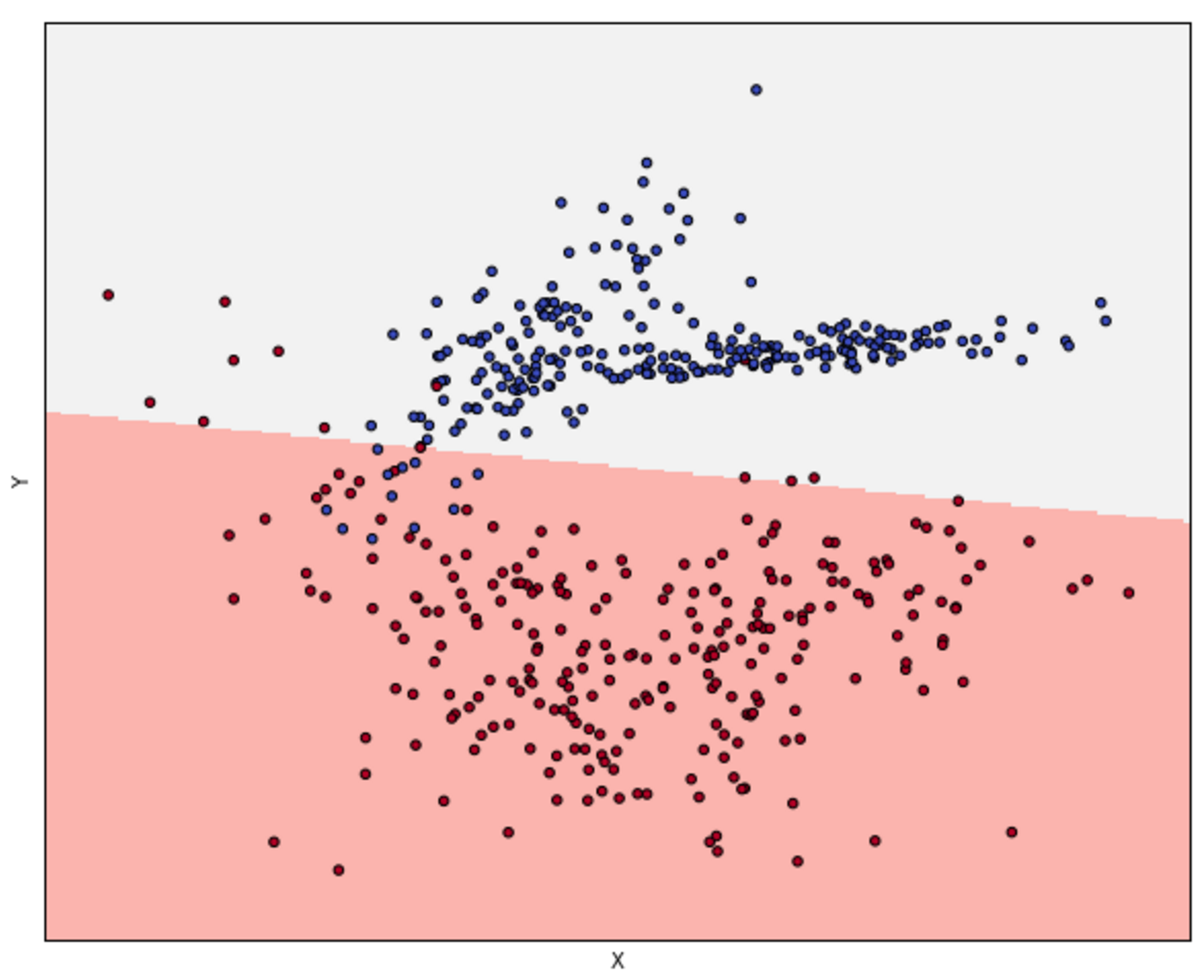

The most useful measure is called entropy:最有用的测量叫做熵:

$$H(X)=-\sum_{x \in X}{p(x)\log p(x)}$$

This value is proportional to the uncertainty of X and it's measured in bits (if the logarithm has another base, this unit can change too). For many purposes, a high entropy is preferable, because it means that a certain feature contains more information. For example, in tossing a coin (two possible outcomes), H(X) = 1 bit, but if the number of outcomes grows, even with the same probability, H(X) also does because of a higher number of different values and therefore increased variability. It's possible to prove that for a Gaussian distribution (using natural logarithm):熵值与X的不确定性成正比(作者这里的意思是用熵来代表不确定度。但请注意,熵不涉及变量的大小,甚至,比如变量是分类的时候,变量可以用非数值表示,此时方差不存在,那么谈不上熵与方差有什么关系。比如:等可能取红球和黑球,那么p(红球)=p(黑球)=0.5,那么对数底取2时熵为1,此时并没有什么方差。即使分类变量有确定的数值表示形式,也不一定熵高者方差大。比如,等可能取整数1到9,那么方差为20/3,对数底取2时熵为9ln9;等可能取整数1、9,那么方差为16,熵为2ln2;等可能取整数1到3,那么方差为2/3,熵为3ln3。对于连续变量,若服从高斯分布,那么,方差大者熵大,熵大者方差也大。),它是以位来度量的(也可采用其他单位来度量,取决于以什么为对数底了,比方也可以e为底)。在很多情况下,高熵是可取的,因为它意味着某个特性包含更多的信息。例如,在抛硬币(两种可能的结果)时,H(X)=1位,但是如果结果的数量增加了,即使概率相同,H(X)也会增加,因为不同值的数量增加了,因此变异性也增加了(抛硬币不是合适的例子,况且,抛硬币例子结果只有正面、反面、竖起来,结果数量最大为3,结果数量从2变为3概率不可能相同。我举一个更恰当的例子吧。情况1, X等可能取-1、1;情况2,Y等可能取自然数-4到4。那么H(X)=1、H(Y)=3。这里,结果数量越多(变异越大),则熵越大。)。(用自然对数)可以证明对高斯分布有:

$$H(X)=\frac {1}{2}(1+\ln{(2\pi{\sigma}^2)})$$

【

高斯分布熵与方差关系之证明:

$$p(x)=\frac {1} {\sqrt{2\pi{\sigma}^2}} e^{- \frac {(x_i - \mu)^2} {2{\sigma}^2}}$$

$$\int_{-\infty}^{+\infty} { e^{-x^2}} \ dx = \sqrt{\pi}$$

$$\begin{align}

H(X) & = -\int { p(x)\ln p(x)} \ dx \\

& = -\int { \frac {1} {\sqrt{2\pi{\sigma}^2}} e^{- \frac {(x - \mu)^2} {2{\sigma}^2}}\ln {\frac {1} {\sqrt{2\pi{\sigma}^2}} e^{- \frac {(x - \mu)^2} {2{\sigma}^2}}}} \ dx \\

& = -\frac {1} {\sqrt{2\pi{\sigma}^2}}\int { e^{- \frac {(x - \mu)^2} {2{\sigma}^2}}\ln {\frac {1}{\sqrt{2\pi{\sigma}^2}} e^{- \frac {(x - \mu)^2} {2{\sigma}^2}}}} \ dx \\

& = \frac {\ln {\sqrt{2\pi{\sigma}^2}}} {\sqrt{2\pi{\sigma}^2}} \int {e^{- \frac {(x - \mu)^2} {2{\sigma}^2}}} \ dx + \frac {1} {\sqrt{2\pi{\sigma}^2}}\int { \frac {(x - \mu)^2} {2{\sigma}^2} e^{-\frac {(x - \mu)^2} {2{\sigma}^2}}} \ dx \\

& = \frac {\ln {\sqrt{2\pi{\sigma}^2}}} {\sqrt{2\pi{\sigma}^2}} \int {e^{- y^2}} \ d(\sqrt{2}\sigma y + \mu) + \frac {1} {\sqrt{2\pi{\sigma}^2}}\int { y^2 e^{-y^2}} \ d(\sqrt{2}\sigma y + \mu) \\

& = \frac {\ln {\sqrt{2\pi{\sigma}^2}}} {\sqrt{\pi}} \int_{-\infty}^{+\infty} {e^{- y^2}} \ dy + \frac {1} {\sqrt{\pi}} \int_{-\infty}^{+\infty} { y^2 e^{-y^2}} \ dy \\

& = \ln {\sqrt{2\pi{\sigma}^2}} + \frac {1} {\sqrt{\pi}} \int_{-\infty}^{+\infty} { -\frac{1}{2} y } \ d(e^{-y^2}) \\

& = \ln {\sqrt{2\pi{\sigma}^2}} + \frac {1} {\sqrt{\pi}}(-\frac{1}{2}) \int_{-\infty}^{+\infty} { y } \ d(e^{-y^2}) \\

& = \ln {\sqrt{2\pi{\sigma}^2}} + \frac {1} {\sqrt{\pi}}(-\frac{1}{2})(\left. ye^{-y^2} \right|_{-\infty}^{+\infty} - \int_{-\infty}^{+\infty} {e^{-y^2}} \ dy) \\

& = \ln {\sqrt{2\pi{\sigma}^2}} + \frac {1} {\sqrt{\pi}}(-\frac{1}{2})(0 - \sqrt{\pi}) \\

& = \frac {1}{2}(1+\ln{(2\pi{\sigma}^2)})

\end{align}$$

用到了分部积分知识。

】

【 对于经验分布,证明方差大者熵大:可证明方差增大时熵增大,以证明方差大者熵大。 】

【

注意:

1、熵只依赖于随机变量的分布,与随机变量取值无关,所以也可以将X的熵记作H(p)。

2、令0log0=0(因为某个取值概率可能为0)。

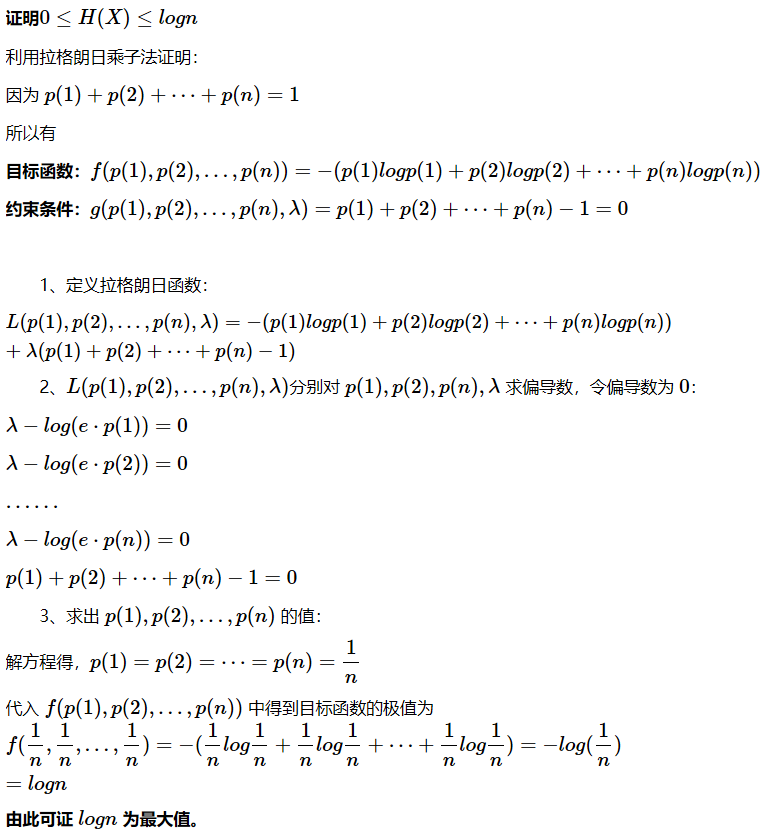

3、对于离散型随机变量,随机变量的取值个数越多,状态数也就越多,信息熵就越大,混乱程度就越大。当随机分布为均匀分布时,熵最大,且0≤H(X)≤logn。(P(X)在0到1之间那么显然熵大于等于0,当X只有一个取值时,熵取最小值0;熵小于等于$\log n$,当均匀分布时熵取最大值$\log n$(可用拉格朗日乘子法证明https://www.cnblogs.com/kyrieng/p/8694705.html )。)

4、对于连续性随机变量,熵等于概率密度与对数概率密度的乘积的积分。

】

4、对于连续性随机变量,熵等于概率密度与对数概率密度的乘积的积分。

】

So, the entropy is proportional to the variance, which is a measure of the amount of information carried by a single feature. In the next chapter, we're going to discuss a method for feature selection based on variance threshold. Gaussian distributions are very common, so this example can be considered just as a general approach to feature filtering: low variance implies low information level and a model could often discard all those features.所以,(对于高斯分布,)熵与方差成正比,方差是衡量单个特征携带的信息量的一个指标。在下一章中,我们将讨论一种基于方差阈值的特征选择方法。高斯分布是非常常见的,所以这个例子可以看作是一种特征过滤的通用方法:低方差意味着低信息水平,一个模型常常会丢弃所有这些特征(即低方差低信息水平的特征)。

对于服从高斯分布的特征,自然有充足理由根据方差选择特征,因为高斯分布方差大者信息量高。连续变量或多或少均近似高斯分布。那么对于含有计数count特征、分类特征的数据是否可以根据特征方差大小选择特征呢?。这仍然是一个需要深究的话题。留待时间充裕再深究吧!!!! 可以肯定的是,可以根据特征的熵选择特征。无论是连续变量特征、计数特征、和分类特征,根据数据集计算熵都是很方便的(连续特征体现在数据集上仍是离散的,某个指标,获取数据阶段精度不可能无限。),熵更大的特征更重要。

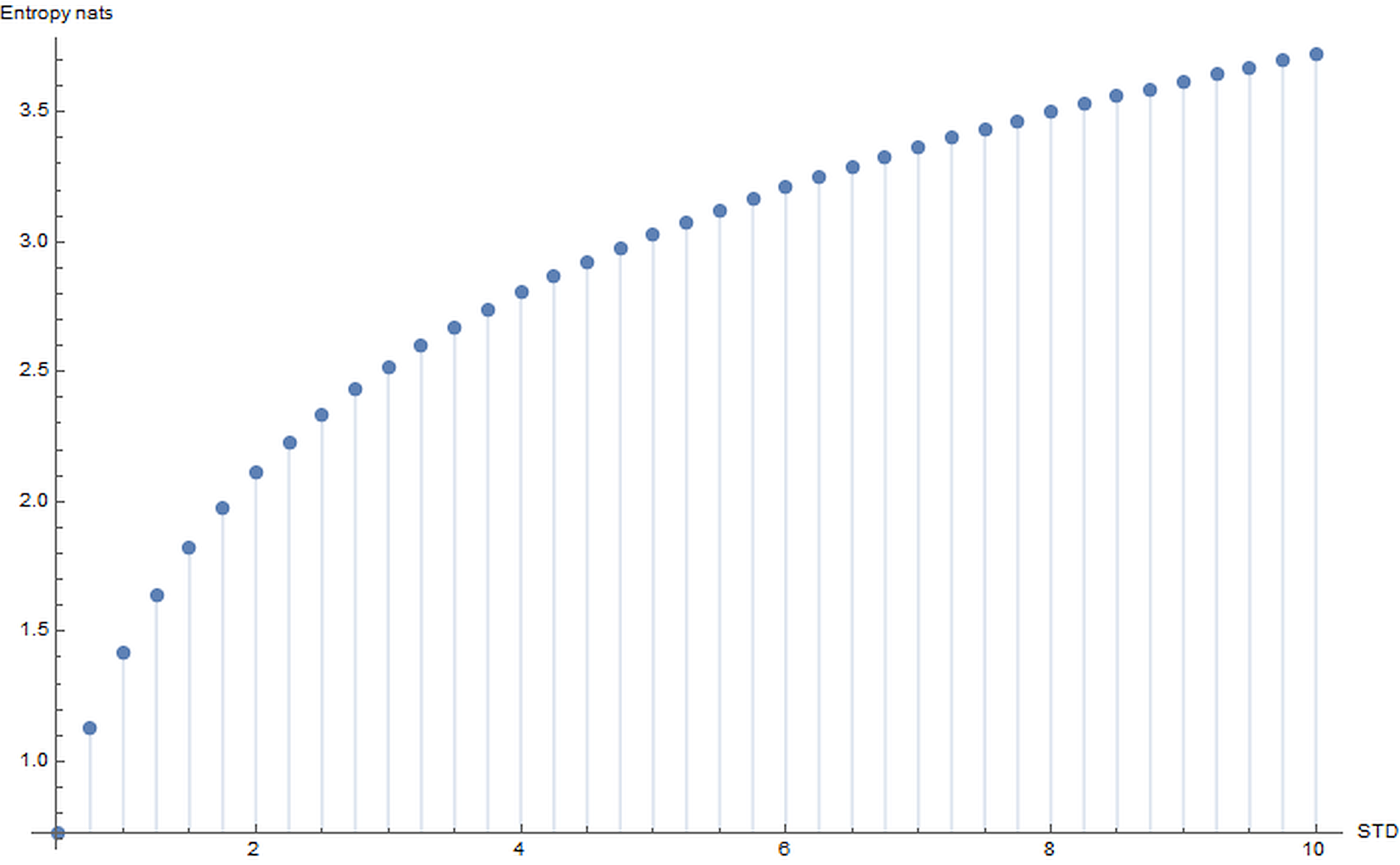

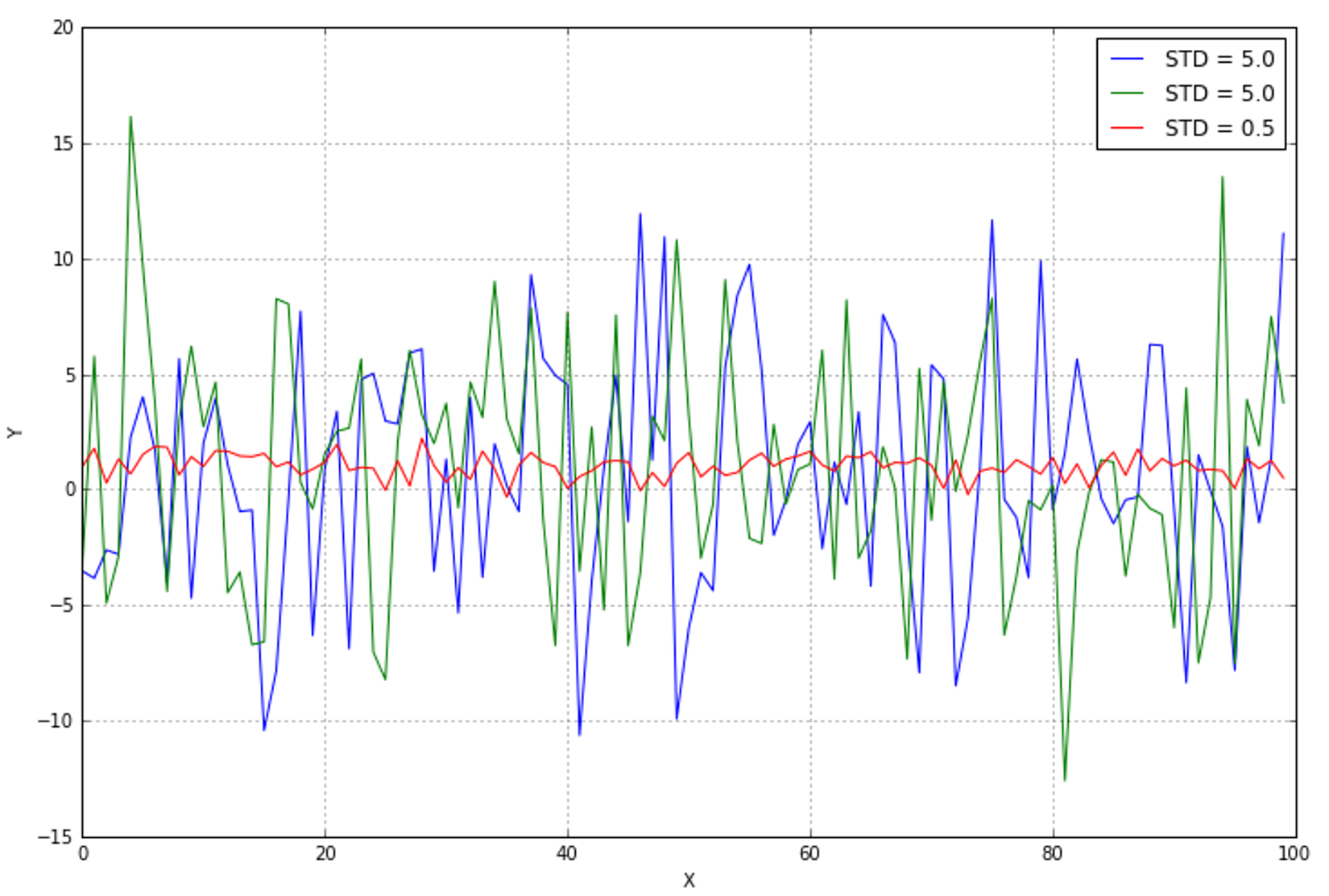

In the following figure, there's a plot of H(X) for a Gaussian distribution expressed in nats (which is the corresponding unit measure when using natural logarithms):下图是高斯分布的H(X)曲线,以nats表示(这是使用自然对数时的对应单位度量):

For example, if a dataset is made up of some features whose variance (here it's more convenient talking about standard deviation) is bounded between 8 and 10 and a few with STD < 1.5, the latter could be discarded with a limited loss in terms of information. These concepts are very important in real-life problems when large datasets must be cleaned and processed in an efficient way.例如,如果一个数据集是由一些特征组成的,这些特征的方差(在这里讨论标准差更方便)在8到10之间,一些STD < 1.5,后者可以被丢弃,信息损失有限。信息熵概念对大数据集很重要,大数据集必须有效清洗处理。

For example, if a dataset is made up of some features whose variance (here it's more convenient talking about standard deviation) is bounded between 8 and 10 and a few with STD < 1.5, the latter could be discarded with a limited loss in terms of information. These concepts are very important in real-life problems when large datasets must be cleaned and processed in an efficient way.例如,如果一个数据集是由一些特征组成的,这些特征的方差(在这里讨论标准差更方便)在8到10之间,一些STD < 1.5,后者可以被丢弃,信息损失有限。信息熵概念对大数据集很重要,大数据集必须有效清洗处理。

用信息理论怎么理解特征缩放呢,很多算法要求特征缩放,显然这将混淆各特征的信息量? 我是这么理解的,暂时不知道这个理解正确与否。基于协方差矩阵过滤特征(如PCA)是采用信息理论选取特征;也可以根据模型(或者说根据目标变量)来确定特征信息含量比例(确定每个特征对应的参数),有些算法需要对特征进行缩放,有些也不一定要缩放,但无论如何,缩放没有坏处,将改善条件数,使算法加速收敛。缩放后的特征具有相同的方差,这等于放弃未见目标变量前的先入为主信息。各特征对目标变量的信息贡献比例由缩放比例和算法中特征对应的参数共同决定。

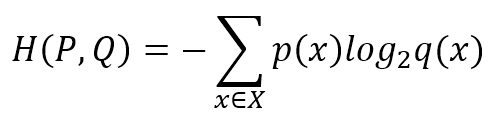

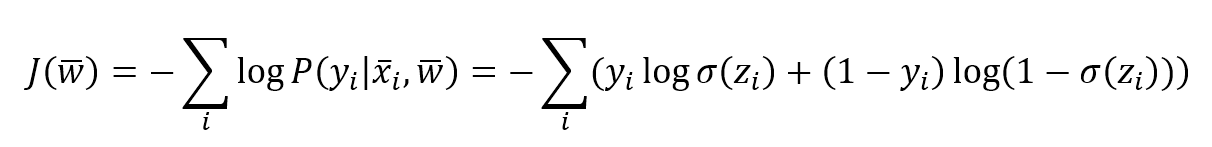

If we have a target probability distribution p(x), which is approximated by another distribution q(x), a useful measure is cross-entropy between p and q (we are using the discrete definition as our problems must be solved using numerical computations):如果我们有一个目标概率分布p(x),用另一个分布q(x)近似,一个有用的度量是p和q之间的交叉熵(我们使用离散定义,因为我们的问题必须用数值计算来解决):

$$H(P,Q) = -\sum_{x \in X}{p(x)log_2q(x)}$$

If the logarithm base is 2, it measures the number of bits requested to decode an event drawn from P when using a code optimized for Q. In many machine learning problems, we have a source distribution and we need to train an estimator to be able to identify correctly the class of a sample. If the error is null, P = Q and the cross-entropy is minimum (corresponding to the entropy H(P)). However, as a null error is almost impossible when working with Q, we need to pay a price of H(P, Q) bits, to determine the right class starting from a prediction. Our goal is often to minimize it, so to reduce this price under a threshold that cannot alter the predicted output if not paid. In other words, think about a binary output and a sigmoid function: we have a threshold of 0.5 (this is the maximum price we can pay) to identify the correct class using a step function (0.6 -> 1, 0.1 -> 0, 0.4999 -> 0, and so on). As we're not able to pay this price, since our classifier doesn't know the original distribution, it's necessary to reduce the cross-entropy under a tolerable noise-robustness threshold (which is always the smallest achievable one).如果以2为底的对数,上式测量的是用Q解码取自P的事件的位数。在许多机器学习问题中,我们有一个源分布,我们需要训练估计器来正确地识别样本的类。若误差是零,那P=Q且交叉熵最小(即等于熵H(P))。然而,当使用Q时零错误几乎是不可能的,我们需要付出H(P,Q)位的代价,以预测确定正确的类。我们的目标通常是最小化交叉熵H(P,Q),以减少代价到特定阈值,如不付出这个代价就不能得到预测输出。换句话说,设想二分类输出和sigmoid函数:我们有一个0.5的阈值(这是我们可以支付的最大代价),使用一个步骤函数(0.6-> 1、0.1->0、0.4999->0等等)来识别正确的分类。由于我们无法支付这个代价,既然分类器不知道原始分布,所以有必要在一个可容忍的噪声-鲁棒性阈值(通常是最小可实现的阈值)下降低交叉熵。

【 为什么交叉熵足够小Q就可很好描述P?

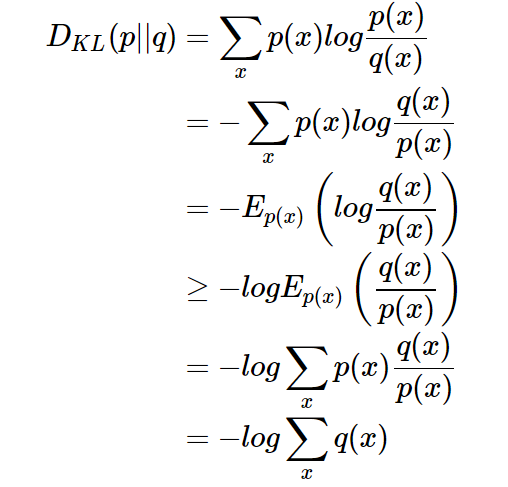

3 相对熵(KL散度)

相对熵又称KL散度,如果我们对于同一个随机变量X有两个单独的概率分布P(X)和Q(X),我们可以使用KL散度(Kullback-Leibler (KL) divergence)来衡量这两个分布的差异。

wiki对相对熵的定义:

> In the context of machine learning, $D_{KL} (P‖Q)$ is often called the information gain achieved if P is used instead of Q.

即如果用P而不是用Q来描述问题,得到的信息增益。

在机器学习中,假设P表示实际分布,比如[1,0,0]表示当前样本属于第一类。Q用来表示模型所预测的分布,比如[0.6,0.2,0.2]。

显然,如果用P来描述样本,那非常完美。而用Q来描述,虽可大致描述,但是不是那么的完美,信息量不足,需要额外的一些“信息增益”才能达到和P一样完美的描述。如果我们的Q通过反复训练,也能完美的描述样本,那么就不再需要额外的“信息增量”,Q等价于P。

KL散度的计算公式:

$$D_{KL}(P||Q) = \sum_{x \in X}{p(x)log{\frac{p(x)}{q(x)}}} = E_{p(x)}{\frac{p(x)}{q(x)}}$$

性质: 1、如果p(x)和q(x)两个分布相同,那么相对熵等于0

2、$D_{KL} (P‖Q) \ne D_{KL} (Q‖P)$,相对熵具有不对称性。大家可以举个简单例子算一下。

3、$D_{KL} (P‖Q)\ge 0$证明如下(利用Jensen不等式https://en.wikipedia.org/wiki/Jensen%27s_inequality) :

而概率和为1即

而概率和为1即$\sum{p(x_i)}=1$,证毕。

4、$D_{KL} (P‖Q)=H(P,Q)-H(P)$,KL散度=交叉熵-实际分布的熵。实际分布的熵是正的常数,交叉熵和KL散度是变量,那么当P=Q时交叉熵和KL散度同时取得最小值。

由性质4可知:交叉熵足够小Q就可很好描述P。 】

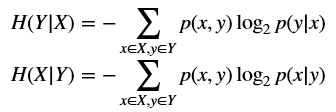

In order to understand how a machine learning approach is performing, it's also useful to introduce a conditional entropy or the uncertainty of Y given the knowledge of X:为理解机器学习方法的表现,引入条件熵(或称之为已知X时Y的不确定性)很有用:

$$H(Y|X)=-\sum_{x \in X, y \in Y}{p(x,y)\log_2 {p(y|x)}}$$

$$H(X|Y)=-\sum_{x \in X, y \in Y}{p(x,y)\log_2 {p(x|y)}}$$

【

条件熵H(Y|X)表示在已知随机变量X的条件下随机变量Y的不确定性。条件熵H(Y|X)定义为X给定条件下Y的条件概率分布的熵对X的数学期望:

$$

\begin{align}

H(Y|X) &=\sum_{x \in X}{p(x)H(Y|X=x)} \\

& =-\sum_{x \in X}{p(x)\sum_{y \in Y}{p(y|x)\log_2 {p(y|x)}}} \\

& =-\sum_{x \in X}{\sum_{y \in Y}{p(x,y)\log_2 {p(y|x)}}} \\

& =-\sum_{x \in X,y \in Y}{p(x,y)\log_2 {p(y|x)}} \\

\end{align}

$$

$$

\begin{align}

H(X|Y) &=\sum_{y \in Y}{p(y)H(X|Y=y)} \\

& =-\sum_{y \in Y}{p(y)\sum_{x \in X}{p(x|y)\log_2 {p(x|y)}}} \\

& =-\sum_{y \in Y}{\sum_{x \in X}{p(x,y)\log_2 {p(x|y)}}} \\

& =-\sum_{x \in X,y \in Y}{p(x,y)\log_2 {p(x|y)}} \\

\end{align}

$$

联合熵、条件熵、边际熵关系:

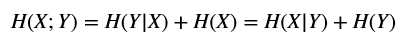

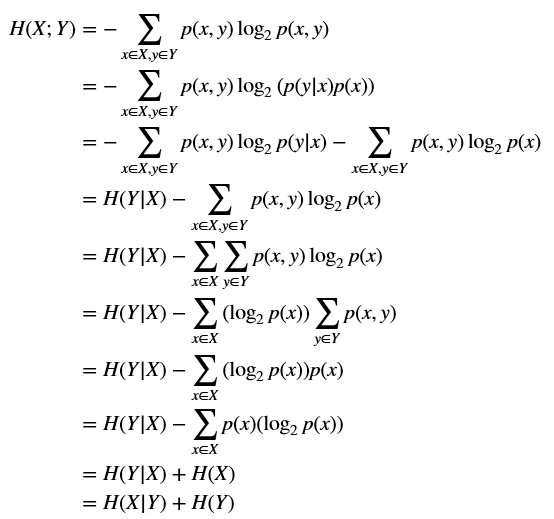

$$H(X;Y)=H(Y|X)+H(X)=H(X|Y)+H(Y)$$

联合熵、条件熵、边际熵关系之证明:

$$

\begin{align}

H(X;Y) &=-\sum_{x \in X, y \in Y}{p(x,y)\log_2 {p(x,y)}} \\

& =-\sum_{x \in X, y \in Y}{p(x,y)\log_2 {(p(y|x)p(x))}} \\

& =-\sum_{x \in X, y \in Y}{p(x,y)\log_2 {p(y|x)}}-\sum_{x \in X, y \in Y}{p(x,y)\log_2 {p(x)}} \\

& =H(Y|X)-\sum_{x \in X, y \in Y}{p(x,y)\log_2 {p(x)}} \\

& =H(Y|X)-\sum_{x \in X}{\sum_{y \in Y}{p(x,y)\log_2 {p(x)}}} \\

& =H(Y|X)-\sum_{x \in X}{(\log_2 {p(x))}\sum_{y \in Y}{p(x,y)}} \\

& =H(Y|X)-\sum_{x \in X}{(\log_2 p(x))p(x)} \\

& =H(Y|X)-\sum_{x \in X}{p(x)(\log_2 p(x))} \\

& =H(Y|X)+H(X) \\

& =H(X|Y)+H(Y) \\

\end{align}

$$

】

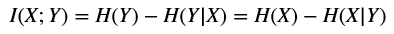

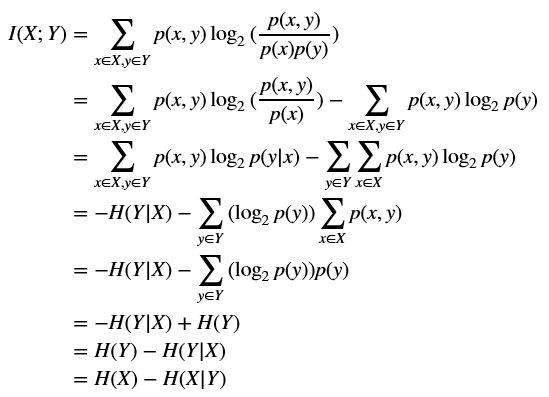

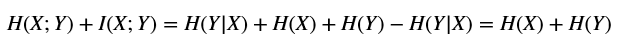

Through this concept, it's possible to introduce the idea of mutual information I(X;Y), which is the amount of information shared by both variables and therefore, the reduction of uncertainty about Y provided by the knowledge of X:通过这个概念,可以引入互信息I(X;Y)的概念,这是两个变量共享的信息量,即,Y的不确定减去已知X时Y的不确定性:

$$I(X;Y)=H(Y)-H(Y|X)=H(X)-H(X|Y)$$

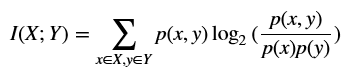

【

互信息的定义:

在概率论和信息论中,两个随机变量的互信息(Mutual Information,简称MI)或转移信息(transinformation)是变量间相互依赖性的量度。

$$I(X;Y)=\sum_{x \in X, y \in Y}{p(x,y)\log_2 {(\frac{p(x,y)}{p(x)p(y)})}}$$

互信息、边际熵、条件熵关系的证明:

$$

\begin{align}

I(X;Y) &=\sum_{x \in X, y \in Y}{p(x,y)\log_2 {(\frac{p(x,y)}{p(x)p(y)})}} \\

& =\sum_{x \in X, y \in Y}{p(x,y)\log_2 {(\frac{p(x,y)}{p(x)})}}-\sum_{x \in X, y \in Y}{p(x,y)\log_2 {p(y)}} \\

& =\sum_{x \in X, y \in Y}{p(x,y)\log_2 {p(y|x)}}-\sum_{y \in Y}{\sum_{x \in X}{p(x,y)\log_2 {p(y)}}} \\

& =-H(Y|X)-\sum_{y \in Y}{(\log_2 {p(y)})\sum_{x \in X}{p(x,y)}} \\

& =-H(Y|X)-\sum_{y \in Y}{(\log_2 {p(y)})p(y)} \\

& =-H(Y|X)+H(Y) \\

& =H(Y)-H(Y|X) \\

& =H(X)-H(X|Y) \\

\end{align}

$$

联合熵与互信息之和等于边际熵之和:

$$H(X;Y)+I(X;Y)=H(Y|X)+H(X)+H(Y)-H(Y|X)=H(X)+H(Y)$$

边际熵、条件熵、联合熵、互信息之间关系示意图:

】

】

Intuitively, when X and Y are independent, they don't share any information. However, in machine learning tasks, there's a very tight dependence between an original feature and its prediction, so we want to maximize the information shared by both distributions. If the conditional entropy is small enough (so X is able to describe Y quite well), the mutual information gets close to the marginal entropy H(X), which measures the amount of information we want to learn.直觉上,若X与Y独立,它们之间不共享任何信息。然而,在机器学习任务中,一个原始特性和它的预测之间有非常紧密的依赖关系,所以我们想要最大化两个分布共享的信息。如果条件熵足够小(因此X可以很好地描述Y),互信息就会接近边际熵H(Y),边际熵H(Y)衡量我们想要学习的信息量。

【 为什么条件熵足够小X就可很好描述Y? 答:对于特定机器学习问题,数据集确定,那么X和Y的熵即边际熵一定。条件熵足够小,那么互信息足够大,那么X与Y紧密依赖,那么X可以很好地描述Y。 】

An interesting learning approach based on the information theory, called Minimum Description Length (MDL), is discussed in Russel S., Norvig P., Artificial Intelligence: A Modern Approach, Pearson, where I suggest you look for any further information about these topics.最小化描述长度(MDL)是基于信息理论的一个有趣学习方法。

datetime:2018/11/16 22:51

2.5 References参考文献

2.6 Summary总结

3 Feature Selection and Feature Engineering特征选择与特征工程

3.1 scikit-learn toy datasets scikit-learn玩具数据集

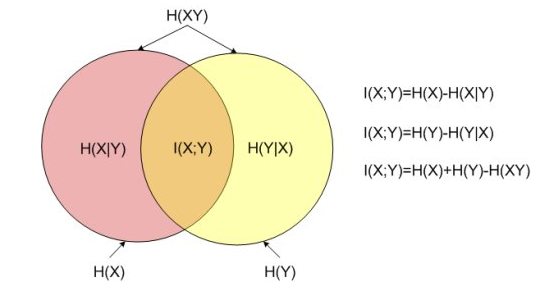

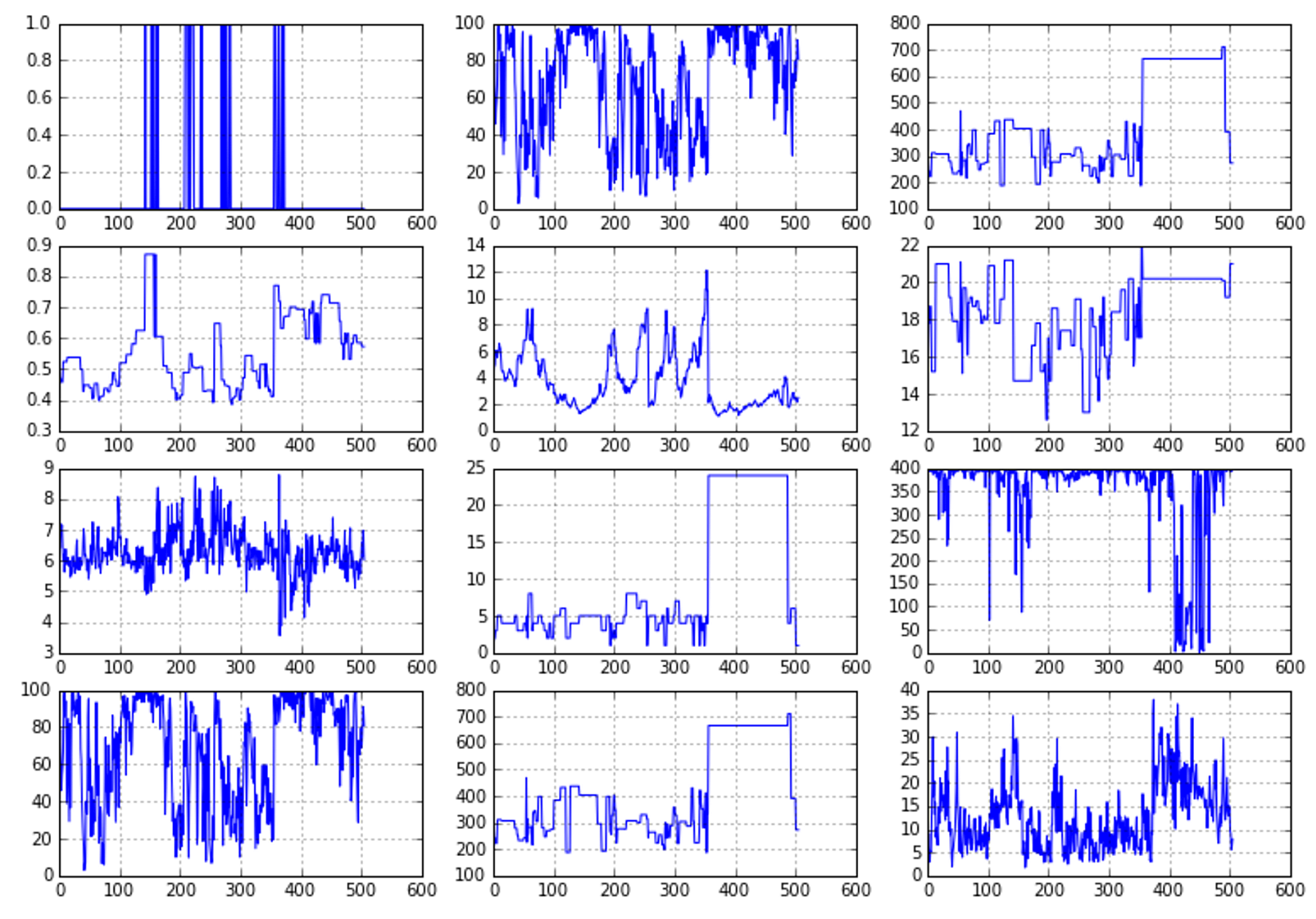

3.2 Creating training and test sets建立训练测试集

In the following figure, there's a schematic representation of process that split datasets into training and test sets:在下面的图中,有划分训练、测试集过程的示意图:

There are two main rules in performing such an operation:进行这一操作的两条主要规则:

There are two main rules in performing such an operation:进行这一操作的两条主要规则:

- Both datasets must reflect the original distribution训练、测试集均应反映原始分布

- The original dataset must be randomly shuffled before the split phase in order to avoid a correlation between consequent elements为了避免后续元素之间的相关性,在分离阶段之前必须随机打乱原始数据集

3.3 Managing categorical data处理分类数据

Categorical target variables representing by progressive integer number have drawback: all labels are turned into sequential numbers. A classifier which works with real values will then consider similar numbers according to their distance, without any concern for semantics. For this reason, it's often preferable to use so-called one-hot encoding。用累进整数表示的分类目标变量有缺点:所有的标签都变成了连续数字。使用实值的分类器将根据距离考虑相似的数字,而不考虑语义。因此,最好使用独热编码。

对于分类特征呢?按Feature_Engineering_for_Machine_Learning,特征中的分类变量也最好不要停留在累进整数。当然,需要考虑计算消耗。

3.4 Managing missing features处理缺失特征

Sometimes a dataset can contain missing features, so there are a few options that can be taken into account:有时一个数据集可能包含缺失的特性,因此有一些选项可以考虑:

- Removing the whole line去掉整行

- Creating sub-model to predict those features创建子模型来预测这些(缺失值)特征

- Using an automatic strategy to input them according to the other known values根据其他已知值自动补全

The first option is the most drastic one and should be considered only when the dataset is quite large, the number of missing features is high, and any prediction could be risky. The second option is much more difficult because it's necessary to determine a supervised strategy to train a model for each feature and, finally, to predict their value. Considering all pros and cons, the third option is likely to be the best choice.第一个选择是最激烈的,只有当数据集相当大,缺失特性个数很多,任何预测都可能有风险时才应该考虑。 第二种选择要困难得多,因为有必要确定一种监督策略来训练每个特性的模型,并最终预测它们的值。考虑全部优缺点,第三种很可能是最优选择。

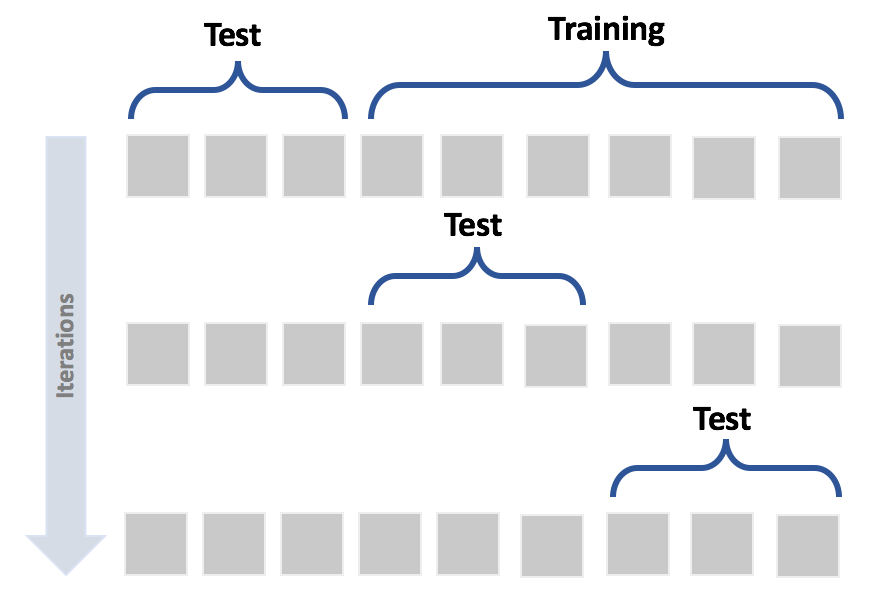

3.5 Data scaling and normalization数据缩放与正态化

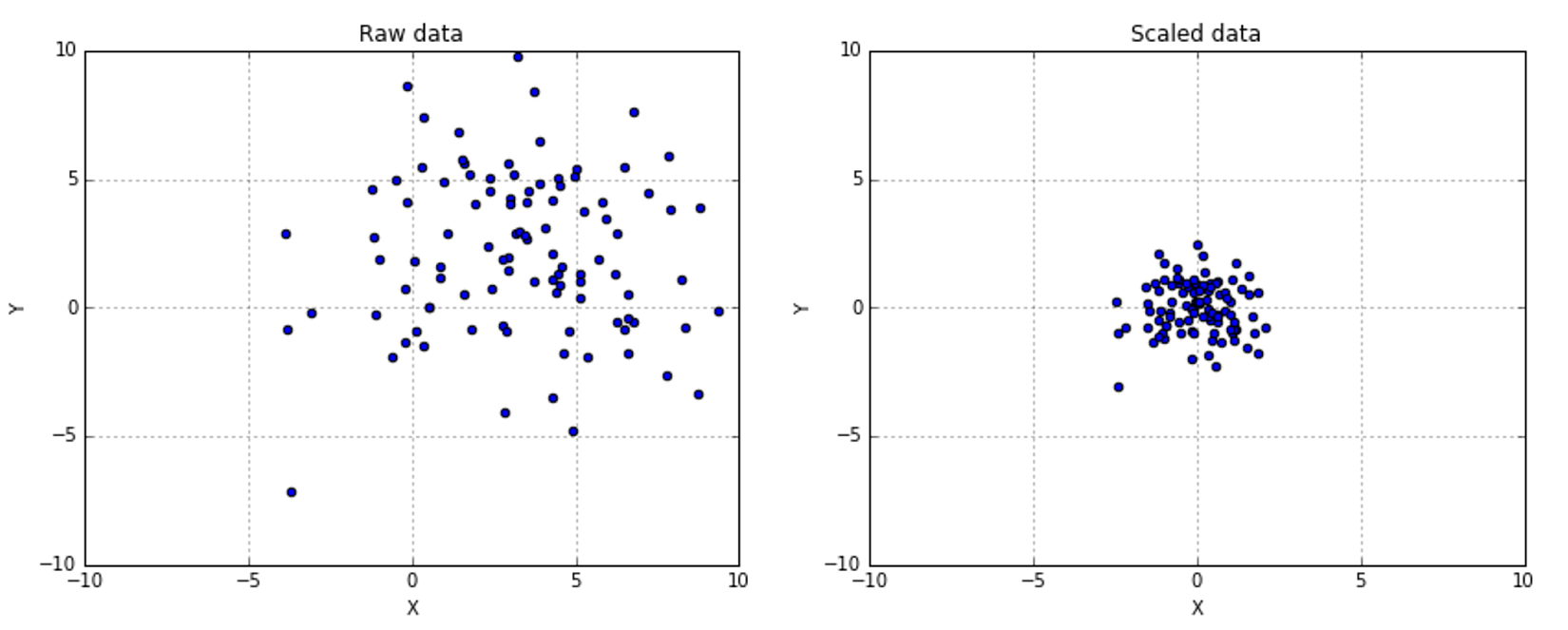

In the following figure, there's a comparison between a raw dataset and the same dataset scaled and centered:在下面的图中,将原始数据集和相同数据集经缩放并中心化进行比较:

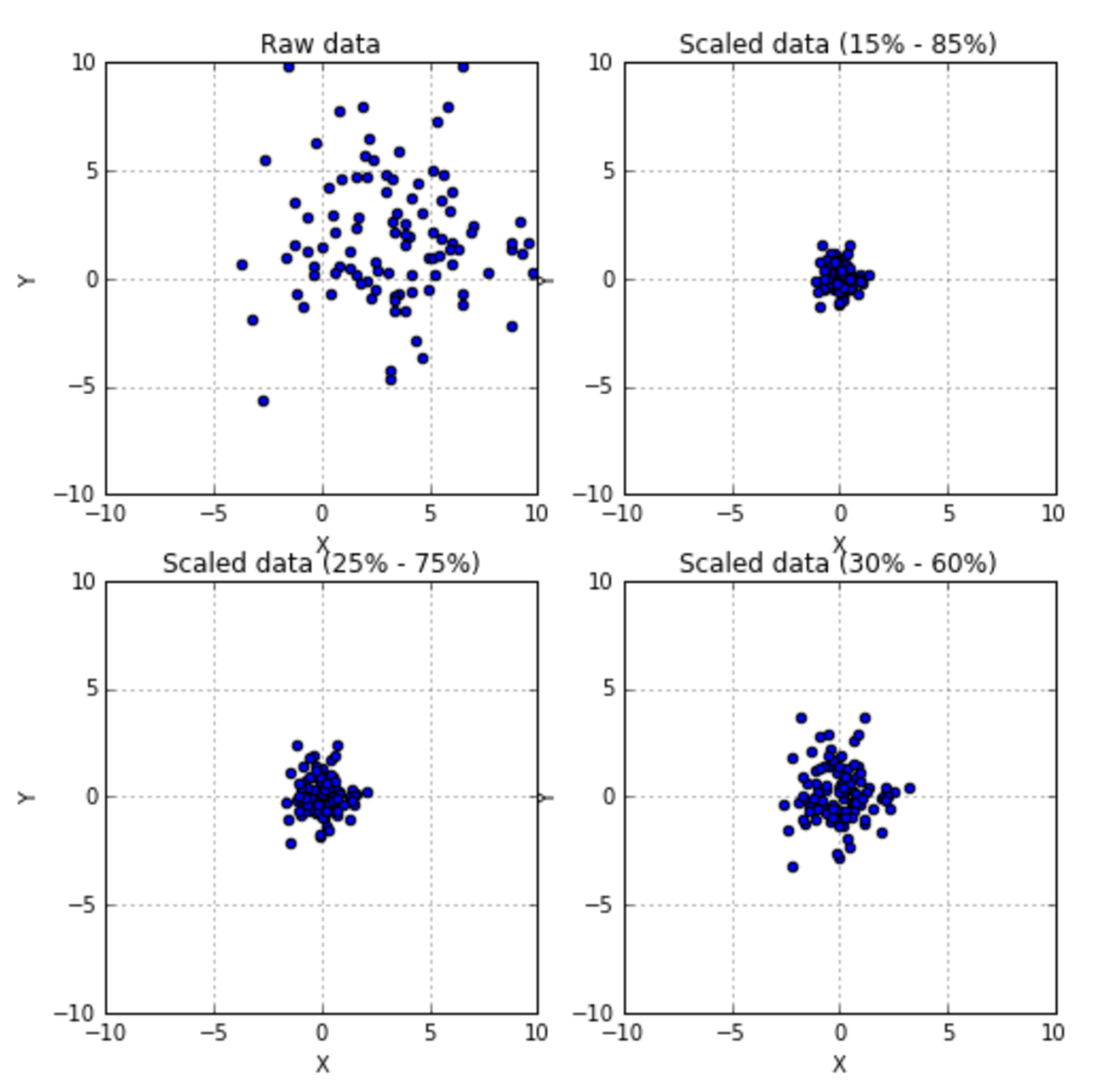

If you need a more powerful scaling feature, with a superior control on outliers and the possibility to select a quantile range, there's also the class RobustScaler.如果你需要一个更强大的缩放功能,对离群值有更好的控制并可选择分位数范围,那可以用

If you need a more powerful scaling feature, with a superior control on outliers and the possibility to select a quantile range, there's also the class RobustScaler.如果你需要一个更强大的缩放功能,对离群值有更好的控制并可选择分位数范围,那可以用RobustScaler。

The results are shown in the following figures:结果如下图所示:

scikit-learn also provides a class for per-sample normalization,

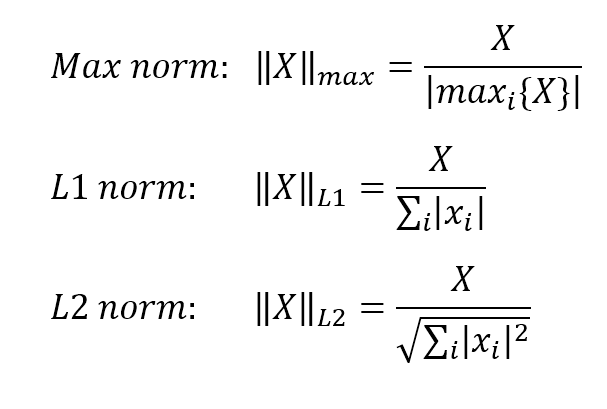

scikit-learn also provides a class for per-sample normalization, Normalizer. It can apply max, l1 and l2 norms to each element of a dataset. scikit-learn还提供了一个类,用于对每个样本进行规范化,即Normalizer。它可以对数据集的每个元素应用max(除以特征列的最大值)、l1(除以特征列的l1范数)和l2(除以特征列的l1范数)规范。

$$Max \ norm:{||X||}_{max}=\frac{X}{|max_{i} {\{X\}}|}$$

$$L1 \ norm:{||X||}_{L1}=\frac{X}{\sum_{i}{|X|}}$$

$$L2 \ norm:{||X||}_{L2}=\frac{X} {\sqrt{\sum_{i}{|X|^2}}}$$

3.6 Feature selection and filtering特征选择与过滤

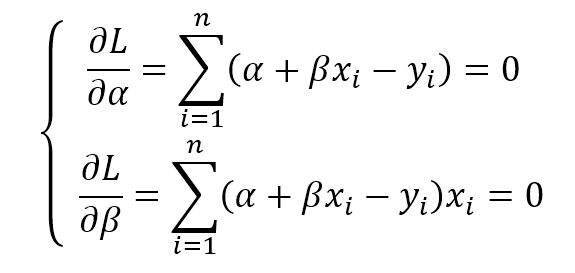

An unnormalized dataset with many features contains information proportional to the independence of all features and their variance. Let's consider a small dataset with three features, generated with random Gaussian distributions:具有许多特征的非规范化数据集包含与所有特征的独立性及其方差成比例的信息。考虑一个具有三个特性的小数据集,它是用随机高斯分布生成的:

Even without further analysis, it's obvious that the central line (with the lowest variance) is almost constant and doesn't provide any useful information. If you remember the previous chapter, the entropy H(X) is quite small, while the other two variables carry more information. A variance threshold is, therefore, a useful approach to remove all those elements whose contribution (in terms of variability and so, information) is under a predefined level. scikit-learn provides the class

Even without further analysis, it's obvious that the central line (with the lowest variance) is almost constant and doesn't provide any useful information. If you remember the previous chapter, the entropy H(X) is quite small, while the other two variables carry more information. A variance threshold is, therefore, a useful approach to remove all those elements whose contribution (in terms of variability and so, information) is under a predefined level. scikit-learn provides the class VarianceThreshold that can easily solve this problem. By applying it on the previous dataset, we get the following result:

Let's consider the following figure (without any particular interpretation):即使没有进一步的分析,很明显,中心那条线(方差最小的)几乎是恒定的,并且没有提供任何有用的信息。方差小意味着熵H(X)很小,而其他两变量含有更多信息。因此,方差阈值是一种有用的方法,可以删除所有那些贡献(就可变性和信息而言)在预定义级别之下的元素。scikit-learn提供VarianceThreshold类可以容易解决这一问题。

There are also many univariate methods that can be used in order to select the best features according to specific criteria based on F-tests and p-values, such as chi-square or ANOVA. However, their discussion is beyond the scope of this book and the reader can find further information in Freedman D., Pisani R., Purves R., Statistics, Norton & Company.也有许多单变量方法可以用来根据基于F检验和p值的特定标准来选择最佳特征,例如卡方或方差分析。已超本书的该讨论的范围。

搞清楚chi-square和ANOVA特征选择详情,并属于特征选择书本中2.6的哪类。

【 卡方检验与特征选择:

- 简介

卡方检验是一种用途非常广泛的假设检验方法,在统计推断中使用非常多,可以检测多个分类变量之间的相关性是否显著。

- 基本原理

卡方检验就是统计样本的实际观测值和理论推断值之间的偏离程度,如果chi-square值越大,二者偏差程度越大;反之,二者偏差越小。若chi-square为0,表明理论和实际值完全符合。

- 原型

1) 提出假设

H0: 总体X的分布律为$P(X= x_i )=p_i ,i=1,2,\ldots $

2)将总体X的取值范围分成k个互不相交的小区间A1,A2,A3,…,Ak,如可取:

A1=(a0,a1],A2=(a1,a2],...,Ak=(ak-1,ak),

其中:

i. a0可取$-\infty$,ak可取$+\infty$,区间的划分视具体情况而定

ii. 每个小区间所含的样本值个数不小于5

iii. 区间个数k适中

3)把落入第i个小区间的Ai的样本值的个数记作fi, 所有组频数之和f1+f2+...+fk等于样本容量n。

4)当H0为真时,根据所假设的总体理论分布,可算出总体X的值落入第i 个小区间Ai的概率pi,于是,npi就是落入第i个小区间Ai的样本值的理论频数(理论值)。

5)当H0为真时,n次试验中样本值落入第i个小区间Ai的频率fi/n与概率pi应很接近,当H0不真时,则fi/n与pi相差很大。

6)基于上面想法,引入下面统计量:

$${\chi}^2 = \sum_{i=1}^{k} {(f_i-n p_i)^2/(n p_i)}$$

,便得到了在H0假设成立的情况下服从自由度为k-1的卡方分布。

- 四表格法 四表格法是一种检验方法,主要检测两个分类变量X和Y,他们的值域分别为{x1, x2}和{y1, y2},其样本频数列联表为:

| y1 | y2 | 总计 | |

|---|---|---|---|

| x1 | a | b | a+b |

| x2 | c | d | c+d |

| 总计 | a+c | b+d | a+b+c+d |

按照上面原型: 1) 提出假设H0:X与Y有关系 2)计算chi-square值, chi-square值越大说明X与Y偏离程度越大,X与Y就相关性就越小,也就是越不相关。

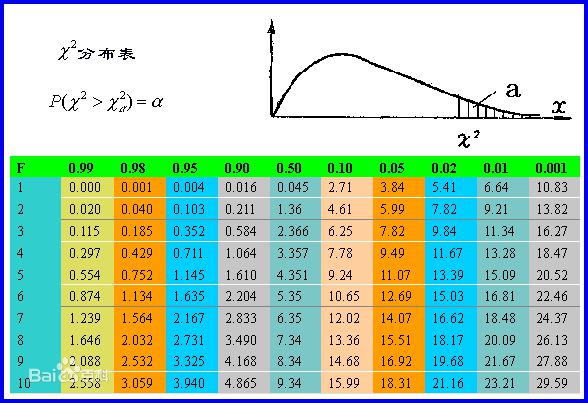

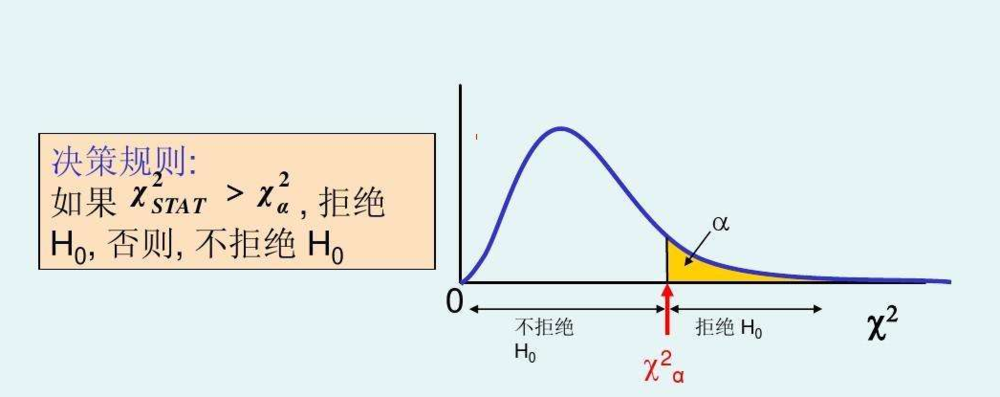

可以查阅下表,来确定X与Y是否有关系的可信度:

在上表中:

i. F代表自由度, 在表格中自由度v=(行数-1)(列数-1) ,所以四表格中,行数=列数=2,所以四表格中自由度v=1

ii. 显著水平α为第一行绿色部分。代表的二者的相关程度

iii. 从第二行,第二列起,每个值代表计算出来的chi-square值。

举例当F=1时, P(chi > 6.64) = 0.01 , 表示当chi方值>6.64的时候,相关的概率为0.01. 也就是相关的可信度是0.01。 不相关的可信度是0.99

在上表中:

i. F代表自由度, 在表格中自由度v=(行数-1)(列数-1) ,所以四表格中,行数=列数=2,所以四表格中自由度v=1

ii. 显著水平α为第一行绿色部分。代表的二者的相关程度

iii. 从第二行,第二列起,每个值代表计算出来的chi-square值。

举例当F=1时, P(chi > 6.64) = 0.01 , 表示当chi方值>6.64的时候,相关的概率为0.01. 也就是相关的可信度是0.01。 不相关的可信度是0.99

- 举例:

| 男 | 女 | 总计 | |

|---|---|---|---|

| 化妆 | 15(55) | 95(55) | 110 |

| 不化妆 | 85(45) | 5(45) | 90 |

| 总计 | 100 | 100 | 200 |

其中,每个格子的数是按假设计算得来的,这里我们假设H0:X(化妆与否)与Y(性别)不相关,那按这种假设化妆男、化妆女、不化妆男、不化妆女应该为55、55、45、45。

那么卡方=(15-55)^2/55 + (95-55)^2/55 + (85-45)^2/45 + (5-45)^2/45 = 129.30 自由度=(2-1)(2-1)=1,所以p(chi_square>129.30)远小于0.001(自由度1、卡方为129.30对应的显著性水平显然远小于0.001),H0是不能接受的,因此X与Y有很强的相关性。

卡方检验接受假设或拒绝假设的示意图:

datetime: 2018/11/18 0:58

datetime: 2018/11/18 0:58卡方检验与特征选择: 假设我们有x1到xn共n个特征和目标y,分别检验每个特征与目标的相关性,特征选择可保留相关性大者(不相关假设的显著水平低者)。 显然,机器学习的特征工程中将会把这种特征选择归入特征过滤。 】

【 ANOVA与特征选择: 百度百科垃圾,又要自己翻译wiki了Analysis of variance。有空再说。 https://www.cnblogs.com/stevenlk/p/6543628.html 这个博客不错。 】

3.7 Principal component analysis主成分分析(PCA)

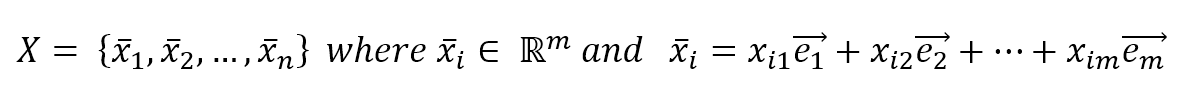

In general, if we consider a Euclidean space, we have:考虑欧式空间,有:

$$\mathbf{X}=\{\vec{x_1},\vec{x_2},\ldots,\vec{x_n}\} \ where \ \vec{x_n} \in \mathbb{R}^m \ and \ \vec{x_i}=x_{i1}\vec{e_1}+x_{i2}\vec{e_2}+\dots+x_{im}\vec{e_m}$$

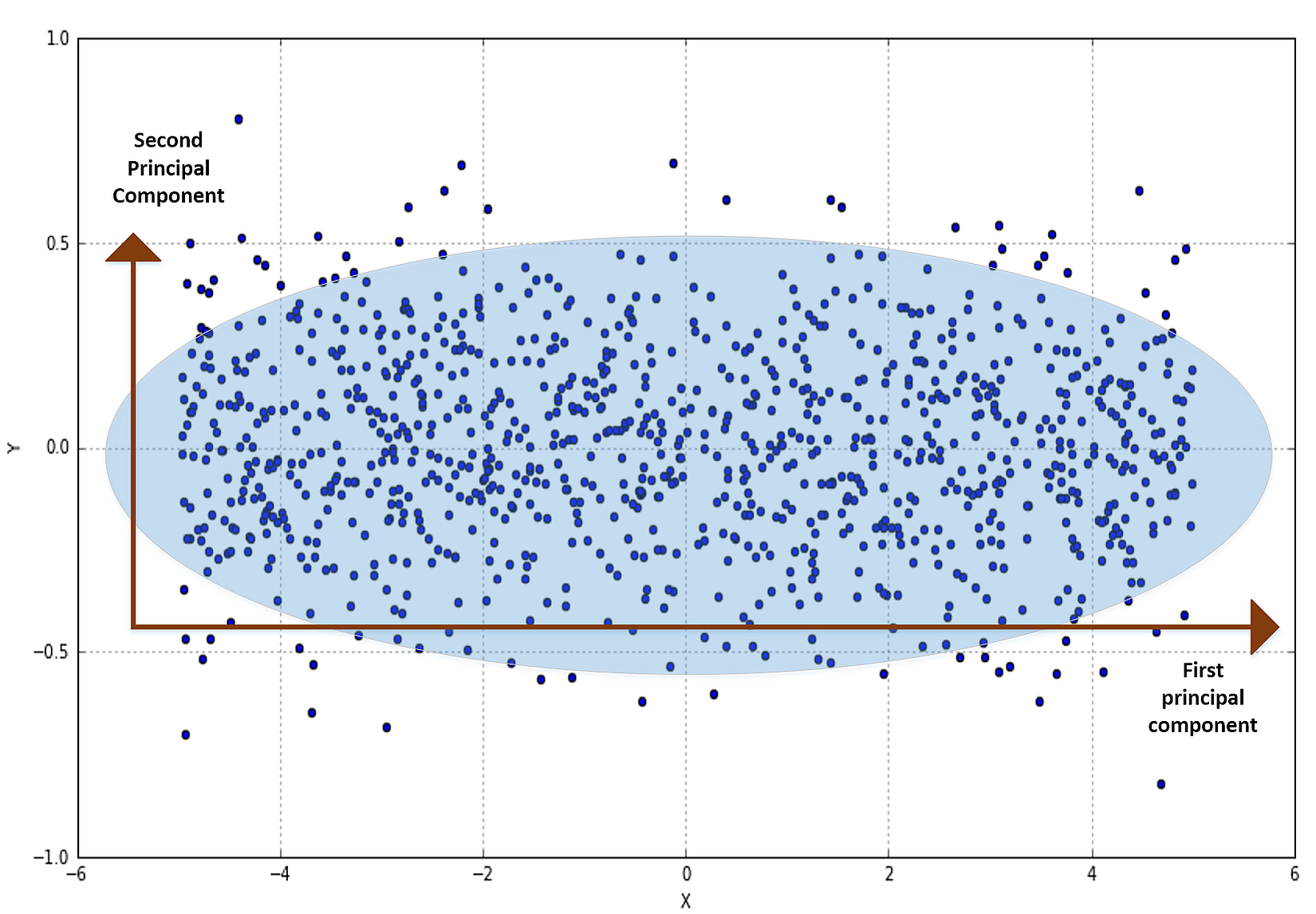

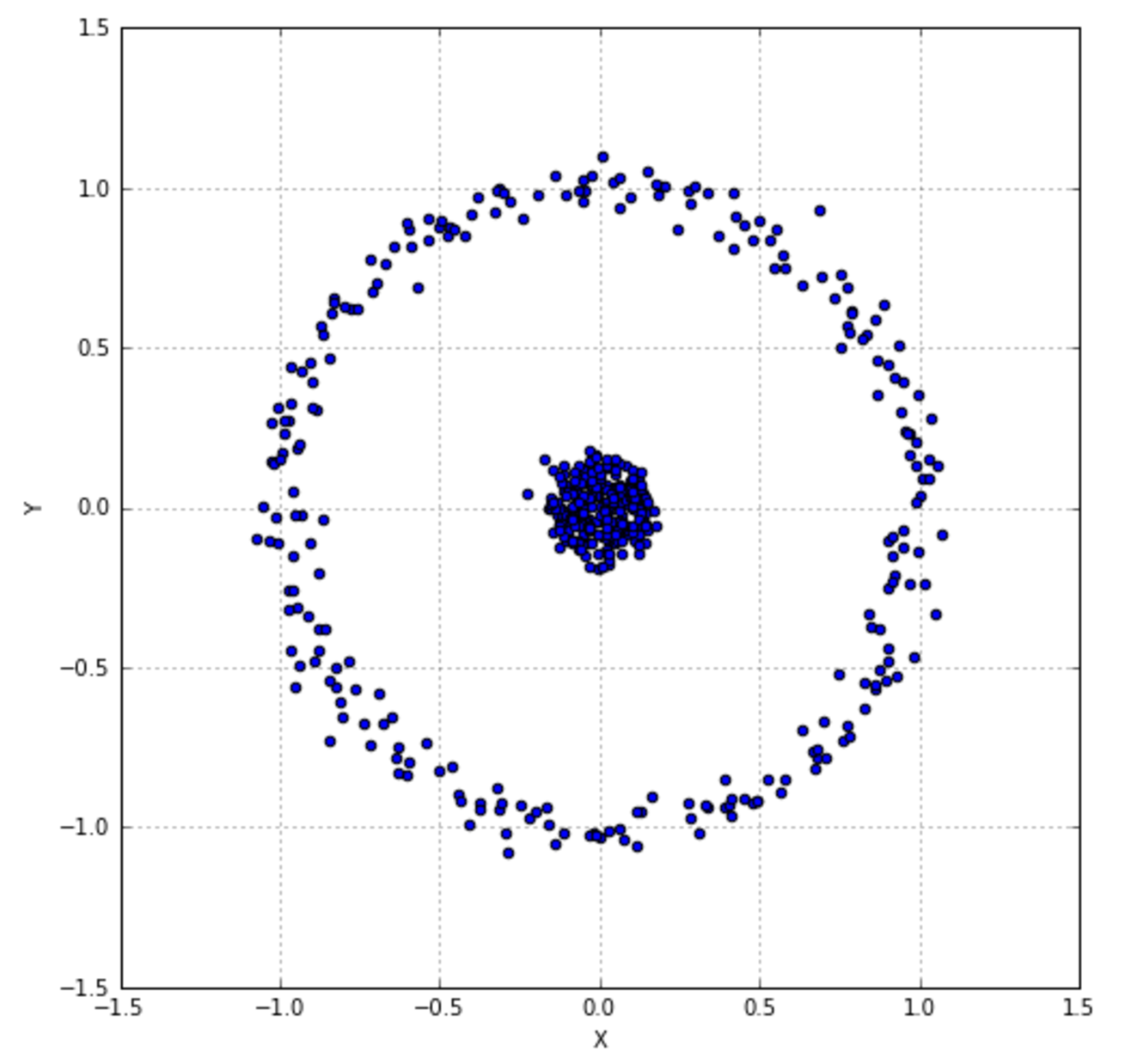

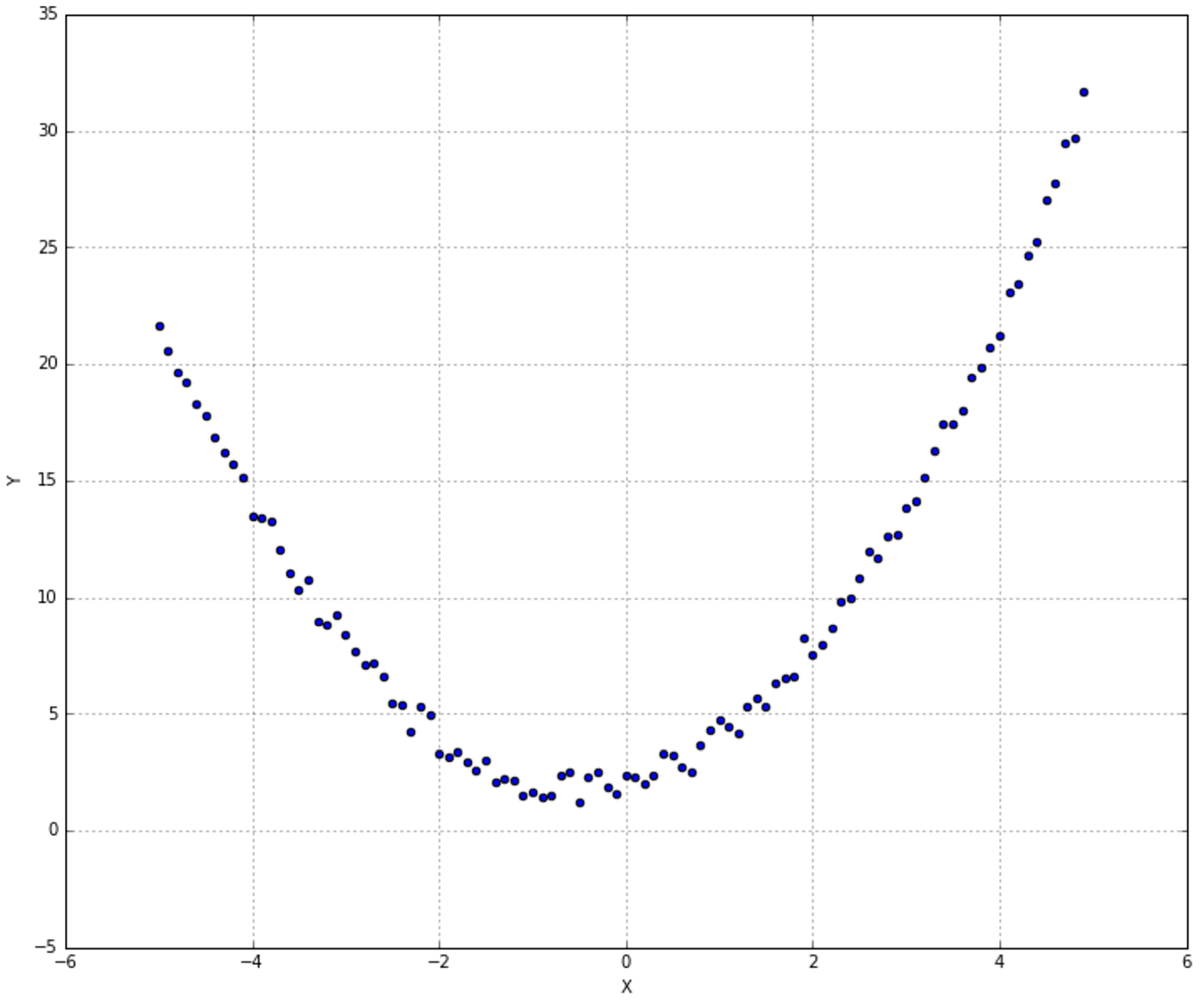

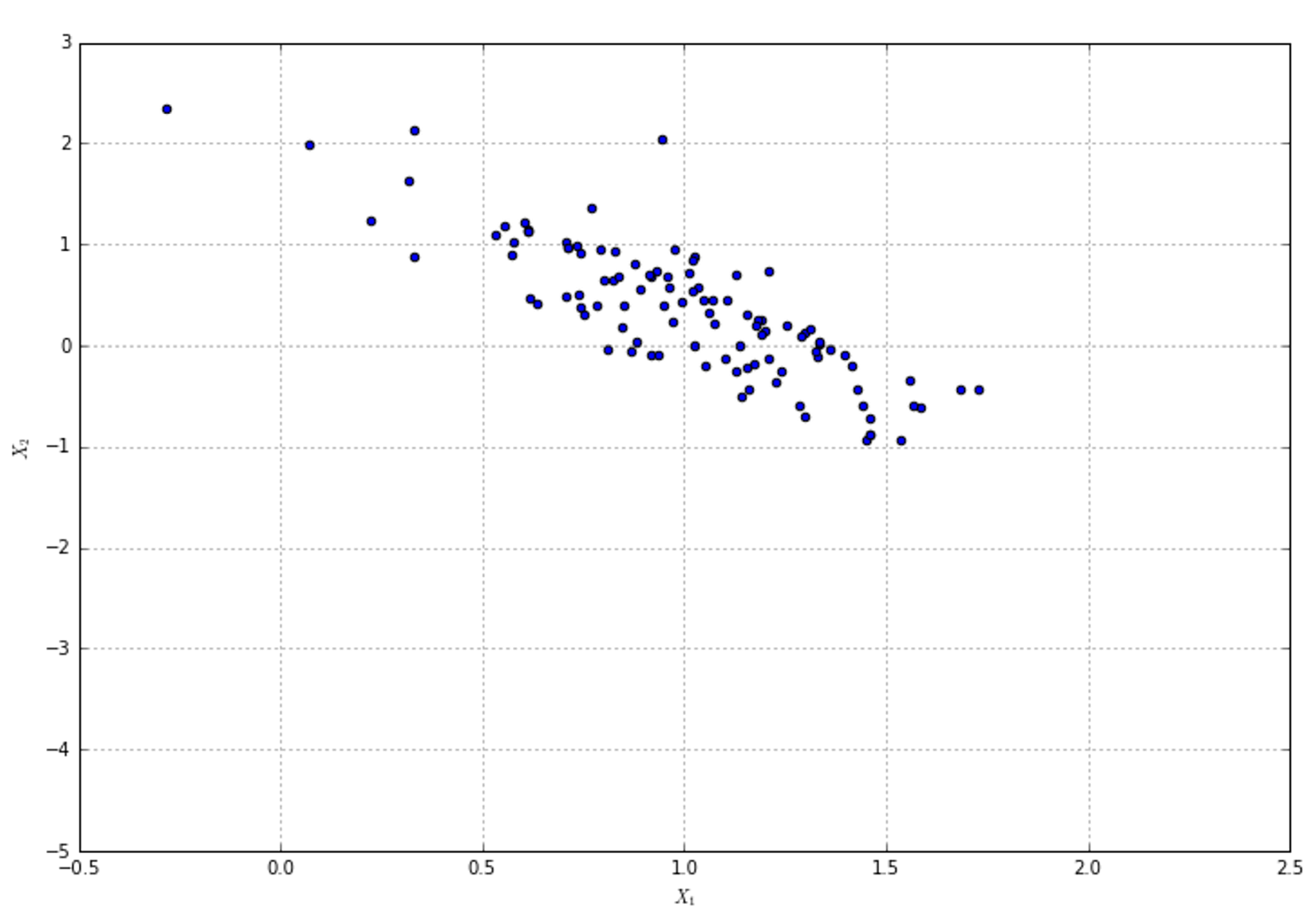

So each point is expressed using an orthonormal basis made of m linearly independent vectors. Let's consider the following figure (without any particular interpretation):所以每个点都是由m个线性无关的向量组成的标准正交基表示的。让我们考虑下面的图(没有任何特别的解释):

It doesn't matter which distributions generated X=(x1,x2), however, the variance of the horizontal component is clearly higher than the vertical one. (x1,x2)的分布并不重要,但水平成分的方差明显高于垂直成分。

It doesn't matter which distributions generated X=(x1,x2), however, the variance of the horizontal component is clearly higher than the vertical one. (x1,x2)的分布并不重要,但水平成分的方差明显高于垂直成分。

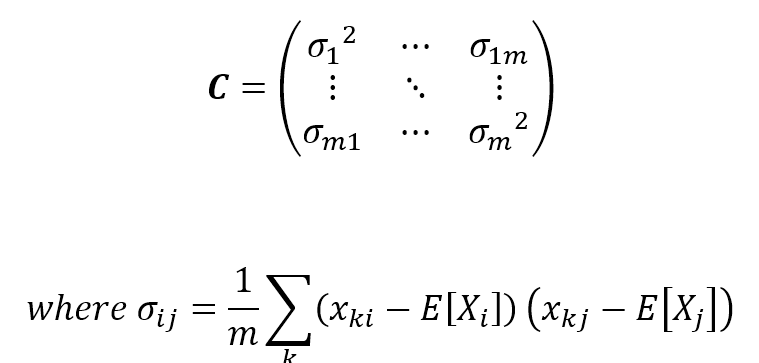

In order to assess how much information is brought by each component, and the correlation among them, a useful tool is the covariance matrix (if the dataset has zero mean, we can use the correlation matrix):为了评估每个组件带来了多少信息,以及它们之间的相关性,一个有用的工具是协方差矩阵(如果数据集的均值为零,我们可以使用相关矩阵):

$$\mathbf{C}=\begin{pmatrix} {{\sigma}_1}^2 & \cdots & {\sigma}_{1m} \\ \vdots & \ddots & \vdots \\ {\sigma}_{m1} & \cdots & {{\sigma}_m}^2 \end{pmatrix} \\

where \ {\sigma}_{ij}=\frac{1}{m}\sum_{k}{(x_{ki}-E[X_i])(x_{kj}-E[X_j])}$$

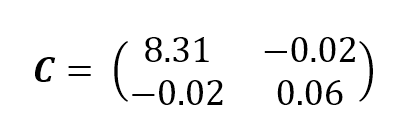

C is symmetric and positive semidefinite, so all the eigenvalues are non-negative, but what's the meaning of each value? The covariance matrix for the previous example is:C是对称的半正定的,所以所有的特征值都是非负的,但是每个值的意义是什么呢?上一个例子的协方差矩阵是:

$$\mathbf{C}=\begin{pmatrix} 8.31 & -0.02 \\ -0.02 & 0.06 \end{pmatrix}$$

As expected, the horizontal variance is quite a bit higher than the vertical one. Moreover, the other values are close to zero. If you remember the definition and, for simplicity, remove the mean term, they represent the cross-correlation between couples of components. It's obvious that in our example, x1 and x2 are uncorrelated (they're orthogonal), but in real-life examples, there could be features which present a residual cross-correlation. In terms of information theory, it means that knowing x1 gives us some information about x2 (which we already know), so they share information which is indeed doubled. So our goal is also to decorrelate X while trying to reduce its dimensionality.正如预期的那样,水平方差比垂直方差要高很多。而且,其他值接近于零。如果你记得协方差的定义,为了简单起见,去掉平均值,协方差表示组件对之间的相互关系。很明显,在我们的例子中,x1和x2基本是不相关的(它们基本是正交的,协方差接近于0),但是在真实的例子中,可能有一些特征呈现残余的相互关系。从信息论的角度来说,残余协方差意味着互信息,所以信息冗余了。所以我们的目标是在降低特征X的维数的同时,去除特征X相关性。

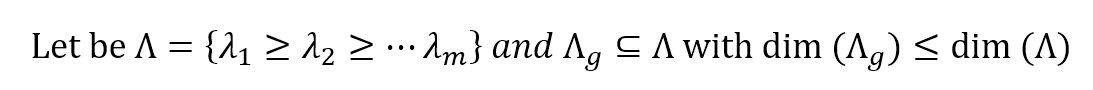

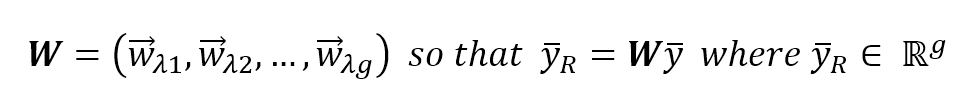

This can be achieved considering the sorted eigenvalues of C and selecting g < m values:可通过排序C的m个特征根并选择前g个最大值。

$$Let \ be \ \Lambda = \{\lambda_1 \ge \lambda_2 \ge \dots \lambda_m \} \ and \ \Lambda_g \subseteq \Lambda \ with \ dim(\Lambda_g) \le dim(\Lambda)$$

对应特征向量如下如下:

$$\mathbf{W} = (\vec{w_{\lambda_1}},\vec{w_{\lambda_2}},\ldots,\vec{w_{\lambda_g}}) \ so \ that \ \vec{y}_R=\mathbf{W}\vec{y} \ where \ \vec{y}_R \in \mathbb{R}^m$$

So, it's possible to project the original feature vectors into this new (sub-)space, where each component carries a portion of total variance and where the new covariance matrix is decorrelated to reduce useless information sharing (in terms of correlation) among different features.因此,可以将原始特征向量投射到这个新的(子)空间中,其中每个组件携带总方差的一部分,新协方差矩阵进行去相关,以减少不同特征之间无用的信息共享(即协方差项)。

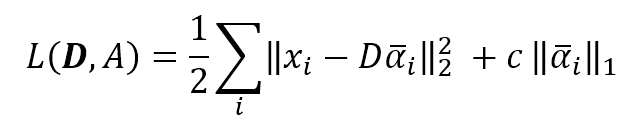

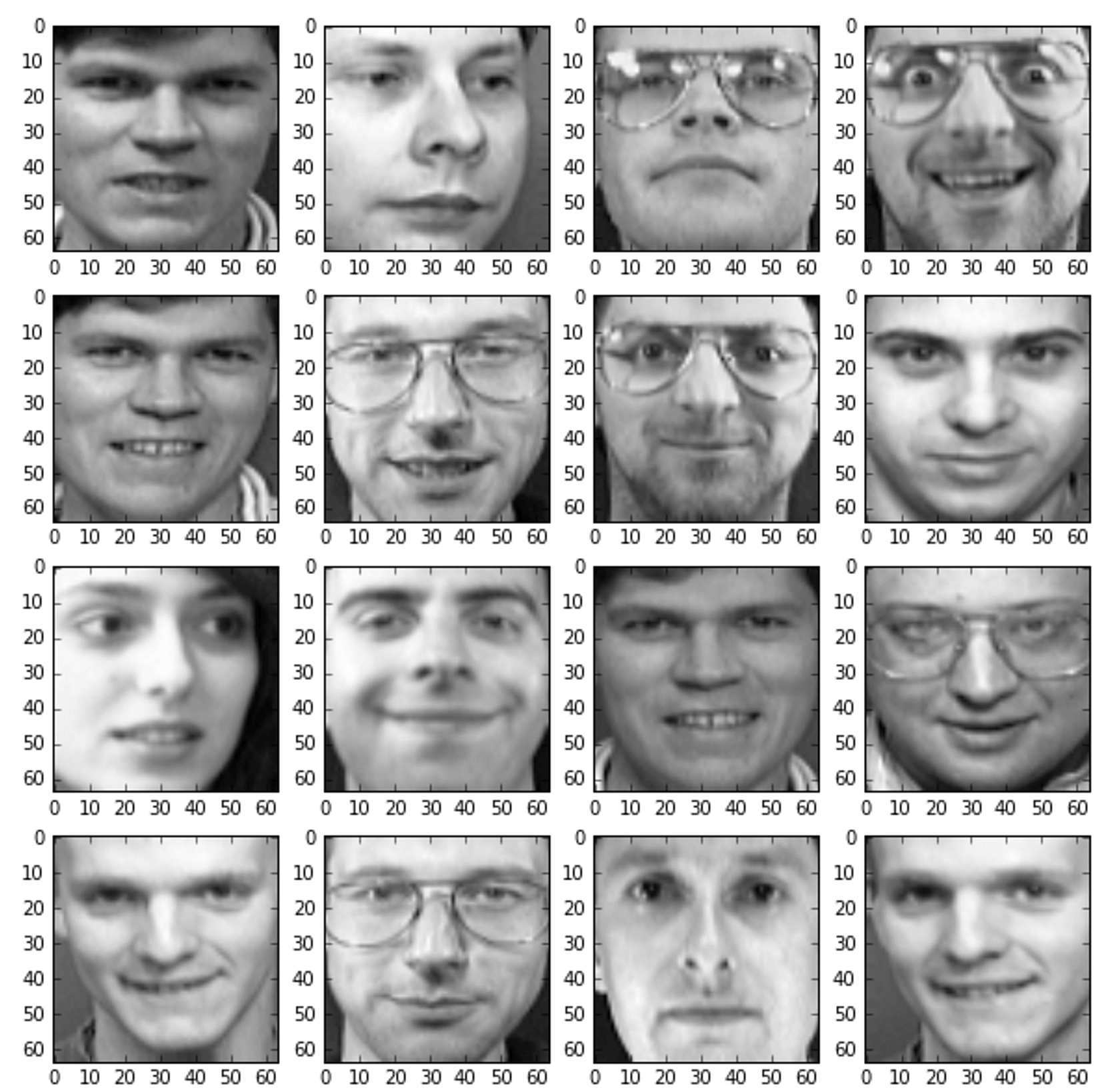

A figure with a few random MNIST handwritten digits is shown as follows:一些MNIST手写数字图像显示如下:

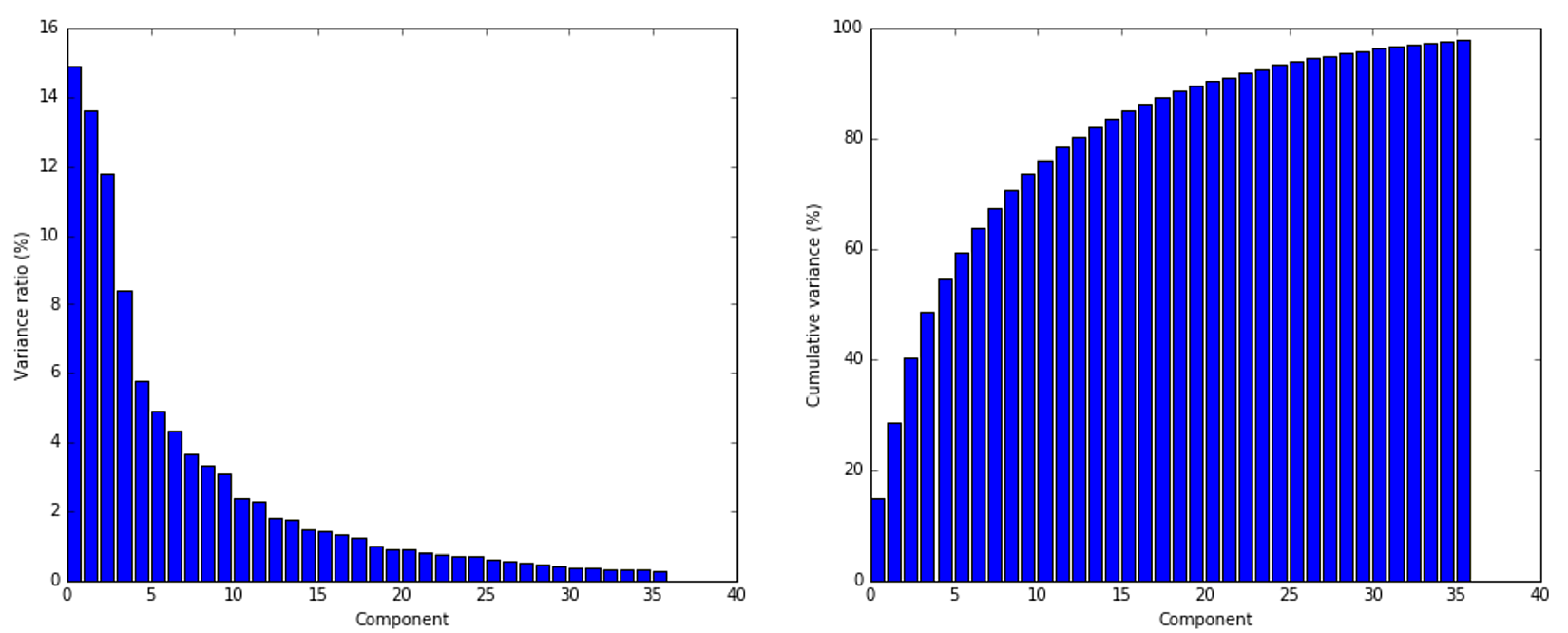

A plot for the example of MNIST digits is shown next. The left graph represents the variance ratio while the right one is the cumulative variance. It can be immediately seen how the first components are normally the most important ones in terms of information, while the following ones provide details that a classifier could also discard:MNIST数字样本作图如下。左侧代表方差比而右侧代表累积方差。可以立即看到,在信息方面,第一个成分通常是最重要的成分,而下面的成分提供了分类器也可以丢弃的细节:

A plot for the example of MNIST digits is shown next. The left graph represents the variance ratio while the right one is the cumulative variance. It can be immediately seen how the first components are normally the most important ones in terms of information, while the following ones provide details that a classifier could also discard:MNIST数字样本作图如下。左侧代表方差比而右侧代表累积方差。可以立即看到,在信息方面,第一个成分通常是最重要的成分,而下面的成分提供了分类器也可以丢弃的细节:

As expected, the contribution to the total variance decreases dramatically starting from the fifth component, so it's possible to reduce the original dimensionality without an unacceptable loss of information, which could drive an algorithm to learn wrong classes.正如预期的那样,从第5个成分开始,对总方差的贡献会急剧减少,因此可以降低原始维数,又不至于造成难以接受的信息损失,难以接受的信息损失将导致算法学习错误的类。

As expected, the contribution to the total variance decreases dramatically starting from the fifth component, so it's possible to reduce the original dimensionality without an unacceptable loss of information, which could drive an algorithm to learn wrong classes.正如预期的那样,从第5个成分开始,对总方差的贡献会急剧减少,因此可以降低原始维数,又不至于造成难以接受的信息损失,难以接受的信息损失将导致算法学习错误的类。

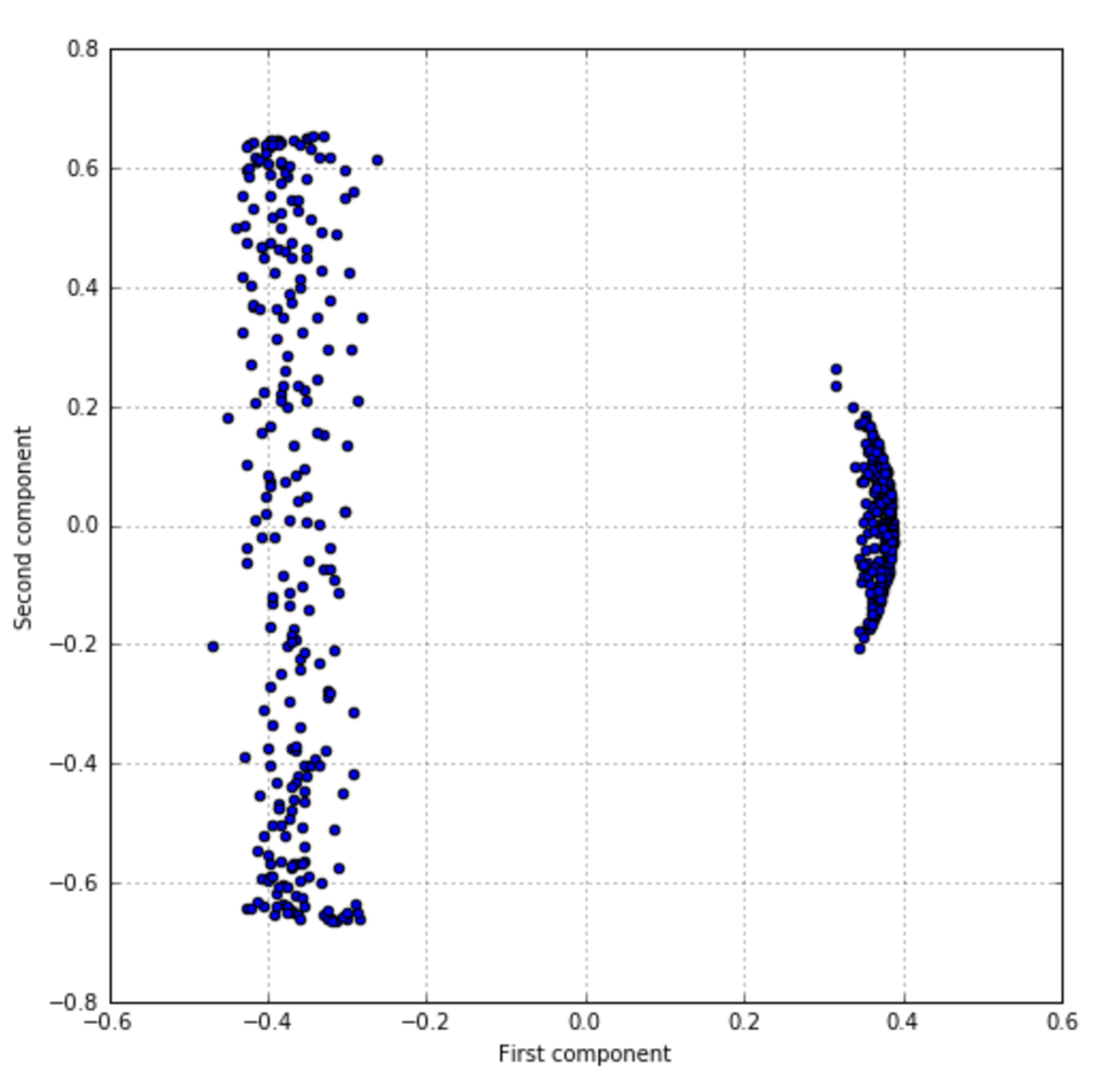

The result is shown in the following figure:(经PCA转换)结果显示如下:

This process can also partially denoise the original images by removing residual variance, which is often associated with noise or unwanted contributions (almost every calligraphy distorts some of the structural elements which are used for recognition).这个过程(指PCA)也可部分降噪 这个过程还可以通过去除残差来部分地降噪原始图像,残差通常与噪声或不必要的贡献相关联(几乎每一幅书法作品都会扭曲用于识别的某些结构元素)。

This process can also partially denoise the original images by removing residual variance, which is often associated with noise or unwanted contributions (almost every calligraphy distorts some of the structural elements which are used for recognition).这个过程(指PCA)也可部分降噪 这个过程还可以通过去除残差来部分地降噪原始图像,残差通常与噪声或不必要的贡献相关联(几乎每一幅书法作品都会扭曲用于识别的某些结构元素)。

【 请阅读pca原理

经PCA转换后的矩阵:

- 列向量线性无关;

- 维度减少。 】

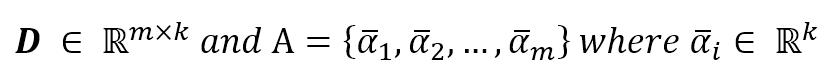

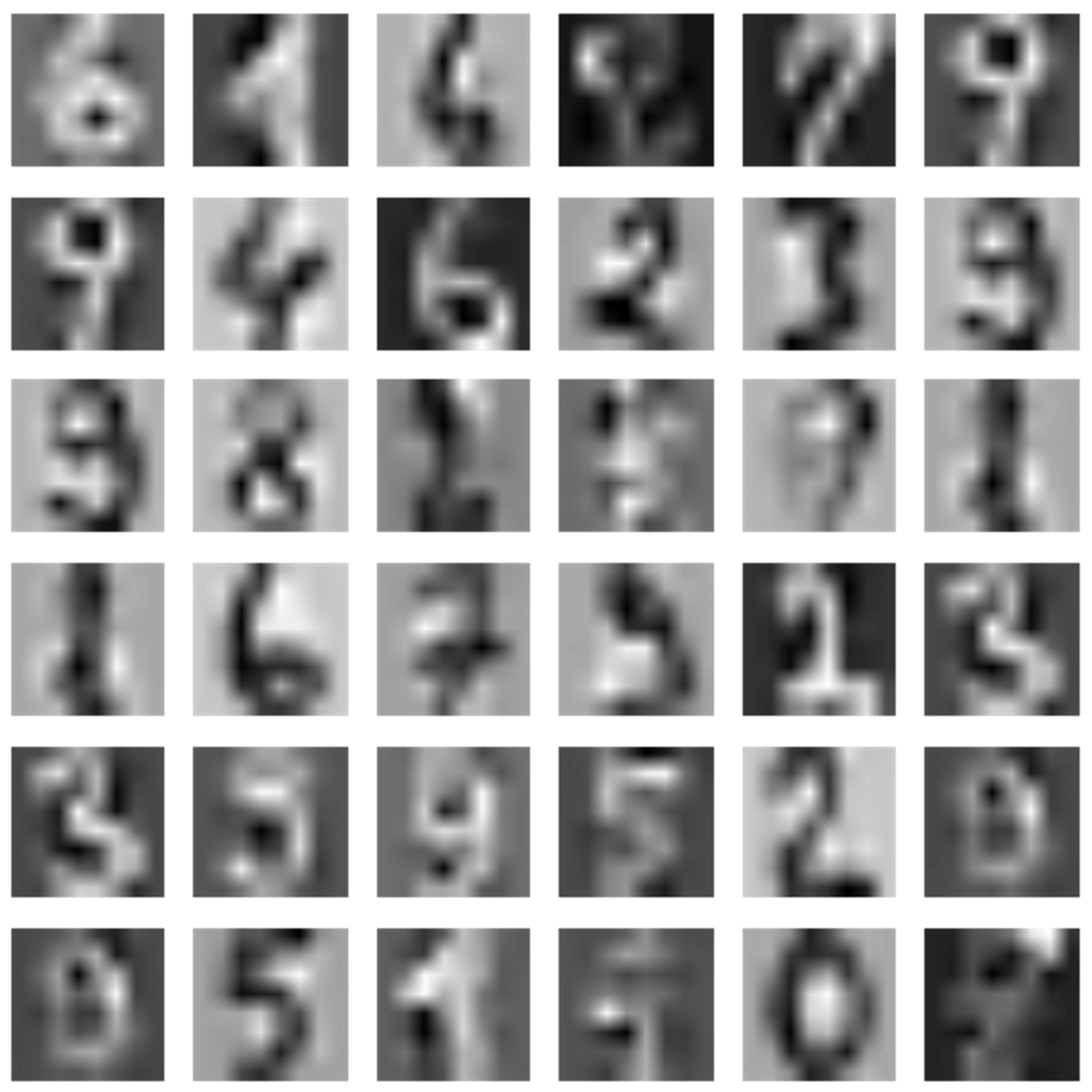

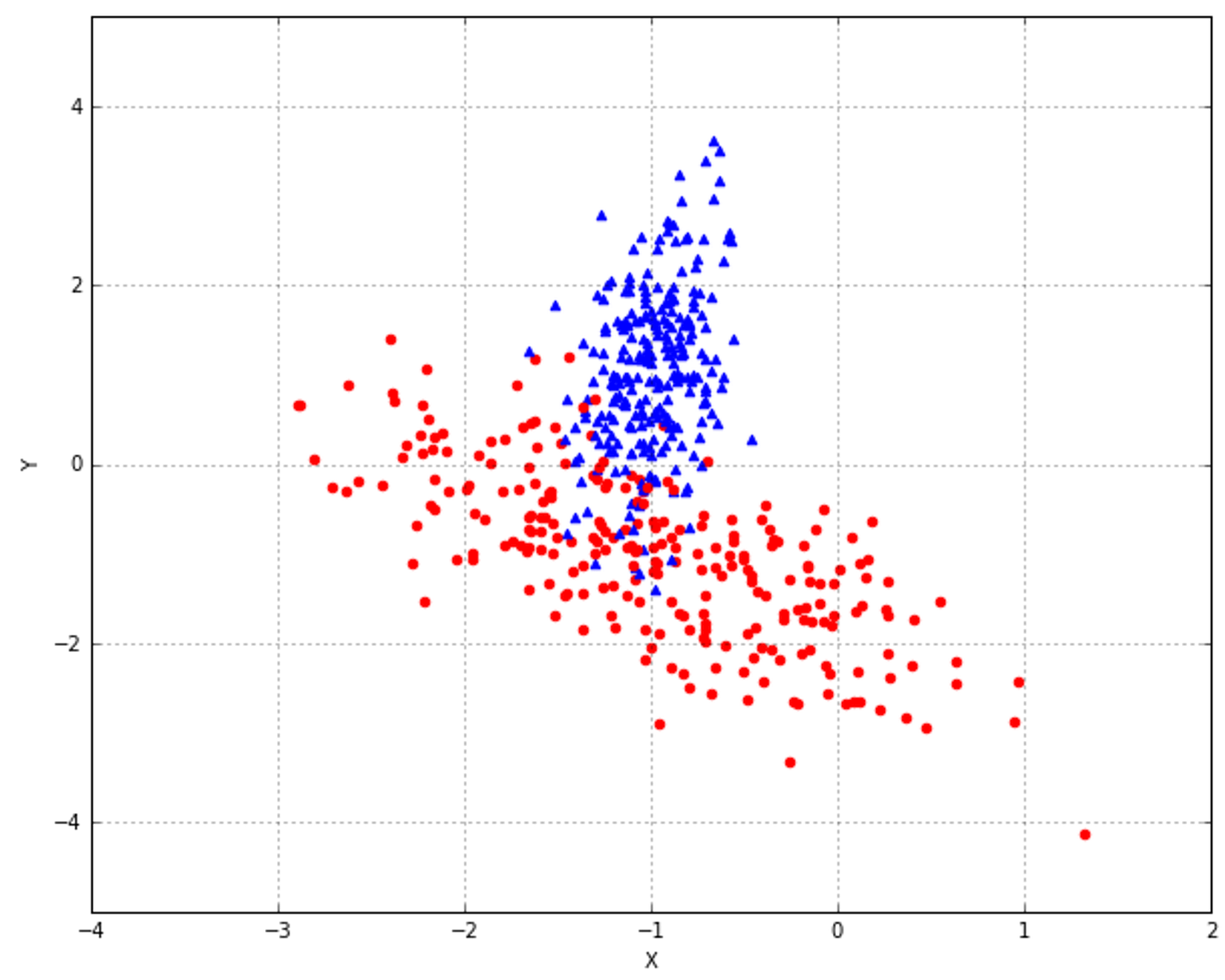

3.7.1 Non-negative matrix factorization非负矩阵分解(NNMF)

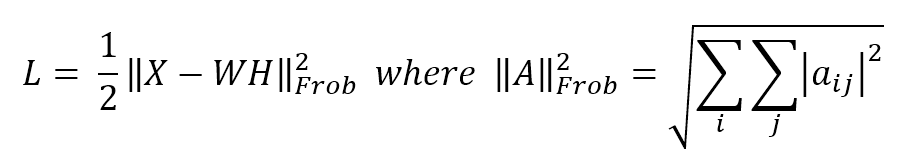

When the dataset is made up of non-negative elements, it's possible to use non-negative matrix factorization (NNMF) instead of standard PCA. The algorithm optimizes a loss function (alternatively on W and H) based on the Frobenius norm:当数据集由非负元素组成,可以由标准PCA改用非负矩阵分解(NNMF)。算法优化基于Frobenius范数的损失函数(在W和H上交替进行):

$$L = \frac{1}{2} {||\mathbf{X-WH}||}_{Frob}^2 \ where \ {||\mathbf{A}||}_{Frob}^2 = \sqrt{\sum_{i}\sum_{j} {|a_{ij}|^2}}$$

算法什么意思呢?用WH来代表原始特征矩阵。W和H均是非负矩阵,且需要W的列数小于X的列数。随意初始比如W、H为某非负矩阵,交替优化以使X-WH的Frobenius范数小到令我们满意。

If dim(X) = $n \times m$, then dim(W) = $n \times p$ and dim(H) = $p \times m$ with p equal to the number of requested components (the n_components parameter), which is normally smaller than the original dimensions n and m.如果dim(X) = $n \times m$, 那么dim(W) = $n \times p$, dim(H) = $p \times m$, p等于所请求的成分数(n_components参数),通常小于原尺寸n和m。

The final reconstruction is purely additive and it has been shown that it's particularly efficient for images or text where there are normally no non-negative elements.最后的重构是纯加法的,它已经被证明对于通常没有非负元素的图像或文本特别有效。

【 请阅读nmf或者叫nnmf原理

经稀疏PCA转换后的矩阵:

- 列向量线性无关;

- 维度减少;

- 分解出的两个矩阵只要有一个所有值非负。 】

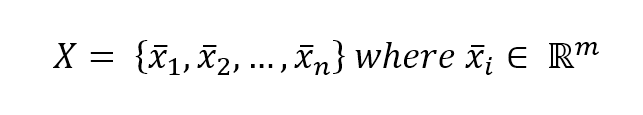

3.7.2 Sparse PCA稀疏主成分分析

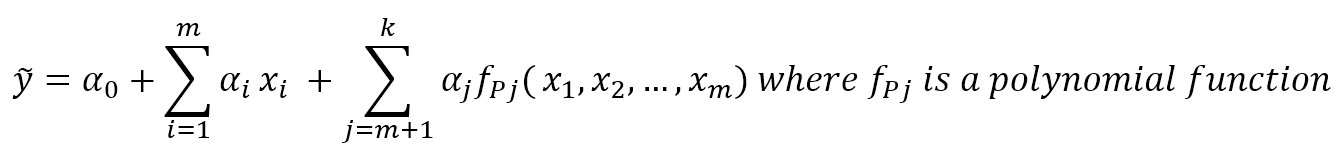

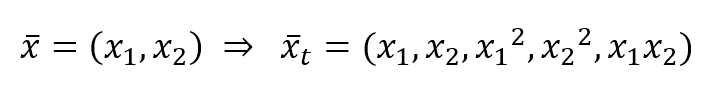

SparsePCAallows exploiting the natural sparsity of data while extracting principal components.If you think about the handwritten digits or other images that must be classified, their initial dimensionality can be quite high (a 10x10 image has 100 features). However, applying a standard PCA selects only the average most important features, assuming that every sample can be rebuilt using the same components. Simplifying, this is equivalent to:SparsePCA允许利用自然稀疏的数据,同时提取主成分。如果你考虑手写数字或其他必须分类的图像,它们的初始维度可能相当高(10x10的图像有100个特征)。然而,应用标准PCA只选择最重要的平均特征,假设每个样本都可以使用相同的成分重新构建。化简,这等于:

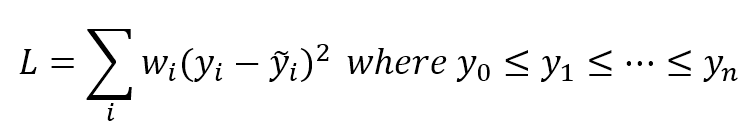

$$y_R = C_1 \cdot y_{R1} +C_2 \cdot y_{R2}+ \dots + C_g \cdot y_{Rg}$$

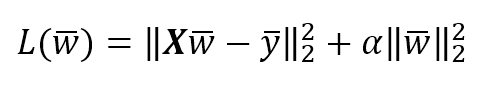

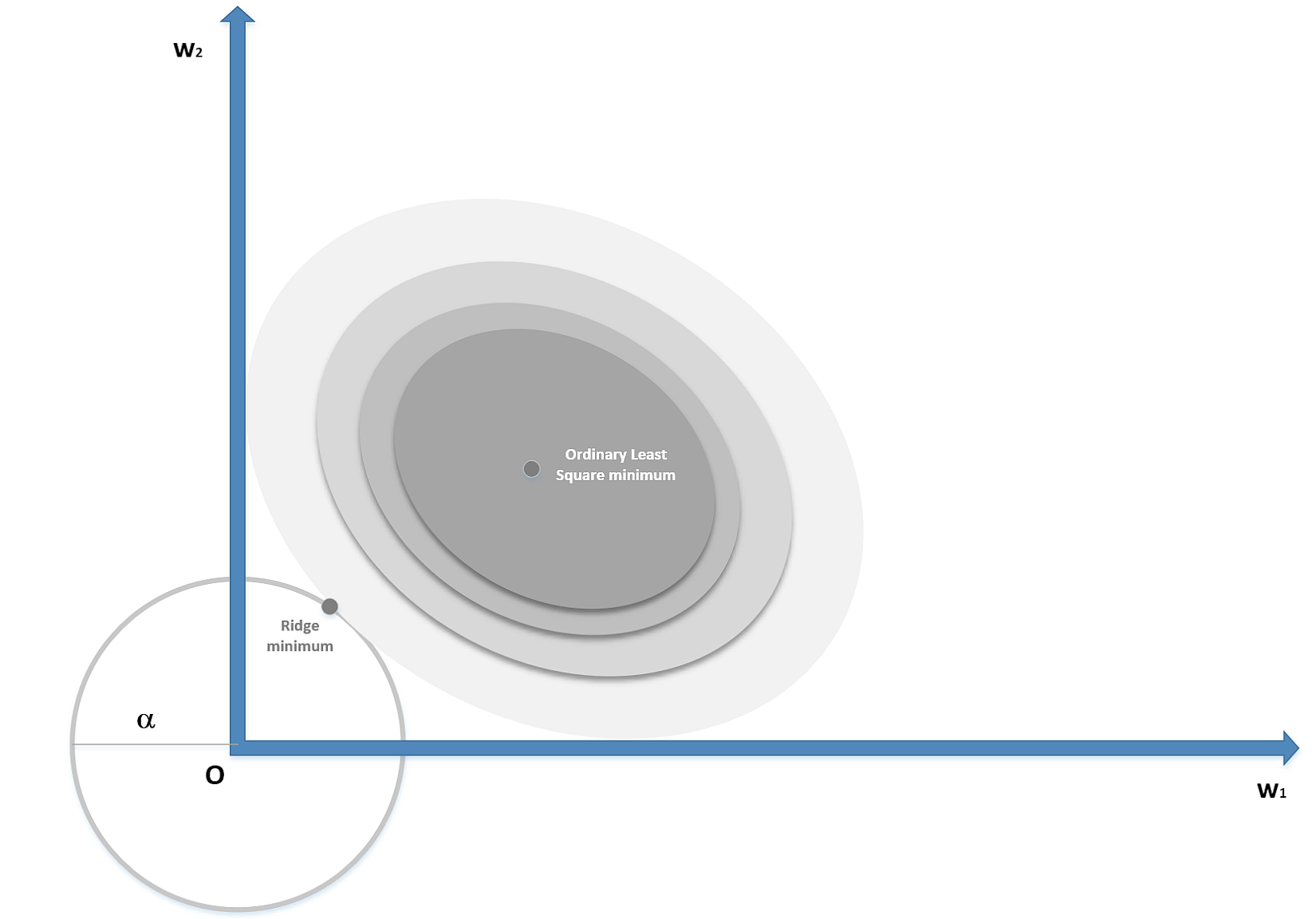

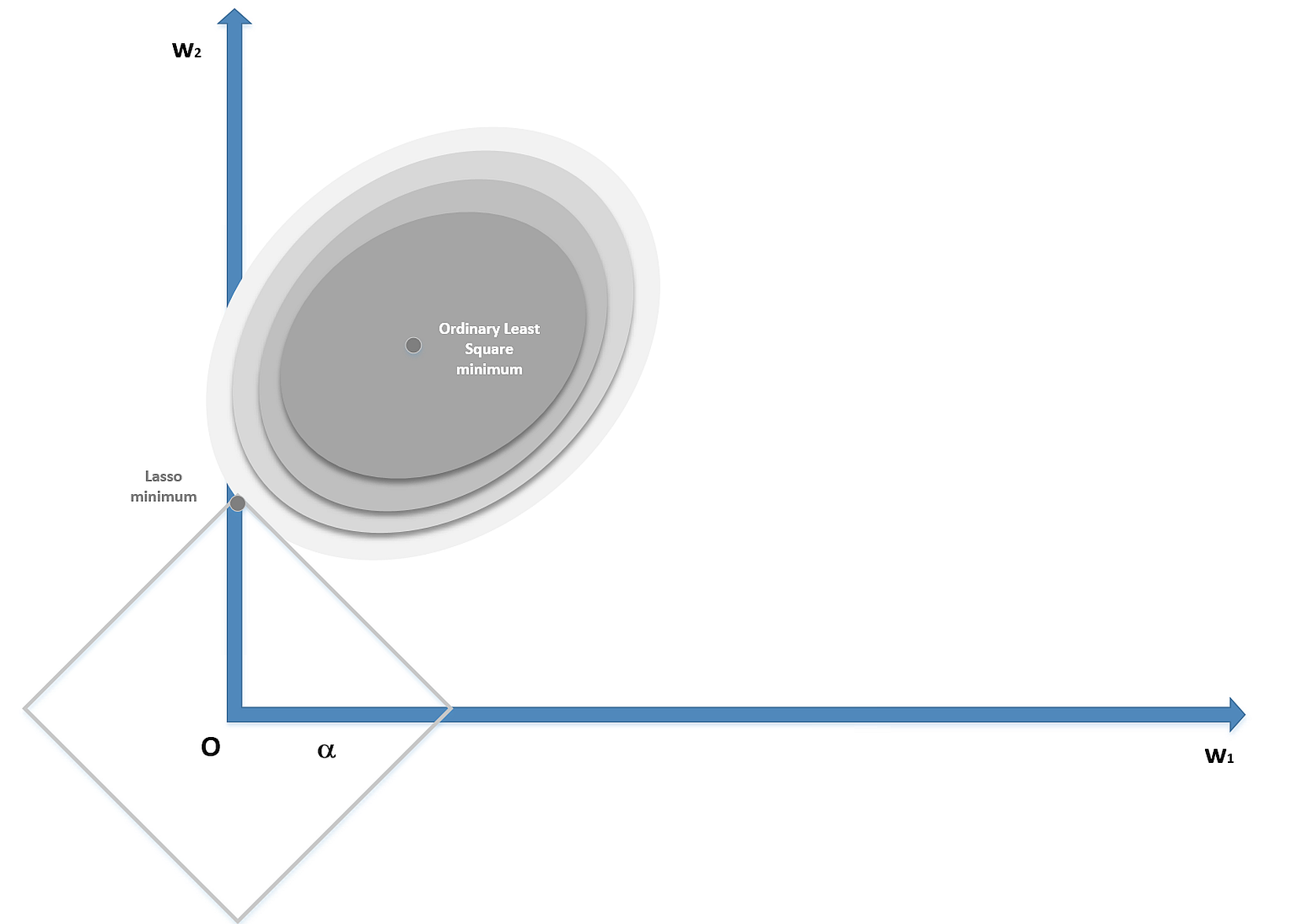

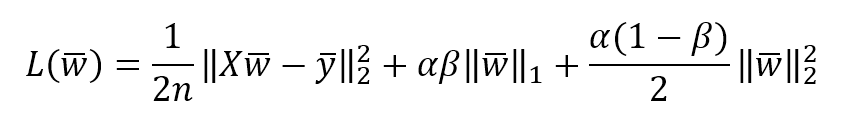

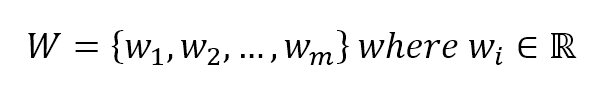

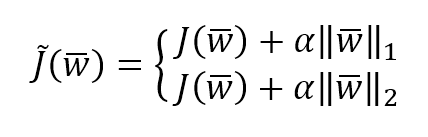

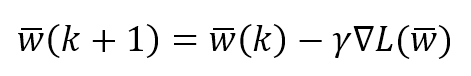

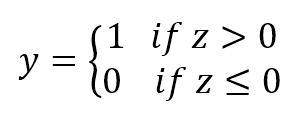

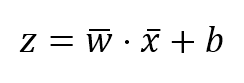

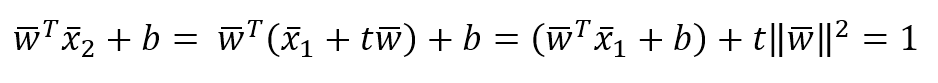

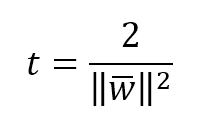

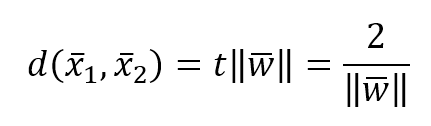

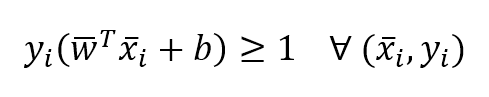

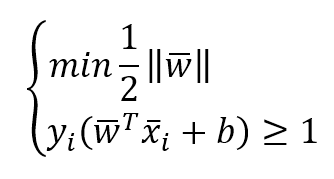

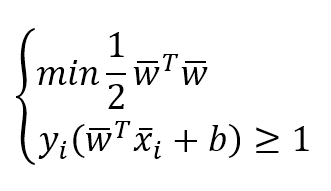

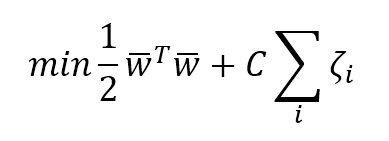

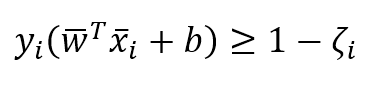

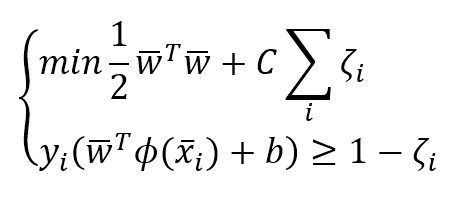

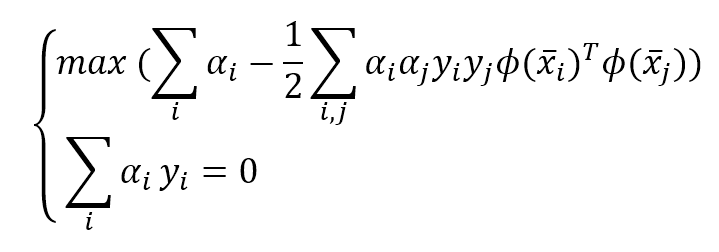

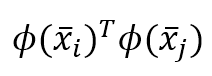

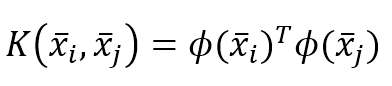

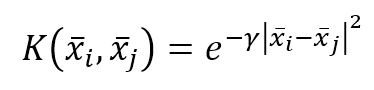

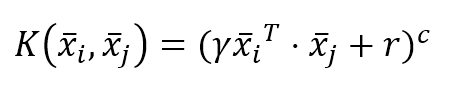

注意上式左边是原始数据中一个元素,右式是g个主成分对这个元素的模拟